
Enable performance replication

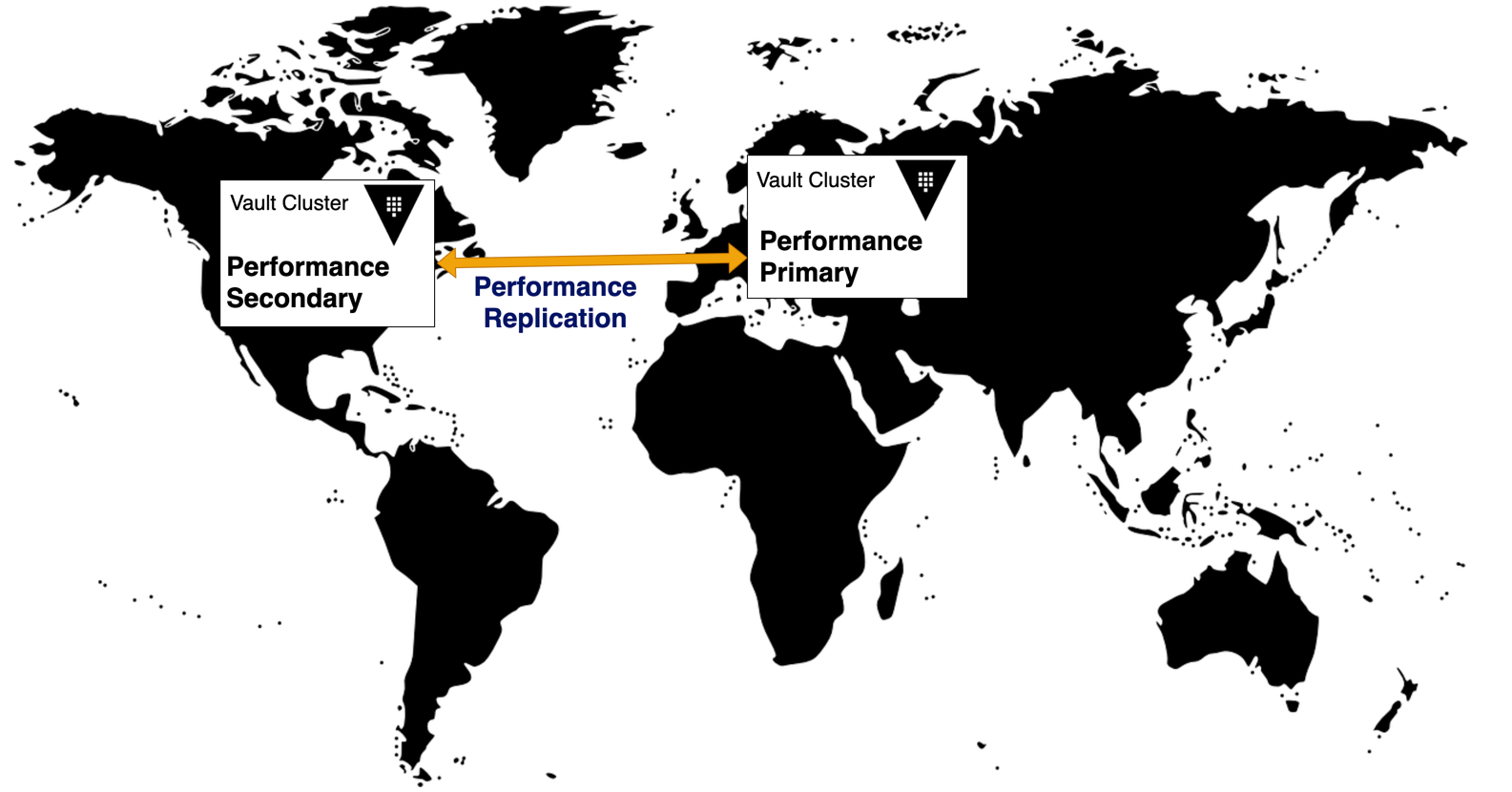

Scaling your Vault cluster to meet performance demands is critical to ensure workloads operate efficiently. Vault Enterprise and HCP Vault Dedicated support multi-region deployments so you can replicate data to regional Vault clusters to support local workloads.

Vault Enterprise offers two types of replication: disaster recovery and performance replication. If you are unfamiliar with Vault replication concepts, please review the Vault Enterprise replication documentation.

Challenge

Your organization runs workloads in different regions. While operating a Vault cluster in a single region, you observed increased latency from remote workloads, causing delays and performance issues.

Solution

Vault Enterprise Performance Replication replicates data to Vault clusters in other regions, allowing workloads in those regions to authenticate and consume Vault with lower latency than accessing a single Vault cluster over long distances.

Vault performance replication starts with a primary cluster and replicates to one or more secondary clusters. Unlike disaster recovery replication, secondary clusters maintain their own tokens and leases while sharing the Vault configuration from the primary cluster.

Prerequisites

This intermediate tutorial assumes that you have some knowledge of working with Vault and Docker.

You will need the following resources to complete the tutorial's hands-on scenario:

- A Vault Enterprise license

- Vault Enterprise binary installed on your `PATH` for CLI operations

- Docker installed

- `jq` installed (later steps use it to parse JSON output)

Lab setup

Create a working directory to store files used in this tutorial.

```shell-session
$ export HC_LEARN_LAB=/tmp/learn-vault-lab && mkdir $HC_LEARN_LAB
```

Switch to the new directory. All steps in this tutorial should be run from this directory.

```shell-session
$ cd $HC_LEARN_LAB
```

Create directories for the Vault configuration and integrated storage data for each cluster.

```shell-session
$ mkdir -p cluster-pri/config cluster-pri/data cluster-sec/config cluster-sec/data
```

Create an environment variable with your Vault Enterprise license.

Example:

```shell-session
$ export VAULT_LICENSE=02MV.....snip...LMN0d
```

Create a Docker network for this tutorial. The network simulates two Vault clusters that have network connectivity between them.

```shell-session
$ docker network create learn-vault
a4cc891f903e8f20c4d5a40f2e9dfd77bb56793a06806e54fd4d228a8243d058
```
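Before starting the clusters, you can optionally confirm that the tools this tutorial calls (`vault`, `docker`, and `jq`, which later steps use to parse JSON) are on your `PATH` and that `VAULT_LICENSE` is set. The following is a minimal sketch; `check_prereqs` is a hypothetical helper, not part of Vault or Docker.

```shell
# Hypothetical helper (not part of Vault or Docker): check that required
# commands are on PATH and that a Vault Enterprise license is exported.
# Arguments: optional list of commands (default: vault docker jq).
check_prereqs() {
  local missing=0 cmd
  # Fall back to the tools this tutorial uses when no arguments are given.
  [ "$#" -gt 0 ] || set -- vault docker jq
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing required command: $cmd" >&2
      missing=1
    fi
  done
  if [ -z "${VAULT_LICENSE:-}" ]; then
    echo "VAULT_LICENSE is not set" >&2
    missing=1
  fi
  return "$missing"
}
```

Run `check_prereqs` with no arguments; it reports every missing item on stderr and returns non-zero if anything is missing.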

Start primary cluster

Create a configuration file for the primary cluster.

```shell-session
$ cat > cluster-pri/config/vault-server.hcl <<EOF
ui = true

listener "tcp" {
  tls_disable     = 1
  address         = "[::]:8200"
  cluster_address = "[::]:8201"
}

storage "raft" {
  path = "/vault/file"
}
EOF
```

Start the primary cluster.

```shell-session
$ docker run \
    --name=vault-enterprise-cluster-pri \
    --hostname=cluster-pri \
    --network=learn-vault \
    --publish 8200:8200 \
    --env VAULT_ADDR="http://localhost:8200" \
    --env VAULT_CLUSTER_ADDR="http://cluster-pri:8201" \
    --env VAULT_API_ADDR="http://cluster-pri:8200" \
    --env VAULT_RAFT_NODE_ID="cluster-pri" \
    --env VAULT_LICENSE="$VAULT_LICENSE" \
    --volume $PWD/cluster-pri/config/:/vault/config \
    --volume $PWD/cluster-pri/data/:/vault/file:z \
    --cap-add=IPC_LOCK \
    --detach \
    --rm \
    hashicorp/vault-enterprise vault server -config=/vault/config/vault-server.hcl
```

Example output:

```plaintext
Unable to find image 'hashicorp/vault-enterprise:latest' locally
latest: Pulling from hashicorp/vault-enterprise
73baa7ef167e: Pull complete
15c30ea739d2: Pull complete
7ac0bfe40225: Pull complete
ca40e0834a07: Pull complete
8496f662e13b: Pull complete
6bfd5d33bad3: Pull complete
33df159548ca: Pull complete
Digest: sha256:57a7ceffed6bb44ece54511a3d3507b099c3b0adc889fac3514beb0aaaed8601
Status: Downloaded newer image for hashicorp/vault-enterprise:latest
586e9996620170e13baef6bc0f8c614852cd84a837fd65bdd51df2aa506b6d6d
```

Verify the primary cluster container has started.

```shell-session
$ docker ps -f name=vault-enterprise --format "table {{.Names}}\t{{.Status}}"
NAMES                          STATUS
vault-enterprise-cluster-pri   Up 3 seconds
```

Initialize the primary cluster and write the unseal keys to a file.

Writing the unseal key to a file simplifies the tutorial steps. For production clusters, the unseal keys must be kept secure.

```shell-session
$ vault operator init \
    -address=http://127.0.0.1:8200 \
    -key-shares=1 \
    -key-threshold=1 \
    > $PWD/cluster-pri/.init
```

Export an environment variable with the primary cluster's unseal key.

```shell-session
$ export CLUSTER_PRI_UNSEAL_KEY="$(grep 'Unseal Key 1' cluster-pri/.init | awk '{print $NF}')" && echo $CLUSTER_PRI_UNSEAL_KEY
037yeI1EmdcgtNy+h2+vPGCyEWOYecwrarW/lBqsiSA=
```

The unseal key is printed to ensure the value was properly set. If no key is returned, verify the key is present in the `.init` file.

Export an environment variable with the primary cluster's root token.

```shell-session
$ export CLUSTER_PRI_ROOT_TOKEN="$(grep 'Initial Root Token' cluster-pri/.init | awk '{print $NF}')" && echo $CLUSTER_PRI_ROOT_TOKEN
hvs.aQrUNCbqnwq3t4tw5K6UcrWF
```

The root token is printed to ensure the value was properly set. If no token is returned, verify the token is present in the `.init` file.

Unseal the primary cluster.

```shell-session
$ vault operator unseal -address=http://127.0.0.1:8200 $CLUSTER_PRI_UNSEAL_KEY
```

Example output:

```plaintext
Key                     Value
---                     -----
Seal Type               shamir
Initialized             true
Sealed                  false
Total Shares            1
Threshold               1
Version                 1.17.1+ent
Build Date              2024-06-25T16:39:02Z
Storage Type            raft
Cluster Name            vault-cluster-4b364521
Cluster ID              0605f249-b686-6282-88cd-35d70f1b0113
HA Enabled              true
HA Cluster              n/a
HA Mode                 standby
Active Node Address     <none>
Raft Committed Index    54
Raft Applied Index      53
```

The `Sealed` status is now `false`; the cluster is unsealed and ready to be configured.

Export an environment variable with the address of the primary cluster.

```shell-session
$ export PRI_ADDR=http://127.0.0.1:8200
```
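The `grep`/`awk` pipelines above take the last word on the matching line of the `.init` file. If you repeat these steps often, a small wrapper can do the same extraction and fail loudly when a field is missing instead of silently exporting an empty value. This is a minimal sketch; `init_field` is a hypothetical helper, and it assumes the default (non-JSON) `vault operator init` output format used in this tutorial, where lines look like `Unseal Key 1: <value>`.

```shell
# Hypothetical helper (not part of Vault): print the value of a labelled field,
# such as "Unseal Key 1" or "Initial Root Token", from a file written by
# `vault operator init`. Returns non-zero if the field is missing or empty.
init_field() {
  local file="$1" label="$2" value
  # Match only lines that start with "<label>:" and print their last field.
  value=$(awk -v l="$label:" 'index($0, l) == 1 { print $NF; exit }' "$file")
  if [ -z "$value" ]; then
    echo "no '$label' value found in $file" >&2
    return 1
  fi
  printf '%s\n' "$value"
}
```

For example, `export CLUSTER_SEC_UNSEAL_KEY="$(init_field cluster-sec/.init 'Unseal Key 1')"`. Anchoring on the trailing colon keeps `Unseal Key 1` from also matching `Unseal Key 10` if you ever use more key shares. Alternatively, `vault operator init -format=json` produces JSON that `jq` can parse.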

Start secondary cluster

Create a configuration file for the secondary cluster.

```shell-session
$ cat > cluster-sec/config/vault-server.hcl <<EOF
ui = true

listener "tcp" {
  tls_disable     = 1
  address         = "[::]:8220"
  cluster_address = "[::]:8221"
}

storage "raft" {
  path = "/vault/file"
}
EOF
```

Start the secondary cluster.

```shell-session
$ docker run \
    --name=vault-enterprise-cluster-sec \
    --hostname=cluster-sec \
    --network=learn-vault \
    --publish 8220:8220 \
    --env VAULT_ADDR="http://localhost:8220" \
    --env VAULT_CLUSTER_ADDR="http://cluster-sec:8221" \
    --env VAULT_API_ADDR="http://cluster-sec:8220" \
    --env VAULT_RAFT_NODE_ID="cluster-sec" \
    --env VAULT_LICENSE="$VAULT_LICENSE" \
    --volume $PWD/cluster-sec/config/:/vault/config \
    --volume $PWD/cluster-sec/data/:/vault/file:z \
    --cap-add=IPC_LOCK \
    --detach \
    --rm \
    hashicorp/vault-enterprise vault server -config=/vault/config/vault-server.hcl
```

Example output:

```plaintext
586e9996620170e13baef6bc0f8c614852cd84a837fd65bdd51df2aa506b6d6d
```

Verify the secondary cluster container has started.

```shell-session
$ docker ps -f name=vault-enterprise --format "table {{.Names}}\t{{.Status}}"
NAMES                          STATUS
vault-enterprise-cluster-sec   Up 8 seconds
vault-enterprise-cluster-pri   Up 7 minutes
```

Initialize the secondary cluster and write the unseal keys to a file.

```shell-session
$ vault operator init \
    -address=http://127.0.0.1:8220 \
    -key-shares=1 \
    -key-threshold=1 \
    > $PWD/cluster-sec/.init
```

Export an environment variable with the secondary cluster's unseal key.

```shell-session
$ export CLUSTER_SEC_UNSEAL_KEY="$(grep 'Unseal Key 1' cluster-sec/.init | awk '{print $NF}')" && echo $CLUSTER_SEC_UNSEAL_KEY
037yeI1EmdcgtNy+h2+vPGCyEWOYecwrarW/lBqsiSA=
```

Export an environment variable with the secondary cluster's root token.

```shell-session
$ export CLUSTER_SEC_ROOT_TOKEN="$(grep 'Initial Root Token' cluster-sec/.init | awk '{print $NF}')" && echo $CLUSTER_SEC_ROOT_TOKEN
hvs.aQrUNCbqnwq3t4tw5K6UcrWF
```

Unseal the secondary cluster.

```shell-session
$ vault operator unseal -address=http://127.0.0.1:8220 $CLUSTER_SEC_UNSEAL_KEY
```

Example output:

```plaintext
Key                     Value
---                     -----
Seal Type               shamir
Initialized             true
Sealed                  false
Total Shares            1
Threshold               1
Version                 1.17.1+ent
Build Date              2024-06-25T16:39:02Z
Storage Type            raft
Cluster Name            vault-cluster-4b364521
Cluster ID              0605f249-b686-6282-88cd-35d70f1b0113
HA Enabled              true
HA Cluster              n/a
HA Mode                 standby
Active Node Address     <none>
Raft Committed Index    54
Raft Applied Index      53
```

The `Sealed` status is now `false`; the cluster is unsealed and ready to be configured.

Export an environment variable with the address of the secondary cluster.

```shell-session
$ export SEC_ADDR=http://127.0.0.1:8220
```
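The primary and secondary configuration files differ only in their listener ports (8200/8201 versus 8220/8221). If you want to add more clusters, a template function avoids copy-paste mistakes. The sketch below is a hypothetical helper that reproduces the tutorial's configuration, including `tls_disable = 1`, which is only appropriate for a local lab.

```shell
# Hypothetical helper (not part of Vault): write the tutorial's Vault server
# configuration into DIR/config/vault-server.hcl, varying only the ports.
# Arguments: DIR API_PORT CLUSTER_PORT
write_vault_config() {
  local dir="$1" api_port="$2" cluster_port="$3"
  mkdir -p "$dir/config"
  # Unquoted EOF so that $api_port and $cluster_port are expanded.
  cat > "$dir/config/vault-server.hcl" <<EOF
ui = true

listener "tcp" {
  tls_disable     = 1
  address         = "[::]:$api_port"
  cluster_address = "[::]:$cluster_port"
}

storage "raft" {
  path = "/vault/file"
}
EOF
}
```

For example, `write_vault_config cluster-sec 8220 8221` writes the same file as the secondary cluster step. Remember that the `docker run` port mappings and `VAULT_*_ADDR` variables must use matching ports.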

Configure the primary cluster

Before enabling performance replication, create a policy named `superpolicy`, enable the `userpass` auth method, and create a user named `superuser` on the primary cluster.

Log into the primary cluster.

```shell-session
$ echo $CLUSTER_PRI_ROOT_TOKEN | xargs vault login -address=$PRI_ADDR
Key                  Value
---                  -----
token                hvs.aQrUNCbqnwq3t4tw5K6UcrWF
token_accessor       VzzI18zBxpMBWUo1e3yruLFe
token_duration       ∞
token_renewable      false
token_policies       ["root"]
identity_policies    []
policies             ["root"]
```

Create a `superpolicy` policy.

```shell-session
$ vault policy write -address=$PRI_ADDR superpolicy - <<EOF
path "*" {
  capabilities = ["create", "read", "update", "delete", "list", "sudo"]
}
EOF
```

Example output:

```plaintext
Success! Uploaded policy: superpolicy
```

Enable the `userpass` auth method.

```shell-session
$ vault auth enable -address=$PRI_ADDR userpass
Success! Enabled userpass auth method at: userpass/
```

Create a new user named `superuser` in `userpass` and attach the `superpolicy` policy.

```shell-session
$ vault write -address=$PRI_ADDR auth/userpass/users/superuser password="vaultMagic" policies="superpolicy"
Success! Data written to: auth/userpass/users/superuser
```

Enable the k/v secrets engine named `replicatedkv`.

```shell-session
$ vault secrets enable -address=$PRI_ADDR -path=replicatedkv kv-v2
Success! Enabled the kv-v2 secrets engine at: replicatedkv/
```

When you configure performance replication, Vault will replicate this mount to the secondary cluster.

Enable the k/v secrets engine named `localkv`, and mark the secrets engine as local.

```shell-session
$ vault secrets enable -address=$PRI_ADDR -path=localkv -local kv-v2
Success! Enabled the kv-v2 secrets engine at: localkv/
```

When you configure performance replication, this mount is not replicated to the secondary cluster because you marked it as local-only using the `-local` flag.

Enable performance replication on the primary cluster.

```shell-session
$ vault write -address=$PRI_ADDR -f sys/replication/performance/primary/enable
WARNING! The following warnings were returned from Vault:

  * This cluster is being enabled as a primary for replication. Vault will be unavailable for a brief period and will resume service shortly.
```

Create a secondary bootstrap token on the primary cluster and store it in a variable. The command prints the token value so you can confirm that it completed successfully.

```shell-session
$ SEC_BOOTSTRAP=$(vault write -address=$PRI_ADDR -format=json sys/replication/performance/primary/secondary-token id=secondary | jq -r '.wrap_info | .token') && echo Token: $SEC_BOOTSTRAP
Token: eyJhbGciOiJFUzUxMiIsInR5cCI6IkpXVCJ9...snip...-bz8obglE1OI2GDZk6rOWoSj9Rxvx2TZ4n8YV
```

The `-format=json` flag is placed before the path because the Vault CLI parses flags only when they precede positional arguments.

The value for `id` is opaque to Vault and can be any identifying value you want. You can use the `id` to revoke the secondary, and you can see it when you read the replication status on the primary. The command returns a wrapped response, except that the token is a JWT instead of UUID-formatted random bytes.
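Because the bootstrap token is a JWT, you can inspect its header locally to confirm what you received. The hypothetical `jwt_header` function below only base64url-decodes the first dot-separated segment; it does not verify the signature, unwrap the token, or contact Vault. It assumes a `base64` command that accepts `--decode` (GNU coreutils and macOS both do). For the token shown above, the header segment `eyJhbGciOiJFUzUxMiIsInR5cCI6IkpXVCJ9` decodes to `{"alg":"ES512","typ":"JWT"}`.

```shell
# Hypothetical helper (not part of Vault): print the decoded header of a
# JWT-formatted token. No signature verification is performed.
jwt_header() {
  local seg="${1%%.*}"                          # first dot-separated segment
  seg=$(printf '%s' "$seg" | tr '_-' '/+')      # base64url -> standard base64
  # Restore the "=" padding that base64url omits.
  while [ $(( ${#seg} % 4 )) -ne 0 ]; do
    seg="${seg}="
  done
  printf '%s' "$seg" | base64 --decode
}
```

Usage: `jwt_header "$SEC_BOOTSTRAP"`. Treat the bootstrap token as a secret even while inspecting it; anyone holding it can activate a secondary until it expires.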

Configure the secondary cluster

Log into the secondary cluster.

```shell-session
$ echo $CLUSTER_SEC_ROOT_TOKEN | xargs vault login -address=$SEC_ADDR
Key                  Value
---                  -----
token                hvs.s4Yb7J80ZtglKo9WlYNR57mu
token_accessor       HIaozOx30nSKO6hSlspa8CIR
token_duration       ∞
token_renewable      false
token_policies       ["root"]
identity_policies    []
policies             ["root"]
```

Activate a secondary using the token generated on the primary cluster.

```shell-session
$ vault write -address=$SEC_ADDR sys/replication/performance/secondary/enable token=$SEC_BOOTSTRAP
WARNING! The following warnings were returned from Vault:

  * Vault has successfully found secondary information; it may take a while to perform setup tasks. Vault will be unavailable until these tasks and initial sync complete.
```

The secondary connects to the primary using the address embedded in the bootstrap token, which is the primary's redirect address. If the primary has no redirect address (for instance, if it is not in an HA cluster), set the `primary_api_addr` parameter to the primary's API address when you enable the secondary.

Once the secondary is activated and has bootstrapped, it is ready for service and maintains state with the primary. It is safe to seal or shut down the primary, the secondary, or both; when both are available again, they synchronize back into a replicated state.
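Because the secondary is unavailable until setup and the initial sync complete, scripts that continue immediately can fail. A minimal polling sketch follows; `wait_for_replication` is a hypothetical helper, and it assumes that `vault read -format=json ... sys/replication/status` prints a `"connection_state": "ready"` line once the secondary is connected, as in the status output shown later in this tutorial.

```shell
# Hypothetical helper (not part of Vault): poll a performance secondary until
# sys/replication/status reports connection_state "ready", or give up.
# Arguments: ADDRESS [ATTEMPTS (default 30)] [DELAY_SECONDS (default 2)]
wait_for_replication() {
  local addr="$1" attempts="${2:-30}" delay="${3:-2}" i=1
  while [ "$i" -le "$attempts" ]; do
    # Errors (for example, while the secondary is still unavailable) are
    # ignored and simply count as "not ready yet".
    if vault read -format=json -address="$addr" sys/replication/status 2>/dev/null \
        | grep -q '"connection_state": *"ready"'; then
      echo "performance replication is ready on $addr"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "replication on $addr not ready after $attempts attempts" >&2
  return 1
}
```

For example, run `wait_for_replication "$SEC_ADDR"` before authenticating to the secondary. Matching on text keeps the sketch free of a `jq` dependency; with `jq` available, reading `.data.performance.connection_state` is more robust.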

Authenticate to the secondary cluster using the `userpass` auth method you previously enabled on the primary cluster. The secondary's original root token no longer works, because activating the secondary wiped its storage. Enter `vaultMagic` when prompted for the password.

```shell-session
$ vault login -address=$SEC_ADDR -method=userpass username=superuser
Password (will be hidden):
Success! You are now authenticated. The token information displayed below
is already stored in the token helper. You do NOT need to run "vault login"
again. Future Vault requests will automatically use this token.

Key                    Value
---                    -----
token                  hvs.CAESIKZsAc7fnuz_VP8wBHj7U2TdtDZpqRfSUGe-SZTcFGC-GiEKHGh2cy5YWEJSZXIzaEg3a3F5RDJMdWtQTzhMbDAQvQI
token_accessor         xTlRFFdIdtI2XwPVLEOtTv55
token_duration         768h
token_renewable        true
token_policies         ["default" "superpolicy"]
identity_policies      []
policies               ["default" "superpolicy"]
token_meta_username    superuser
```

On a production system, after a secondary is activated, use the replicated auth methods to obtain tokens with appropriate policies, since policies and auth method configuration are replicated.

Verify the replication status.

```shell-session
$ vault read -format=json -address=$SEC_ADDR sys/replication/status | jq
{
  "request_id": "b62afabd-550f-5396-0ea3-e6c68c7799a8",
  "lease_id": "",
  "lease_duration": 0,
  "renewable": false,
  "data": {
    "dr": {
      "mode": "disabled"
    },
    "performance": {
      "cluster_id": "8530b742-22da-00c7-52da-3c6cd6cc868c",
      "connection_state": "ready",
      "corrupted_merkle_tree": false,
      "known_primary_cluster_addrs": [
        "https://cluster-pri:8201"
      ],
      "last_corruption_check_epoch": "-62135596800",
      "last_reindex_epoch": "1719956509",
      "last_remote_wal": 7960,
      "last_start": "2024-07-02T21:41:49Z",
      "merkle_root": "82d3abb152158f116de193cdbaac2c2dc3624be5",
      "mode": "secondary",
      "primaries": [
        {
          "api_address": "http://cluster-pri:8200",
...snip...
```

The replication status shows that this is a `secondary` cluster and lists the address of its `primaries`.

List the enabled secrets engines.

```shell-session
$ vault secrets list -address=$SEC_ADDR
Path             Type         Accessor              Description
----             ----         --------              -----------
cubbyhole/       cubbyhole    cubbyhole_da096a10    per-token private secret storage
identity/        identity     identity_962587a4     identity store
replicatedkv/    kv           kv_ec655082           n/a
sys/             system       system_e413f153       system endpoints used for control, policy and debugging
```

The `replicatedkv` secrets engine, which you enabled on the primary cluster, is replicated to the secondary cluster.

The `localkv` secrets engine is not available on the secondary because you marked it as a local-only mount using the `-local` flag.
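To check specific mounts in a script rather than by reading the table, you can search the JSON form of the same listing. This is a minimal sketch: `mount_present` is a hypothetical helper, and it assumes that `vault secrets list -format=json` returns an object whose keys are mount paths with a trailing slash (for example `"replicatedkv/"`) and that your current token may list mounts.

```shell
# Hypothetical helper (not part of Vault): succeed if MOUNT appears in
# `vault secrets list` on the cluster at ADDRESS.
# Arguments: ADDRESS MOUNT (with or without a trailing slash)
mount_present() {
  local addr="$1" mount="${2%/}/"
  # With -format=json, mount paths appear as quoted keys such as "replicatedkv/".
  vault secrets list -address="$addr" -format=json | grep -qF "\"$mount\""
}
```

For example, `mount_present "$SEC_ADDR" replicatedkv` should succeed at this point, while `mount_present "$SEC_ADDR" localkv` should fail because `localkv` is local to the primary.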

Manage replicated mounts

Regional data sovereignty requirements often need to be taken into consideration when using replication. Consider a scenario where secrets stored on the primary cluster need to remain in the region where the cluster operates.

Path filters allow you to control which mounts are replicated across clusters and physical regions. With a path filter you can select which mounts will be replicated as part of a performance replication relationship.

By default, all non-local mounts and associated data are moved as part of replication. The paths filter feature enables users to restrict which mounts are replicated, allowing them to further control the movement of secrets across their infrastructure.
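A paths filter is set per secondary `id` with a mode (`allow` or `deny`) and a list of mount paths. The following sketch wraps the `vault write` call used later in this section and validates its inputs first. `set_paths_filter` is a hypothetical helper, and joining several mounts into one comma-separated `paths` value is an assumption about how the endpoint parses the list; check the paths-filter API documentation before relying on it with more than one mount.

```shell
# Hypothetical helper (not part of Vault): set the performance-replication
# paths filter for one secondary.
# Arguments: ADDRESS SECONDARY_ID allow|deny MOUNT [MOUNT...]
set_paths_filter() {
  if [ "$#" -lt 4 ]; then
    echo "usage: set_paths_filter ADDRESS ID allow|deny MOUNT..." >&2
    return 1
  fi
  local addr="$1" id="$2" mode="$3" paths
  shift 3
  case "$mode" in
    allow|deny) ;;
    *) echo "mode must be allow or deny, got '$mode'" >&2; return 1 ;;
  esac
  # Join the mount paths with commas, e.g. "replicatedkv,otherkv" (assumed format).
  paths=$(IFS=,; printf '%s' "$*")
  vault write -address="$addr" \
    "sys/replication/performance/primary/paths-filter/$id" \
    mode="$mode" paths="$paths"
}
```

With a single mount, `set_paths_filter "$PRI_ADDR" secondary deny replicatedkv` issues the same request as the manual command in this section. An `allow` filter inverts the logic: only the listed mounts are replicated to that secondary.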

Path filters are also applied to mounts that have already been replicated. In this tutorial the `replicatedkv` secrets engine was replicated from the primary cluster to the secondary.

You have just been given a new requirement that states the `replicatedkv` secrets engine must now stay only on the primary cluster. You can add a path filter that removes the replicated mount from the secondary.

Log back in to the primary cluster.

```shell-session
$ echo $CLUSTER_PRI_ROOT_TOKEN | xargs vault login -address=$PRI_ADDR
Key                  Value
---                  -----
token                hvs.aQrUNCbqnwq3t4tw5K6UcrWF
token_accessor       VzzI18zBxpMBWUo1e3yruLFe
token_duration       ∞
token_renewable      false
token_policies       ["root"]
identity_policies    []
policies             ["root"]
```

Add a path filter that prevents the `replicatedkv` secrets engine from being replicated.

```shell-session
$ vault write -address=$PRI_ADDR \
    sys/replication/performance/primary/paths-filter/secondary \
    mode="deny" paths="replicatedkv"
```

Example output:

```plaintext
Success! Data written to: sys/replication/performance/primary/paths-filter/secondary
```

The ID `secondary` at the end of the path must match the `id` you used when creating the secondary bootstrap token on the primary cluster.

Log back in to the secondary cluster. Enter `vaultMagic` when prompted for the password.

```shell-session
$ vault login -address=$SEC_ADDR -method=userpass username=superuser
Password (will be hidden):
Success! You are now authenticated. The token information displayed below
is already stored in the token helper. You do NOT need to run "vault login"
again. Future Vault requests will automatically use this token.

Key                    Value
---                    -----
token                  hvs.CAESIKZsAc7fnuz_VP8wBHj7U2TdtDZpqRfSUGe-SZTcFGC-GiEKHGh2cy5YWEJSZXIzaEg3a3F5RDJMdWtQTzhMbDAQvQI
token_accessor         xTlRFFdIdtI2XwPVLEOtTv55
token_duration         768h
token_renewable        true
token_policies         ["default" "superpolicy"]
identity_policies      []
policies               ["default" "superpolicy"]
token_meta_username    superuser
```

List the secrets engines.

```shell-session
$ vault secrets list -address=$SEC_ADDR
Path          Type         Accessor              Description
----          ----         --------              -----------
cubbyhole/    cubbyhole    cubbyhole_da096a10    per-token private secret storage
identity/     identity     identity_962587a4     identity store
sys/          system       system_e413f153       system endpoints used for control, policy and debugging
```

The `replicatedkv` secrets engine is no longer available on the secondary cluster.

Considerations for performance replication

Vault's performance replication model is intended to allow horizontally scaling Vault's functions rather than to act in a strict Disaster Recovery (DR) capacity.

As a result, Vault performance replication acts on static items within Vault, meaning information that is not part of Vault's lease-tracking system. In a practical sense, this means that all Vault information is replicated from the primary to secondaries except for tokens and secret leases.

Because token information must be checked and possibly rewritten with each use (e.g. to decrement its use count), replicated tokens would require every call to be forwarded to the primary, decreasing rather than increasing total Vault throughput.

Secret leases are tracked independently for two reasons: one, because every such lease is tied to a token and tokens are local to each cluster; and two, because tracking large numbers of leases is memory-intensive and tracking all leases in a replicated fashion could dramatically increase the memory requirements across all Vault nodes.

We believe that this performance replication model provides significant utility for horizontally scaling Vault's functionality. However, it does mean that certain principles must be kept in mind.

Always use the local cluster

First and foremost, when designing systems to take advantage of replicated Vault, you must ensure that they always use the same Vault cluster for all operations, as only that cluster will know about the client's Vault token.

Enabling a secondary wipes storage

Replication relies on having a shared keyring between primary and secondaries and also relies on having a shared understanding of the data store state. As a result, when replication is enabled, all of the secondary's existing storage will be wiped. This is irrevocable. Make a backup first if there is a remote chance you'll need some of this data at some future point.

Generally, activating as a secondary will be the first thing that is done upon setting up a new cluster for replication.
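If a cluster you are about to activate as a secondary already holds data, the backup advice above can be scripted. This sketch assumes the cluster uses integrated storage (as in this tutorial) and that your current token is allowed to run `vault operator raft snapshot save`; `snapshot_before_secondary` is a hypothetical helper name.

```shell
# Hypothetical helper (not part of Vault): save an integrated storage (raft)
# snapshot of a cluster before it is activated as a secondary, and fail if
# the snapshot file is missing or empty afterwards.
# Arguments: ADDRESS SNAPSHOT_FILE
snapshot_before_secondary() {
  local addr="$1" file="$2"
  vault operator raft snapshot save -address="$addr" "$file" || return 1
  if [ ! -s "$file" ]; then
    echo "snapshot $file is missing or empty" >&2
    return 1
  fi
  echo "saved snapshot of $addr to $file"
}
```

For example, `snapshot_before_secondary "$SEC_ADDR" cluster-sec-before-replication.snap && vault write -address=$SEC_ADDR sys/replication/performance/secondary/enable token=$SEC_BOOTSTRAP` only activates the secondary if the snapshot succeeded. A saved snapshot can later be loaded with `vault operator raft snapshot restore`.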

Replicated vs. local backend mounts

All backend mounts (of all types) that can be enabled within Vault default to being mounted as a replicated mount. This means that replicated mounts cannot be enabled on a secondary, and mounts enabled on the primary will replicate to secondaries.

Mounts can also be marked local (via the `-local` flag on the Vault CLI or by setting the `local` parameter to `true` in the API). This can only be performed at mount time; if a mount is local but should have been replicated, or vice versa, you must disable the backend and mount a new instance at that path with the correct `local` setting. If you cannot start a new instance of a mount, you can use a path filter to deny replication to one or more secondaries.
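The API equivalent of the CLI's `-local` flag is the `local` parameter on the `sys/mounts/<path>` endpoint, sent when the mount is created. A minimal `curl` sketch follows. `enable_kv_mount` is a hypothetical helper; it requests KV version 2 through `type: "kv"` plus `options.version`, and it assumes the token you pass may enable mounts.

```shell
# Hypothetical helper (not part of Vault): enable a KV version 2 mount through
# the HTTP API, optionally marked local (the API form of the CLI -local flag).
# Arguments: ADDRESS TOKEN MOUNT_PATH [LOCAL: true|false (default false)]
enable_kv_mount() {
  local addr="$1" token="$2" path="$3" local_flag="${4:-false}"
  case "$local_flag" in
    true|false) ;;
    *) echo "LOCAL must be true or false, got '$local_flag'" >&2; return 1 ;;
  esac
  curl --silent --show-error --fail \
    --header "X-Vault-Token: $token" \
    --request POST \
    --data "{\"type\":\"kv\",\"local\":$local_flag,\"options\":{\"version\":\"2\"}}" \
    "$addr/v1/sys/mounts/$path"
}
```

For example, `enable_kv_mount "$PRI_ADDR" "$CLUSTER_PRI_ROOT_TOKEN" localkv true` corresponds to the `vault secrets enable -path=localkv -local kv-v2` command used earlier. Because `local` is only honored at mount time, changing it later still means disabling and re-creating the mount.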

Local mounts do not propagate data from the primary to secondaries, and local mounts on secondaries do not have their data removed during the syncing process. The exception is during initial bootstrapping of a secondary from a state where replication is disabled; all data, including local mounts, is deleted at this time (as the encryption keys will have changed so data in local mounts would be unable to be read).

Audit devices

In normal Vault usage, if Vault has at least one audit device configured and is unable to successfully log to at least one device, it will block further requests.

Replicated audit mounts must be able to successfully log on all replicated clusters. For example, if using the file audit device, the configured path must be able to be written to by all secondaries. It may be useful to use at least one local audit mount on each cluster to prevent such a scenario.
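A local audit device is enabled with the `-local` flag of `vault audit enable`, so each cluster gets its own device that is not replicated. The sketch below is a hypothetical helper; the mount path `local-file` and the default log path `/vault/logs/audit.log` are illustrative assumptions, and the log path must be writable by Vault inside each container.

```shell
# Hypothetical helper (not part of Vault): enable a local-only file audit
# device on one cluster, so that cluster can keep logging even if a
# replicated audit device cannot write there.
# Arguments: ADDRESS [LOG_FILE (default /vault/logs/audit.log, assumed path)]
enable_local_audit() {
  local addr="$1" log_file="${2:-/vault/logs/audit.log}"
  vault audit enable -address="$addr" -local -path=local-file \
    file file_path="$log_file"
}
```

Run it once per cluster, for example `enable_local_audit "$PRI_ADDR"` and `enable_local_audit "$SEC_ADDR"`, using a token with permission to manage audit devices on each cluster.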

Never have two primaries

The replication model is not designed for active-active usage and enabling two primaries should never be done, as it can lead to data loss if they or their secondaries are ever reconnected.

Disaster recovery

Local backend mounts are not replicated and their use will require existing DR mechanisms if DR is necessary in your implementation.

If you need true DR, review the Disaster recovery replication tutorial.

Clean up

Stop the Vault Enterprise containers.

```shell-session
$ docker stop vault-enterprise-cluster-sec vault-enterprise-cluster-pri
vault-enterprise-cluster-sec
vault-enterprise-cluster-pri
```

Delete the Docker network created for this tutorial.

```shell-session
$ docker network rm learn-vault
learn-vault
```

Delete the temporary directory created for this tutorial.

```shell-session
$ cd ~ && rm -rf $HC_LEARN_LAB
```

Unset the environment variables created for this tutorial (or close the terminal).

```shell-session
$ unset VAULT_LICENSE CLUSTER_PRI_UNSEAL_KEY CLUSTER_SEC_UNSEAL_KEY PRI_ADDR SEC_ADDR CLUSTER_SEC_ROOT_TOKEN CLUSTER_PRI_ROOT_TOKEN SEC_BOOTSTRAP HC_LEARN_LAB
```