Cloud deployment (AWS, Azure, GCP virtual machines)

This section provides a detailed guide for manually installing a Nomad cluster on AWS, Azure, and GCP instances. This approach suits organizations that require fine-grained control over their Nomad deployment or have specific compliance requirements that necessitate manual installation. Before proceeding, ensure you have read and understood the Nomad architecture and Nomad deployment requirements sections.

Architectural summary

As referenced in the Nomad architecture section, each Nomad node in a cluster resides on a separate VM; both shared and dedicated tenancy are acceptable. A single Nomad cluster deploys within a single region, with nodes distributed across all available availability zones (AZs). This design uses Nomad's redundancy zone feature and deploys a minimum of 3 voting server nodes and 3 non-voting server nodes across 3 AZs.

Server naming and IP addressing

For illustrative purposes, we use the following naming convention and IP addressing scheme for our Nomad servers:

| DNS name | IP address | Node type | Location |
|---|---|---|---|
| nomad-1.domain | 10.0.0.1 | Voting server | us-east-1a |
| nomad-2.domain | 10.0.0.2 | Voting server | us-east-1b |
| nomad-3.domain | 10.0.0.3 | Voting server | us-east-1c |
| nomad-4.domain | 10.0.0.4 | Non-voting server | us-east-1a |
| nomad-5.domain | 10.0.0.5 | Non-voting server | us-east-1b |
| nomad-6.domain | 10.0.0.6 | Non-voting server | us-east-1c |
| nomad.domain | 10.0.0.100 | NLB | (all zones) |

Note: These names and IP addresses are examples. Adjust them according to your specific environment and naming conventions.

Certificates

Create a standard X.509 certificate for installation on the Nomad servers. Follow your organization's process for creating a new certificate that matches the DNS record you intend to use for accessing Nomad. This guide follows the conventions, and uses the self-signed certificates, from the Nomad architecture section and the enable TLS tutorial.

You need three files:

- CA public certificate (nomad-agent-ca.pem)

- Server node certificate's private key (global-server-nomad-key.pem)

- Server node public certificate (global-server-nomad.pem)

You distribute these certificates to the server nodes in a later section, where they secure communications between Nomad clients, servers, and the API.

You also need a fourth certificate and key: global-cli-nomad.pem and global-cli-nomad-key.pem. Use these later in this guide to interact with the cluster. The global- prefix on the certificate and key assumes they are used in multi-region environments. You can generate certificates on a per-region basis; however, this guide uses a single certificate and key across all clusters globally.
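As a rough sketch of how the files named above could be produced, the following wraps Nomad's built-in TLS helper. It assumes a Nomad release that ships the `nomad tls` subcommands; the function name `generate_nomad_certs` and the output directory are hypothetical. Self-signed certificates created this way suit evaluation only, and your organization's certificate process takes precedence.

```shell
#!/usr/bin/env bash
# Sketch only: generate a CA plus server and CLI certificates with the
# built-in `nomad tls` helper. generate_nomad_certs is a hypothetical name.
generate_nomad_certs() {
  out_dir="${1:-./nomad-tls}"
  mkdir -p "$out_dir" || return 1
  (
    cd "$out_dir" || exit 1
    # Expected to write nomad-agent-ca.pem and nomad-agent-ca-key.pem
    nomad tls ca create || exit 1
    # Expected to write global-server-nomad.pem and global-server-nomad-key.pem
    nomad tls cert create -server || exit 1
    # Expected to write global-cli-nomad.pem and global-cli-nomad-key.pem
    nomad tls cert create -cli || exit 1
  )
}

# Example:
#   generate_nomad_certs ./nomad-tls
```

If you reach the API through a load balancer DNS name, the server certificate probably needs that name as a subject alternative name; check the helper's options (for example, an additional DNS name flag) before relying on this sketch.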

While Nomad's TLS configuration is production ready, key management and rotation are complex subjects not covered by this guide. Vault is the suggested solution for key generation and management.

Firewall rules

Create firewall rules that allow these ports bidirectionally:

| Port | Name | Purpose | Protocol |
|---|---|---|---|
| 4646 | HTTP API | Used by clients and servers to serve the HTTP API | TCP only |
| 4647 | RPC | Used for internal RPC communication between client agents and servers, and for inter-server traffic | TCP only |
| 4648 | Serf WAN | Used by servers to gossip both over the LAN and WAN to other servers. Not required for Nomad clients to reach this address | TCP and UDP |
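On AWS, the table above could translate into security group rules along these lines. This is a sketch that only prints the AWS CLI commands instead of running them. The helper name `print_nomad_sg_rules`, the example group ID, and the choice of a self-referencing rule (all Nomad nodes sharing one security group) are assumptions; traffic from the load balancer would need its own rule.

```shell
#!/usr/bin/env bash
# Sketch only: print (do not execute) one ingress rule per port/protocol pair.
# print_nomad_sg_rules and the group ID below are hypothetical.
print_nomad_sg_rules() {
  sg_id="$1"   # security group assumed to be shared by all Nomad nodes
  # Port/protocol pairs taken from the firewall table
  for rule in 4646/tcp 4647/tcp 4648/tcp 4648/udp; do
    port="${rule%/*}"
    proto="${rule#*/}"
    echo "aws ec2 authorize-security-group-ingress --group-id $sg_id --protocol $proto --port $port --source-group $sg_id"
  done
}

print_nomad_sg_rules "sg-0123456789abcdef0"
```

Review the printed commands, then run them (or the equivalent in your infrastructure-as-code tooling) once they match your network design.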

Load balancing the API and UI

- Ensure your load balancer is in front of all Nomad server nodes, across all availability zones. Ensure you have deployed all Nomad nodes in the correct subnets.

- Use TCP ports 4646 (HTTP API) and 4647 (RPC). The target groups associated with each of these listeners must contain all Nomad server nodes.

- Use the /v1/agent/health endpoint for health checking.
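To see what the load balancer health check would observe, you could probe the same endpoint by hand. The sketch below assumes `curl` is installed and that the agent's certificate is valid for the address you query; the helper names `nomad_health_status` and `is_healthy` are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: probe the agent health endpoint the way a load balancer would.
# nomad_health_status and is_healthy are hypothetical helper names.
nomad_health_status() {
  addr="$1"      # for example https://127.0.0.1:4646
  ca_file="$2"   # path to nomad-agent-ca.pem
  # Print only the HTTP status code returned by the endpoint
  curl --silent --output /dev/null --write-out '%{http_code}' \
    --cacert "$ca_file" "$addr/v1/agent/health"
}

# Succeeds only when the endpoint answers with HTTP 200
is_healthy() {
  [ "$(nomad_health_status "$1" "$2")" = "200" ]
}

# Example (on a server node):
#   is_healthy https://127.0.0.1:4646 /etc/nomad.d/tls/nomad-agent-ca.pem && echo healthy
```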

Download and install the Nomad CLI

To interact with your Nomad cluster, you need to install the Nomad binary. Follow the steps for your OS on the Install Nomad documentation page.

Obtain the Nomad Enterprise license file

Obtain the Nomad Enterprise license file from your HashiCorp account team. This file contains a license key unique to your environment. The file is named license.hclic or similar.

Keep this file available as you need it later in the installation process.

Platform-specific guidance

Select the tab below for further guidance and corresponding choices for your cloud service provider.

Deployment considerations

The official Nomad Enterprise HVD Module for deployment on AWS is available in the Terraform Registry.

For Nomad servers, we recommend using m5.xlarge or m5.2xlarge instance types to provide the necessary vCPU and RAM resources for Nomad. Instance types from other instance families are also usable, provided that they meet or exceed the resource recommendations.

When selecting instance types for Nomad clients, consider the resource requirements of your workloads. AWS offers a variety of instance families optimized for different use cases:

| Instance family | Use case | Workload examples |
|---|---|---|
| General purpose (M family) | Balanced compute, memory, and networking resources | Web servers, small-to-medium databases, dev/test environments |
| Compute-optimized (C family) | High-performance computing, batch processing, scientific modeling | CPU-intensive applications, video encoding, high-performance web servers |
| Memory-optimized (R family) | High-performance databases, distributed memory caches, real-time big data analytics | SAP HANA, Apache Spark, Presto |
| Storage-optimized (I, D families) | High I/O applications, large databases, data warehousing | NoSQL databases, data processing applications |
| GPU instances (P, G families) | AI, high-performance computing, rendering | AI training and inference, scientific simulations |
| NUMA-aware instances (X1, X1e families) | High-performance databases, in-memory databases, big data processing engines | SAP HANA, Apache Spark, Presto |

While you can use AWS Auto Scaling Groups to manually manage the number of Nomad clients, it is often more efficient to use the Nomad Autoscaler. The Nomad Autoscaler integrates directly with Nomad and provides more granular control over scaling decisions.

The Nomad Autoscaler can dynamically adjust the number of client instances based on various metrics, including job queue depth, CPU utilization, memory usage, and more. It can even scale different node pools independently, allowing you to maintain the right mix of instance types for your workloads.

As referenced in the Nomad architecture section, each Nomad node in a cluster resides on a separate EC2 instance; both shared and dedicated tenancy are acceptable.

Nomad can be IOPS intensive as it writes chunks of data from memory to storage. Initially, we recommend EBS volumes with Provisioned IOPS SSD (io1 or io2) as they meet the disk performance needs for most use cases. These provide a balance of performance and manageability suitable for typical Nomad deployments.

As your cluster scales or experiences large batch job scheduling events, you may encounter storage performance limitations. In such scenarios, consider starting or transitioning to instances with local NVMe storage. While this can offer significant performance benefits, be aware that it requires additional OS-level configuration during instance setup. Evaluate the trade-off between enhanced performance and increased setup complexity, based on your specific workload demands and operational capabilities. Monitor your IOPS metrics using your preferred monitoring solution to identify when you are approaching storage performance limits. For detailed guidance on monitoring Nomad server storage metrics, refer to the Observability section of the Nomad Operating guide.

When deploying in AWS, use an S3 bucket for storage of Automated Snapshots. Deploy your S3 bucket in a different region from the compute instances, to allow the possibility of restoring the cluster in case of regional failure. Use S3 Versioning and MFA Delete to protect the snapshots from accidental or malicious deletion.

We recommend creating an IAM role used only for auto-joining nodes. The only required IAM permission is ec2:DescribeInstances. If you omit the region, cloud auto-join discovers it via the EC2 metadata endpoint. Visit Auto join AWS Provider for more information.
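A minimal policy document for such a role might look like the sketch below. The file name and location are hypothetical, and the commented `aws iam put-role-policy` call is only one way to attach the policy; adapt it to however you manage IAM.

```shell
#!/usr/bin/env bash
# Sketch only: write a least-privilege policy document for cloud auto-join.
# The file name and location are hypothetical choices.
policy_file="${TMPDIR:-/tmp}/nomad-auto-join-policy.json"

cat > "$policy_file" <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "ec2:DescribeInstances",
      "Resource": "*"
    }
  ]
}
EOF

echo "Wrote $policy_file"
# Attach it to the role with your usual tooling, for example:
#   aws iam put-role-policy --role-name <your-role> \
#     --policy-name nomad-auto-join --policy-document "file://$policy_file"
```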

Deployment process

Create and connect to your instances

- Launch two instances in each of the three AZs, for a total of six instances, using the machine image of your choice. Ubuntu is the OS used in the following examples. Adjust the commands to use dnf on RHEL if needed.

- Ensure the IAM Instance Profile or Managed Service Identity is using the cloud auto-join role permissions you previously created.

- Ensure the instances are tagged with tag_key=cloud-auto-join and tag_value=true.

Update the OS

Connect to each instance, then update its packages:

ssh -i /path/to/your-private-key ubuntu@your-vm-ip

sudo apt update && sudo apt upgrade -y

Download and install Nomad

Refer to the HashiCorp Security page regarding secure download and verification of the Nomad single binary.

PRODUCT='nomad'

VERSION='1.9.1+ent'

OS_ARCH="$(uname -s | tr '[:upper:]' '[:lower:]')_$(uname -m | sed -e 's/x86_64/amd64/' -e 's/aarch64/arm64/')" # e.g. linux_amd64; release archives use amd64/arm64 rather than x86_64/aarch64

hashicorp_key_id='34365D9472D7468F'

hashicorp_key_fingerprint='C874011F0AB405110D02105534365D9472D7468F'

curl -#Ok --remote-name https://www.hashicorp.com/.well-known/pgp-key.txt

gpg --import pgp-key.txt

gpg --sign-key ${hashicorp_key_id}

gpg --fingerprint --list-signatures "HashiCorp Security" | tr -d ' ' | grep -q "${hashicorp_key_fingerprint}"

curl -#Ok --remote-name https://releases.hashicorp.com/"${PRODUCT}"/"${VERSION}"/"${PRODUCT}"_"${VERSION}"_"${OS_ARCH}".zip

curl -#Ok --remote-name https://releases.hashicorp.com/"${PRODUCT}"/"${VERSION}"/"${PRODUCT}"_"${VERSION}"_SHA256SUMS

curl -#Ok --remote-name https://releases.hashicorp.com/"${PRODUCT}"/"${VERSION}"/"${PRODUCT}"_"${VERSION}"_SHA256SUMS.sig

gpg --verify "${PRODUCT}"_"${VERSION}"_SHA256SUMS.sig "${PRODUCT}"_"${VERSION}"_SHA256SUMS

[[ "$(sha256sum ${PRODUCT}_${VERSION}_${OS_ARCH}.zip | awk '{print $1}')" != "$(grep ${PRODUCT}_${VERSION}_${OS_ARCH}.zip "${PRODUCT}"_"${VERSION}"_SHA256SUMS | awk '{print $1}')" ]] && echo "ALERT: VERIFICATION FAILED" || echo "DOWNLOAD VERIFICATION SUCCEEDED - PLEASE PROCEED TO UNZIP AND MOVE INTO PLACE" # stop if verification fails

unzip "${PRODUCT}"_"${VERSION}"_"${OS_ARCH}".zip

sudo mv "${PRODUCT}" /usr/local/bin/

sudo chmod 0755 /usr/local/bin/nomad

Alternatively, follow the instructions on the documentation page to install Nomad from the official HashiCorp package repository for your OS.

Create necessary directories

sudo mkdir -p /opt/nomad/data

sudo mkdir -p /etc/nomad.d

sudo mkdir -p /etc/nomad.d/tls

Copy TLS certificates

Copy your TLS certificates to /etc/nomad.d/tls/:

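# /etc/nomad.d/tls is owned by root, so copy to the home directory first, then move the files into place on the instance.
scp nomad-agent-ca.pem global-server-nomad-key.pem global-server-nomad.pem ubuntu@your-vm-ip:~/

ssh ubuntu@your-vm-ip 'sudo mv ~/nomad-agent-ca.pem ~/global-server-nomad-key.pem ~/global-server-nomad.pem /etc/nomad.d/tls/'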

Copy license file

echo "02MV4UU43BK5..." | sudo tee /etc/nomad.d/license.hclic > /dev/null

Create gossip encryption key

nomad operator gossip keyring generate

Save the output of this key to use in the configuration file.
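Before pasting the key into the configuration, a quick sanity check can catch truncated copies. The sketch below assumes the generated key is base64-encoded and, to our understanding, decodes to 32 bytes; `gossip_key_bytes` is a hypothetical helper, and the demo key is generated locally only so the example runs without Nomad.

```shell
#!/usr/bin/env bash
# Sketch only: sanity-check a gossip key before pasting it into nomad.hcl.
# gossip_key_bytes is a hypothetical helper; it decodes the base64 key
# and prints the number of raw bytes.
gossip_key_bytes() {
  printf '%s' "$1" | base64 -d 2>/dev/null | wc -c | tr -d ' '
}

# Stand-in key for demonstration; on a real host use the output of
# `nomad operator gossip keyring generate` instead.
demo_key="$(head -c 32 /dev/urandom | base64)"
echo "decoded length: $(gossip_key_bytes "$demo_key") bytes"
```

Use the same key on every server; a length other than the one you expect usually means the value was cut off when copied.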

Create Nomad configuration file

Create /etc/nomad.d/nomad.hcl with the following content or supply your own. The example below includes:

- Cloud auto-join, which joins servers to the cluster based on tags instead of hard-coded IPs or hostnames.

- Autopilot, which handles automated upgrades.

- Redundancy zones: Nomad uses these values to partition the servers by redundancy zone, and keeps one voting server per zone. Extra servers in each zone stay as non-voters on standby and are promoted automatically if the active voter in that availability zone goes offline. We recommend matching the name of the AWS availability zone for simplicity. Change your config based on which AZ the instance resides in.

- TLS, which enables TLS between nodes and for the API. At scale, we recommend HashiCorp Vault; when starting out, it is acceptable to use signed certificates copied to the instance.

data_dir = "/opt/nomad/data"

bind_addr = "0.0.0.0" # CHANGE ME to listen only on the required IPs

server {

enabled = true

bootstrap_expect = 3

server_join {

retry_join = ["provider=aws tag_key=cloud-auto-join tag_value=true"]

}

redundancy_zone = "us-east-1b" # CHANGE ME

license_path = "/etc/nomad.d/license.hclic"

encrypt = "YOUR-GOSSIP-KEY" # Paste your key from the above "Create Gossip Encryption Key" step

}

acl {

enabled = true

}

tls {

http = true

rpc = true

ca_file = "/etc/nomad.d/tls/nomad-agent-ca.pem"

cert_file = "/etc/nomad.d/tls/global-server-nomad.pem"

key_file = "/etc/nomad.d/tls/global-server-nomad-key.pem"

}

autopilot {

cleanup_dead_servers = true

last_contact_threshold = "200ms"

max_trailing_logs = 250

server_stabilization_time = "10s"

enable_redundancy_zones = true

disable_upgrade_migration = false

enable_custom_upgrades = false

}
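Because redundancy_zone differs per instance, you may prefer to fill it in from instance metadata rather than editing each file by hand. The sketch below assumes an EC2 instance with the metadata service (IMDSv2) reachable and a nomad.hcl containing a single redundancy_zone line; both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: set redundancy_zone in nomad.hcl to the instance's AZ.
# current_az and set_redundancy_zone are hypothetical helper names.

# Query the EC2 instance metadata service (IMDSv2) for the AZ name.
current_az() {
  token="$(curl --silent --request PUT \
    --header 'X-aws-ec2-metadata-token-ttl-seconds: 60' \
    http://169.254.169.254/latest/api/token)" || return 1
  curl --silent --header "X-aws-ec2-metadata-token: $token" \
    http://169.254.169.254/latest/meta-data/placement/availability-zone
}

# Rewrite the redundancy_zone line in place, keeping a .bak copy.
# Any trailing comment on that line is dropped.
set_redundancy_zone() {
  config_file="$1"
  az="$2"
  sed -i.bak -E "s|^([[:space:]]*redundancy_zone[[:space:]]*=[[:space:]]*)\"[^\"]*\".*|\1\"$az\"|" "$config_file"
}

# Example (run as root on the instance):
#   set_redundancy_zone /etc/nomad.d/nomad.hcl "$(current_az)"
```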

If you are using a non-default scheduler configuration, set these values before starting Nomad for the first time, or set them afterwards via the API.

Starting Nomad

Set up Nomad service

sudo nano /etc/systemd/system/nomad.service

[Unit]

Description=Nomad

Documentation=https://www.nomadproject.io/docs/

Wants=network-online.target

After=network-online.target

[Service]

# Nomad servers should be run as the nomad user.

# Nomad clients should be run as root

User=root

Group=root

ExecReload=/bin/kill -HUP $MAINPID

ExecStart=/usr/local/bin/nomad agent -config /etc/nomad.d

KillMode=process

KillSignal=SIGINT

LimitNOFILE=65536

LimitNPROC=infinity

Restart=on-failure

RestartSec=2

TasksMax=infinity

OOMScoreAdjust=-1000

[Install]

WantedBy=multi-user.target

Start the Nomad service

sudo systemctl enable nomad

sudo systemctl start nomad
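After starting the service on all servers, it can take a short while for a leader to be elected. One way to wait for that, sketched here, is to poll the status endpoint of the HTTP API. The helper name `wait_for_leader`, the retry count, and the interval are assumptions, and `curl` is assumed to be installed.

```shell
#!/usr/bin/env bash
# Sketch only: poll /v1/status/leader until the cluster reports a leader.
# wait_for_leader is a hypothetical helper name.
wait_for_leader() {
  addr="$1"             # for example https://127.0.0.1:4646
  ca_file="$2"          # path to nomad-agent-ca.pem
  attempts="${3:-30}"   # number of polls before giving up
  interval="${4:-2}"    # seconds between polls
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # --fail makes curl return non-zero on HTTP errors such as "no leader"
    leader="$(curl --silent --fail --cacert "$ca_file" "$addr/v1/status/leader")" || leader=""
    if [ -n "$leader" ] && [ "$leader" != '""' ]; then
      echo "leader: $leader"
      return 0
    fi
    i=$((i + 1))
    sleep "$interval"
  done
  echo "no leader after $attempts attempts" >&2
  return 1
}

# Example:
#   wait_for_leader https://127.0.0.1:4646 /etc/nomad.d/tls/nomad-agent-ca.pem
```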

Validate the cluster is bootstrapped and functioning

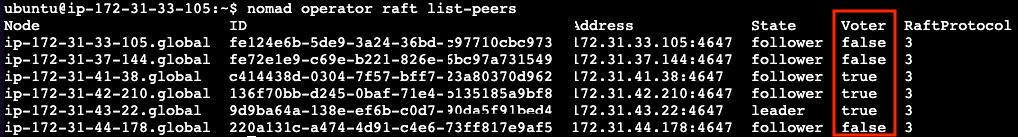

Ensure that three of the members report Voter=false.
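If you want to script this check, the sketch below counts non-voters in the tabular output of `nomad operator raft list-peers`. It assumes that output has a header row and a Voter column holding the literal words true or false; `count_non_voters` is a hypothetical helper. With ACLs enabled, the command likely needs a management token, so you may have to run it after bootstrapping the ACL system.

```shell
#!/usr/bin/env bash
# Sketch only: count rows whose Voter column reads "false".
# count_non_voters is a hypothetical helper that reads the peer table on stdin.
count_non_voters() {
  awk 'NR > 1 {
         # Skip the header row, then look for a field that is exactly "false"
         for (i = 1; i <= NF; i++) if ($i == "false") { n++; break }
       }
       END { print n + 0 }'
}

# Example:
#   nomad operator raft list-peers | count_non_voters
```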

If you are running into issues with your cluster bootstrapping or the Nomad agent starting, you can view journal logs to reveal any errors:

sudo systemctl status nomad

sudo journalctl -xe

Typically, issues are due to invalid configuration entries in nomad.hcl, certificate problems, or networking problems that prevent the nodes from communicating with each other.

Initialize ACL system

To initialize the Access Control List (ACL) system, you need to bootstrap it. This step is crucial for setting up access control policies in your Nomad cluster. The bootstrap process generates an initial management token that allows you to configure ACLs.

Log into one of your servers and run the following commands:

Set environment variables

export NOMAD_ADDR=https://127.0.0.1:4646

export NOMAD_CACERT=/etc/nomad.d/tls/nomad-agent-ca.pem

export NOMAD_CLIENT_CERT=/etc/nomad.d/tls/global-server-nomad.pem

export NOMAD_CLIENT_KEY=/etc/nomad.d/tls/global-server-nomad-key.pem

Bootstrap the ACL system. This only needs to be done on one server. The ACLs sync across server nodes.

nomad acl bootstrap

Save the accessor ID and secret ID, then follow the Identity and Access Management section of the Nomad Operating Guide for more information on ACLs and post-installation tasks.

Interact with your Nomad cluster

export NOMAD_ADDR=https://nomad-load-balancer

export NOMAD_TOKEN=YourSecretID

export NOMAD_CACERT=./nomad-agent-ca.pem

export NOMAD_CLIENT_CERT=./global-cli-nomad.pem

export NOMAD_CLIENT_KEY=./global-cli-nomad-key.pem

nomad -autocomplete-install && complete -C /usr/bin/nomad nomad

Nomad clients

This section provides guidance on deploying and managing Nomad clients. Nomad clients are responsible for running tasks and jobs scheduled by the Nomad servers. They register with the server cluster, receive work assignments, and execute tasks.

Preparation

Many of the preparation steps for Nomad clients are similar to those for servers. Refer to the Platform-specific guidance section for details on the following:

- Creating security groups

- Setting up IAM roles

- Preparing for launching instances

Nomad client instance types

Organize these instance families within Nomad using node pools, allowing for workload segregation and enabling job authors to target their workload to the most appropriate hardware resources.

Deployment process

Follow the steps in the preparation and installation process sections of the server deployment section, with a few key differences in configuration. Spread your clients across availability zones.

Client TLS configuration

Use a different certificate for Nomad clients than the server nodes. From the same directory as the CA certificate you generated during the server installation steps:

nomad tls cert create -client

This generates the following:

- global-client-nomad.pem (client certificate)

- global-client-nomad-key.pem (client private key)

Copy certificates to the client instances:

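# /etc/nomad.d/tls is owned by root, so copy to the home directory first, then move the files into place on the instance.
scp nomad-agent-ca.pem global-client-nomad.pem global-client-nomad-key.pem ubuntu@your-vm-ip:~/

ssh ubuntu@your-vm-ip 'sudo mv ~/nomad-agent-ca.pem ~/global-client-nomad.pem ~/global-client-nomad-key.pem /etc/nomad.d/tls/'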

Remember to keep your private keys secure and implement proper certificate management practices, including regular rotation and secure distribution of certificates to your Nomad clients.

In your nomad.hcl file:

data_dir = "/opt/nomad/data"

bind_addr = "0.0.0.0" #CHANGE ME to listen only on the required IPs

client {

enabled = true

server_join {

retry_join = ["provider=aws tag_key=cloud-auto-join tag_value=true"]

}

}

tls {

http = true

rpc = true

ca_file = "/etc/nomad.d/tls/nomad-agent-ca.pem"

cert_file = "/etc/nomad.d/tls/global-client-nomad.pem"

key_file = "/etc/nomad.d/tls/global-client-nomad-key.pem"

}

#...other client configurations...

Post-installation tasks

After installation, perform these tasks to ensure everything is working as expected:

Verify cluster health:

nomad server members #lists all servers

nomad node status #lists all clients

For day two activities such as observability and backups, consult the Nomad Operating Guide.