Detailed design

This section expands on the recommended architecture from the previous section and describes each component in more detail. Review it carefully to identify all technical and personnel requirements before moving on to implementation.

Servers

This section contains specific hardware capacity recommendations, network requirements, and additional infrastructure considerations.

Sizing

Every hosting environment is different, and every customer's Nomad usage profile is different. Treat these recommendations as a starting point that each customer's operations staff should observe and adjust to meet the unique needs of each deployment. For more information, refer to the Requirements section of the documentation.

Sizing recommendations are divided into two common cluster sizes: small and large. Small clusters are appropriate for most initial production deployments and for development and testing environments. Large clusters are production environments with a consistently high workload, such as a large number of deployments, a large number of system or batch jobs, or a combination of the two.

| Size | CPU | Memory | Disk capacity | Disk I/O | Disk throughput |
|---|---|---|---|---|---|
| Small | 2-4 cores | 8-16 GB RAM | 100+ GB | 3000+ IOPS | 75+ MB/s |
| Large | 8-16 cores | 32-64 GB RAM | 200+ GB | 10000+ IOPS | 250+ MB/s |

For a mapping of these requirements to specific instance types for each cloud provider, refer to the cloud-focused sections below.
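As a rough illustration, the lower bounds from the sizing table can be encoded as data and compared against a candidate machine. This is a minimal sketch, not an official sizing tool: the `ServerSpec` type and the `largest_tier_met` helper are hypothetical names introduced here, and the thresholds are simply the minimum values from the table.

```python
from dataclasses import astuple, dataclass
from typing import Optional


@dataclass(frozen=True)
class ServerSpec:
    """Hypothetical description of a candidate Nomad server machine."""
    cpu_cores: int
    memory_gb: int
    disk_gb: int
    disk_iops: int
    disk_mbps: int


# Lower bounds of each tier, copied from the sizing table.
MINIMUMS = {
    "small": ServerSpec(cpu_cores=2, memory_gb=8, disk_gb=100, disk_iops=3000, disk_mbps=75),
    "large": ServerSpec(cpu_cores=8, memory_gb=32, disk_gb=200, disk_iops=10000, disk_mbps=250),
}


def meets(spec: ServerSpec, minimum: ServerSpec) -> bool:
    """Return True when every resource is at or above the tier minimum."""
    return all(have >= need for have, need in zip(astuple(spec), astuple(minimum)))


def largest_tier_met(spec: ServerSpec) -> Optional[str]:
    """Return the largest tier whose minimums are all satisfied, or None."""
    for tier in ("large", "small"):
        if meets(spec, MINIMUMS[tier]):
            return tier
    return None
```

A machine that satisfies the large CPU and memory minimums but falls short on IOPS is reported as `small`, which reflects the point that storage performance matters as much as core count.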

Hardware considerations

In general, CPU and storage performance requirements depend on the customer's exact usage profile (e.g., number of running jobs and deployment rate). Memory requirements depend on the number of running jobs and should be sized accordingly.

Nomad servers should have a high-performance disk subsystem capable of 10000+ IOPS and 250+ MB/s of disk throughput. Nomad servers frequently flush cluster state data to disk, so slower storage systems will negatively impact performance.

HashiCorp strongly recommends configuring Nomad with audit logging enabled, as well as telemetry for diagnostic purposes. The configuration of these components is covered in the Nomad Operating Guide. The impact of the additional storage I/O from audit logging will vary depending on your particular pattern of requests.

Networking

Nomad servers require interconnection between availability zones (AZs), as well as load balancers configured with TLS passthrough to connect operators to clusters.

Server interconnectivity requires low latency (under 10 ms) to maintain the cluster quorum, as well as to efficiently route operators' write requests from standby nodes to the active node. These nodes are also fronted by Layer 4 load balancers, which permit end-to-end TLS communication.

Server network rules must allow communication on the following ports:

- API (4646/tcp) for operators to communicate with the cluster

- RPC (4647/tcp) for clients (compute nodes) to establish a long-lived connection to servers and receive job assignments

- Serf (4648/tcp and 4648/udp) between servers only to maintain the cluster quorum
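The port list above can be spot-checked from a peer machine with a simple TCP connection attempt. The following is a minimal sketch using only the Python standard library; the helper names are hypothetical, and a successful TCP connect says nothing about UDP reachability on 4648, which needs a separate check.

```python
import socket

# TCP ports from the list above. Serf also uses 4648/udp,
# which a TCP connect cannot verify.
NOMAD_TCP_PORTS = {"api": 4646, "rpc": 4647, "serf": 4648}


def tcp_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def check_nomad_ports(host: str, ports: dict = NOMAD_TCP_PORTS, timeout: float = 2.0) -> dict:
    """Return a mapping of port name to reachability for one host."""
    return {name: tcp_port_open(host, port, timeout) for name, port in ports.items()}
```

Running `check_nomad_ports` against each server from the networks that are supposed to reach it (operators, clients, other servers) gives a quick view of which rules are missing.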

Availability Zones

This Validated Design uses 3 availability zones, with 2 nodes per availability zone.
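The resilience of this layout follows from majority-quorum arithmetic: a cluster needs more than half of its voting servers available to elect a leader and commit writes. The sketch below shows that arithmetic only. How many of the six nodes act as voters depends on your configuration and is treated as an input here, not as something this design prescribes.

```python
def quorum_size(voters: int) -> int:
    """Smallest number of voting servers that forms a majority."""
    if voters < 1:
        raise ValueError("a cluster needs at least one voting server")
    return voters // 2 + 1


def failure_tolerance(voters: int) -> int:
    """Number of voting servers that can be lost while keeping a majority."""
    return voters - quorum_size(voters)
```

For example, with three voters the quorum is two and one voter can fail, while with six voters the quorum is four and two voters can fail.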

In order for cluster members to stay properly in sync, network latency between availability zones should be less than ten milliseconds (10 ms).
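One way to get a rough reading of inter-zone latency is to time TCP connection setup between servers in different availability zones. This is a minimal sketch under the assumption that TCP connect time is an acceptable proxy for network round-trip time; the function names are hypothetical, and dedicated network measurement tools will give more precise numbers.

```python
import socket
import statistics
import time


def tcp_connect_latency_ms(host: str, port: int, samples: int = 5, timeout: float = 2.0) -> float:
    """Return the median TCP connect time to host:port in milliseconds."""
    timings = []
    for _ in range(samples):
        start = time.perf_counter()
        with socket.create_connection((host, port), timeout=timeout):
            pass  # Only the handshake is timed; no data is sent.
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings)


def within_latency_budget(latency_ms: float, budget_ms: float = 10.0) -> bool:
    """Return True when the measured latency is below the 10 ms guideline."""
    return latency_ms < budget_ms
```

Measuring from a server in one zone to the RPC port of a server in another zone, and repeating at different times of day, helps confirm that the budget holds under normal load.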

Network connectivity

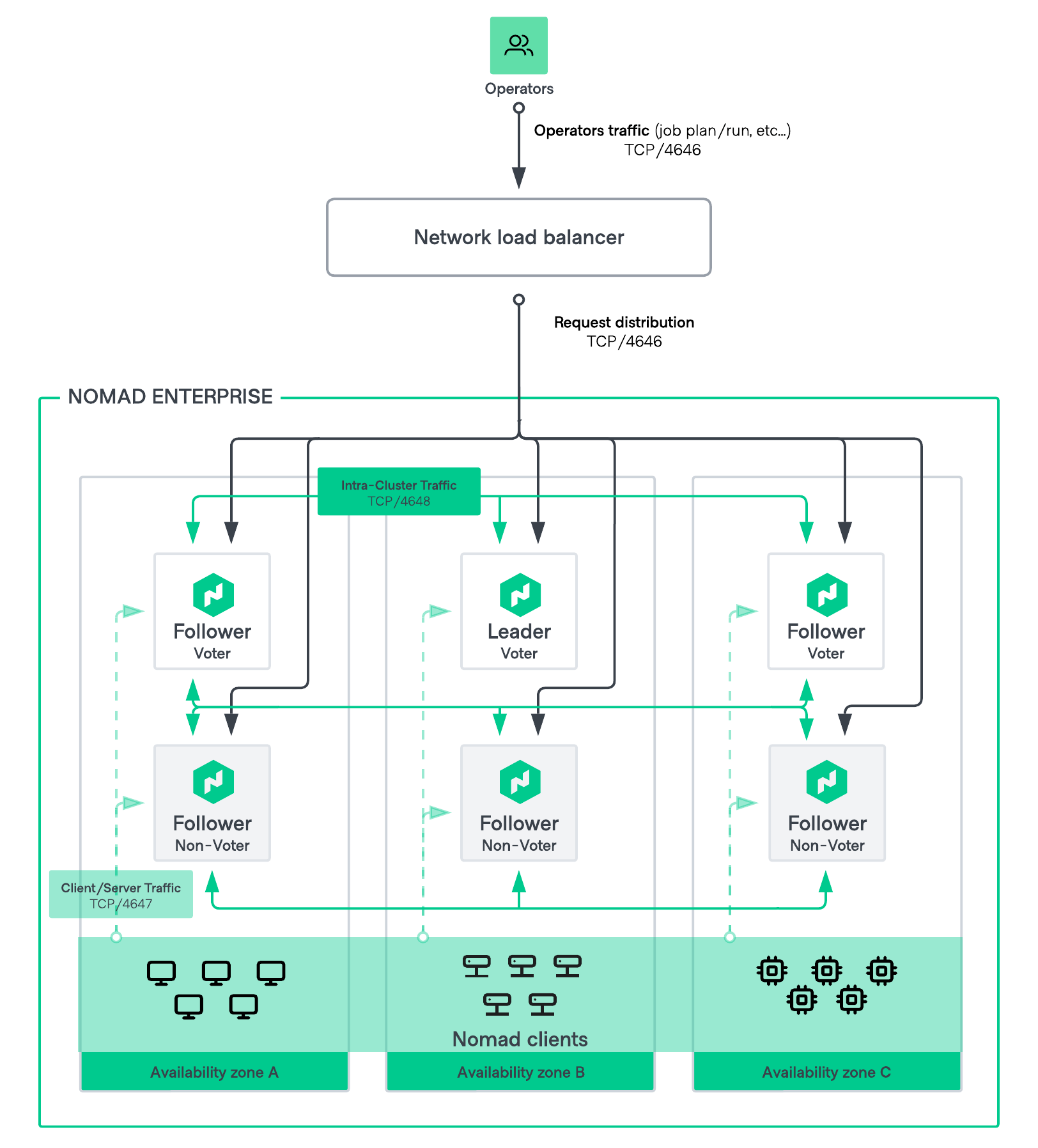

Figure 5: HVD Nomad networking diagram


The following table outlines the minimum network connectivity requirements for Nomad cluster nodes. You may also need to grant the Nomad servers outbound access to additional services that live elsewhere, either within your own internal network or via the internet.

Examples may include:

- authentication with SSO (OIDC)

- NTP, for maintaining consistent time between nodes

- remote log handlers, such as a Splunk or ELK environment

- metrics collection, such as Prometheus

| Source | Destination | Port | Protocol | Direction | Purpose |
|---|---|---|---|---|---|
| Operators / administration | Load balancer | 4646 | tcp | incoming | Request distribution |
| Load balancer | Nomad servers | 4646 | tcp | incoming | Nomad API |
| Nomad clients | Nomad servers | 4647 | tcp | incoming | RPC communication between clients and servers |
| Nomad servers | Nomad servers | 4648 | tcp/udp | bidirectional | Cluster bootstrapping, gossip, replication, request forwarding |
| Nomad servers | External systems | various | various | various | External APIs |
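The fixed-port rows of this table can be expressed as data so that a proposed firewall or security group change can be checked against them. This is an illustrative sketch: the `Rule` type, the group labels (`operators`, `load_balancer`, `clients`, `servers`), and `is_allowed` are hypothetical names, and the "External systems" row is omitted because its ports depend on your environment.

```python
from typing import NamedTuple


class Rule(NamedTuple):
    """One row of the connectivity table (hypothetical representation)."""
    source: str
    destination: str
    port: int
    protocols: frozenset


# Fixed-port rows from the table; the external-systems row is site-specific.
RULES = [
    Rule("operators", "load_balancer", 4646, frozenset({"tcp"})),
    Rule("load_balancer", "servers", 4646, frozenset({"tcp"})),
    Rule("clients", "servers", 4647, frozenset({"tcp"})),
    Rule("servers", "servers", 4648, frozenset({"tcp", "udp"})),
]


def is_allowed(source: str, destination: str, port: int, protocol: str) -> bool:
    """Return True if the flow matches one of the minimum required rules."""
    return any(
        rule.source == source
        and rule.destination == destination
        and rule.port == port
        and protocol in rule.protocols
        for rule in RULES
    )
```

Note that operators reach the servers only through the load balancer in this model, so a direct `operators` to `servers` flow on 4646 is not part of the minimum set.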

Nomad generally should not be exposed to inbound traffic via a publicly accessible endpoint. This minimizes both the risk of exposing the API and the risk of denial-of-service attacks.

Wherever possible, access to the Nomad API should be limited to the networks used by Nomad operators.

Traffic Encryption

Every segment of Nomad-related network traffic should be encrypted in transit using TLS. This includes communication from clients to the Nomad servers, as well as communication between operators and the Nomad control plane (servers).

You will need to create a standard X.509 public certificate from an existing trusted certificate authority internal to your organization. This certificate will represent the Nomad cluster, and will be served by each Nomad server in the cluster. Along with the certificate itself, you will also need to supply the certificate's private key, as well as a bundle file from the certificate authority used to generate the certificate. The whole process is covered in the Enable TLS for Nomad tutorial.
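On the consuming side, any tool that talks to the Nomad API needs to trust the same certificate authority bundle. The sketch below builds a TLS client context with Python's standard `ssl` module. The function name and its parameters (`ca_bundle`, `client_cert`, `client_key`) are hypothetical, and whether a client certificate is needed depends on how your cluster is configured.

```python
import ssl
from typing import Optional


def nomad_tls_context(
    ca_bundle: Optional[str] = None,
    client_cert: Optional[str] = None,
    client_key: Optional[str] = None,
) -> ssl.SSLContext:
    """Build a client-side TLS context that verifies the Nomad server certificate.

    ca_bundle: path to the CA bundle file; system trust store is used if None.
    client_cert / client_key: optional paths, only needed if the servers
    are configured to require client certificates.
    """
    context = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_bundle)
    # Refuse protocol versions older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    if client_cert:
        context.load_cert_chain(certfile=client_cert, keyfile=client_key)
    return context
```

The default context keeps certificate verification and hostname checking enabled, which is why the certificate must be issued for the DNS name that operators actually use.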

You should also encrypt Nomad's server-to-server gossip communication using gossip encryption, as described in the tutorial.

DNS

Internally, Nomad servers do not use DNS names to talk to other members of the cluster or to the clients, relying instead on IP addresses. However, you will need to configure a DNS record that resolves to the IP address of your load balancer. This is also the domain name for which you will provision your TLS certificate.
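A quick way to confirm the DNS record is to resolve it from an operator workstation and compare the result with the load balancer's address. This is a minimal sketch using the standard library; `resolve_addresses` is a hypothetical helper name.

```python
import socket


def resolve_addresses(hostname: str, port: int = 4646) -> list:
    """Return the sorted, de-duplicated IP addresses that hostname resolves to."""
    infos = socket.getaddrinfo(hostname, port, type=socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr);
    # the address is the first element of sockaddr.
    return sorted({info[4][0] for info in infos})
```

If the returned list does not contain the load balancer address, TLS hostname verification and the load balancer health checks will both be working against the wrong target.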

Load balancing

Use a TCP-based (layer 4) load balancer to distribute incoming requests across multiple Nomad servers within a cluster.

Configure the load balancer to listen on port 4646/tcp and forward requests to all Nomad servers in the cluster on port 4646/tcp.

All healthy nodes in the cluster should be able to receive requests in this way, including standby nodes (i.e., followers) and nodes configured as non-voters.

TLS passthrough

Configure the load balancer to use TLS passthrough, rather than terminating the TLS connection at the load balancer. This keeps the traffic end-to-end encrypted from the operators to the Nomad servers, removing even the load balancer as a possible attack vector.

Health check

Each Nomad server node provides a health check API endpoint at /v1/agent/health. HTTPS-based health checks provide a deeper check than simply checking the TCP port.

Configure your load balancer to perform a health check against all Nomad server nodes in a cluster by using the path /v1/agent/health, with an expected status code of 200. This ensures that requests are only sent to Nomad servers that are healthy and able to receive them.
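The same check the load balancer performs can be reproduced by hand when troubleshooting. The following is a minimal sketch that requests `/v1/agent/health` and treats only a 200 response as healthy. The function name and the `base_url` parameter are hypothetical, and the optional `context` argument is an `ssl.SSLContext` that trusts your certificate authority.

```python
import http.client
import ssl
from typing import Optional
from urllib.parse import urlsplit

HEALTH_PATH = "/v1/agent/health"


def server_is_healthy(
    base_url: str,
    context: Optional[ssl.SSLContext] = None,
    timeout: float = 3.0,
) -> bool:
    """Return True only if GET <base_url>/v1/agent/health answers with status 200."""
    parts = urlsplit(base_url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(
            parts.hostname, parts.port, timeout=timeout, context=context
        )
    else:
        conn = http.client.HTTPConnection(parts.hostname, parts.port, timeout=timeout)
    try:
        conn.request("GET", HEALTH_PATH)
        return conn.getresponse().status == 200
    except (OSError, http.client.HTTPException):
        # Connection refused, timeout, TLS failure, or malformed response.
        return False
    finally:
        conn.close()
```

Any connection error or non-200 status is reported as unhealthy, which mirrors how a load balancer would take the node out of rotation.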

Software

To deploy production Nomad, this Validated Design only requires the Nomad Enterprise software binaries to be installed on the Nomad servers and clients.

The Nomad server process should not be run as root.

Instead, create a dedicated user to run the Nomad service and to own the files used and created by Nomad.
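A deployment script can verify both conditions before starting the service. The sketch below is illustrative only: `running_as_root` and `owned_by` are hypothetical helper names, and the numeric user ID of the dedicated account is assumed to be known to the caller.

```python
import os
from typing import Optional


def running_as_root(euid: Optional[int] = None) -> bool:
    """Return True if the given (or current) effective user ID is root."""
    uid = os.geteuid() if euid is None else euid
    return uid == 0


def owned_by(path: str, uid: int) -> bool:
    """Return True if the file or directory at path is owned by the given user ID."""
    return os.stat(path).st_uid == uid
```

A pre-start check would refuse to continue if `running_as_root()` is true, or if the Nomad data and configuration directories are not owned by the dedicated user.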

Nomad License

Your Nomad Enterprise license must be deployed on every Nomad server node in order for the Nomad process to start.

Configuration

The Nomad configuration file is read each time the Nomad process is started.

The configuration file is made up of a number of different stanzas. Each stanza is a section of the configuration file that configures a different aspect of Nomad's behavior. You can start running Nomad with minimal configuration thanks to its safe defaults. However, depending on your specific environment, you may need to fine-tune the settings.

Operating systems

The Validated Design Terraform module uses Ubuntu 22.04, but the same principles would apply to any other modern Linux distro. There are also Nomad releases for Windows and macOS.

Node Pools

Using node pools in HashiCorp Nomad offers several advantages:

Isolation: Node pools allow you to isolate workloads by grouping nodes with similar characteristics or purposes. This can be useful for separating production and development environments, or for isolating workloads with different security or compliance requirements.

Resource Management: By grouping nodes into pools, you can better manage and allocate resources, especially specific ones such as GPUs. This ensures that specific workloads have access to the resources they need without interference from other jobs.

Affinity and Constraints: While affinities and constraints can express preferences for certain nodes, node pools provide a more robust way to ensure that allocations for a job are placed on the desired set of nodes. This helps in maintaining consistency and predictability in job placement.

Operational Efficiency: Node pools simplify the management of large clusters by allowing you to apply configurations and policies at the pool level rather than individually to each node. This reduces operational overhead and complexity.

Scalability: As your infrastructure grows, node pools make it easier to scale by adding or removing nodes from specific pools without disrupting the overall cluster.

Improved Scheduling: Node pools enhance the scheduler's ability to make informed decisions about where to place workloads, leading to better utilization of resources and improved performance.

Example Use Cases:

- Environment Separation: Use separate node pools for production, staging, and development environments to ensure isolation and prevent resource contention.

- Specialized Hardware: Create node pools for nodes with specialized hardware, such as GPUs, to ensure that only jobs requiring those resources are scheduled on those nodes.

- Compliance and Security: Use node pools to group nodes that meet specific compliance or security requirements, ensuring that sensitive workloads are only placed on compliant nodes.

By leveraging node pools, you can achieve greater control, efficiency, and flexibility in managing your Nomad cluster.
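The placement effect of node pools can be pictured with a toy model: a job is only considered for nodes that belong to the job's pool. The sketch below is a simplified illustration and not the Nomad scheduler. The `Node` and `Job` types and the pool names are hypothetical, and the model ignores constraints, affinities, and resource fit.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Node:
    name: str
    node_pool: str = "default"


@dataclass(frozen=True)
class Job:
    name: str
    node_pool: str = "default"


def eligible_nodes(job: Job, nodes: List[Node]) -> List[str]:
    """Return the names of nodes in the same pool as the job, in input order."""
    return [node.name for node in nodes if node.node_pool == job.node_pool]


# Hypothetical cluster: two general-purpose nodes and one GPU node.
CLUSTER = [
    Node("node-a"),
    Node("node-b"),
    Node("gpu-1", node_pool="gpu"),
]
```

In this model a job assigned to the `gpu` pool is only eligible for `gpu-1`, and jobs in the `default` pool never land on it, which is the isolation property described above.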

Multi-region federation

You should consider using federation with HashiCorp Nomad in the following scenarios:

Multi-Region Deployments: If your infrastructure spans multiple geographic regions, federation can help manage and orchestrate workloads across these regions, ensuring high availability and disaster recovery.

Workload Segmentation: When you need to segment workloads for different business units, teams, or environments (e.g., production, staging, development), federation allows you to manage these segments independently while still maintaining a unified control plane.

Resource Optimization: Federation enables you to optimize resource utilization by sharing workloads across multiple datacenters or cloud providers, balancing the load and reducing costs.

Compliance and Security: If you have specific compliance or security requirements that necessitate isolating certain workloads or data, federation can help enforce these policies while still providing centralized management.

Scalability: As your organization grows, federation allows you to scale your Nomad infrastructure horizontally by adding new regions or datacenters without disrupting existing workloads.

Disaster Recovery: Federation provides a robust disaster recovery strategy by allowing you to fail over workloads to another region or datacenter in case of an outage. Caveats apply; consult the related Federation architecture, operations, and Failure scenarios documents, which cover the topic in detail.

Example Use Cases:

- Global Applications: Deploy applications that need to be available globally, ensuring low latency and high availability by distributing workloads across multiple regions.

- Hybrid Cloud: Manage workloads across on-premises datacenters and multiple cloud providers, optimizing for cost and performance.

- Regulatory Compliance: Isolate workloads that handle sensitive data to specific regions or datacenters to comply with local regulations.

By leveraging federation, you can achieve greater resilience, flexibility, and efficiency in managing your Nomad infrastructure.