Secure proxy

Secure proxy is a Boundary worker capability that is responsible for proxying sessions between clients and targets.

Operating modes

The following sections describe the various operating modes, topology, and network connectivity requirements.

- Proxying target sessions

- Proxying Vault connections

- Proxying multi-hop sessions

The mode of operation classifies Boundary workers as follows:

- Ingress worker

- Intermediary worker

- Egress worker

All workers use the same binary, and configuration determines their mode of operation. Your access requirements determine which operating modes the topology needs. For example, proxying multi-hop sessions requires at least one ingress worker and one egress worker.

All operating modes and topologies apply to HCP Boundary and Boundary Enterprise.

HCP Boundary

Self-managed workers allow users to connect to private endpoints securely without routing traffic through HCP Boundary or HashiCorp-managed infrastructure.

For example, organizations with on-premises environments can deploy self-managed workers in their private networks to allow connectivity to those targets with HCP Boundary, often in a multi-hop topology with ingress and egress workers.

Self-managed workers use public key infrastructure (PKI) for authentication. They authenticate to Boundary using a certificate-based method that allows you to deploy workers without using a shared key management service (KMS).

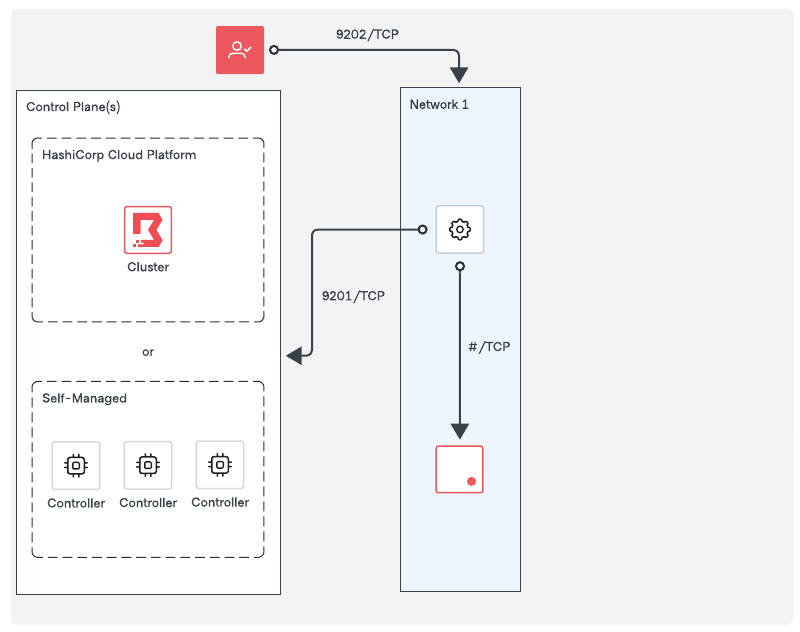

Proxying target sessions

You can proxy sessions between clients and targets with a single-layer approach, which is the minimum requirement for establishing sessions. This approach is a simplified topology. Use it only in non-production scenarios such as testing or proofs of concept.

Network requirements

- Outbound connectivity (default port TCP-9201) to an existing trusted Boundary control point, that is, the cluster URL. This can be, for example, a Boundary worker or the Boundary control plane.

- Outbound connectivity to the remote service port of the target.

- Inbound connectivity (default port TCP-9202) from clients establishing sessions to the targets.
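The outbound requirements above can be spot-checked from the worker host before you deploy. The following is a minimal sketch, not part of Boundary itself: the function names `can_connect` and `check_worker_egress` are hypothetical, and the host names you pass in are placeholders for your own cluster URL host and target. It only confirms that a TCP connection can be opened, not that the remote side is a healthy Boundary or target service.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port opens within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


def check_worker_egress(upstream_host: str, target_host: str, target_port: int,
                        upstream_port: int = 9201) -> dict:
    """Check the two outbound paths a worker needs (hypothetical helper).

    upstream_port defaults to 9201, the default port named in the
    requirements above; target_port is the remote service port of the target.
    """
    return {
        "upstream": can_connect(upstream_host, upstream_port),
        "target": can_connect(target_host, target_port),
    }
```

To check the inbound requirement, run `can_connect` from a client machine against the worker's address on port 9202 (or whichever port you configured).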

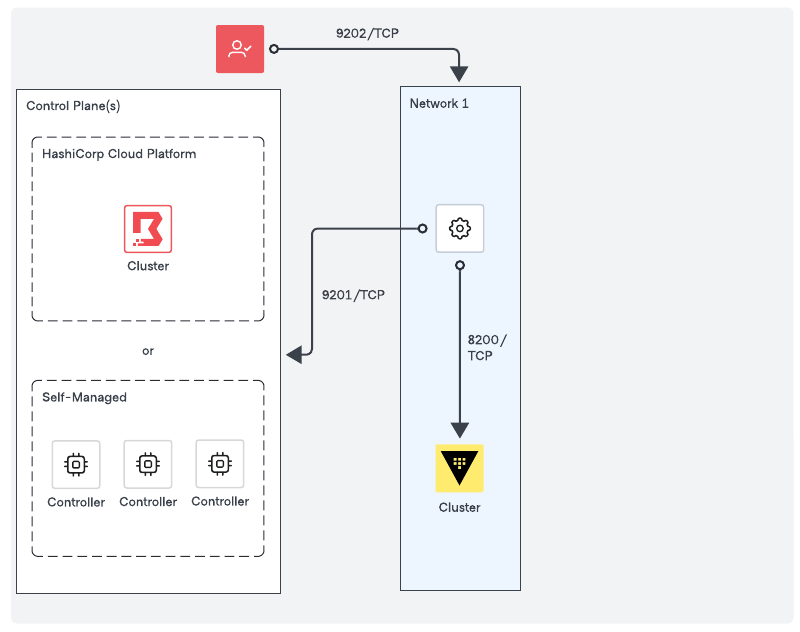

Proxying Vault connections

As with proxying target sessions, a worker can proxy connections to private Vault clusters to provide integration between Boundary and Vault.

Network requirements

- Outbound connectivity (default port TCP-9201) to an existing trusted Boundary control point, that is, the cluster URL. This can be, for example, a Boundary worker or the Boundary control plane.

- Outbound connectivity (default port TCP-8200) to the Vault cluster.

High availability and sizing

Recommendations for high availability

Each network enclave that Boundary accesses should have at least one worker to provide connectivity. Deploying workers into each network enclave lets organizations minimize and simplify the firewall and security requirements typically needed to provide connectivity to targets.

We recommend at least three workers per network enclave to ensure high availability for production environments. The Boundary control plane assigns sessions to workers based on the following:

- Which workers are candidates to proxy a session based on the worker's tags and the target's worker filter, and

- The health and connectivity of candidate workers.

You do not need a load balancer to manage worker traffic.
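As a rough illustration of the two selection criteria above, the sketch below filters a list of workers by tags and health. It is a simplified stand-in and not Boundary's implementation: real worker filters are boolean expressions evaluated by the control plane, whereas this example assumes a plain "every required tag value must be present" rule. The `Worker` class and the `matches` and `candidate_workers` functions are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class Worker:
    """Hypothetical, simplified view of a worker as seen by a scheduler."""
    name: str
    tags: dict[str, set[str]] = field(default_factory=dict)
    healthy: bool = True


def matches(worker: Worker, required: dict[str, str]) -> bool:
    """True if the worker carries every required tag key/value pair.

    Assumption: this stands in for a target's worker filter, which in
    Boundary is a richer boolean expression.
    """
    return all(value in worker.tags.get(key, set())
               for key, value in required.items())


def candidate_workers(workers: list[Worker], required: dict[str, str]) -> list[Worker]:
    """Keep workers that satisfy the tag requirement and are healthy."""
    return [w for w in workers if w.healthy and matches(w, required)]
```

For example, given three workers tagged by region where one matching worker is unhealthy, `candidate_workers(workers, {"region": "us-east-1"})` returns only the healthy workers in that region. Running several workers per enclave keeps that list non-empty when one worker fails.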

The constraints of your access use case and the sensitivity of workloads in each network enclave dictate the redundancy and size you require for your workers.

Sizing guidance

We have divided sizing recommendations into two cluster sizes. They represent a starting point based on your use cases.

- Small clusters are appropriate for most initial production deployments or non-production environments.

- Large clusters are production environments with a large number of Boundary clients.

We recommend that you continuously monitor your cloud provider's network throughput limits for your machine types as you use Boundary. Observe the relevant network metrics where possible, in addition to other host metrics, so that you can scale Boundary horizontally or vertically as needed.

| Provider | Size | Instance/VM Type |
|---|---|---|
| AWS | Small | m5.large, m5.xlarge |
| AWS | Large | m5n.2xlarge, m5n.4xlarge |
| Azure | Small | Standard_D2s_v3, Standard_D4s_v3 |
| Azure | Large | Standard_D8s_v3, Standard_D16s_v3 |
| GCP | Small | n2-standard-2, n2-standard-4 |
| GCP | Large | n2-standard-8, n2-standard-16 |

Additional documentation

- AWS: EC2 Network Performance and Monitoring EC2 Network Performance

- Azure: Azure Virtual Machine Throughput and Accelerated Network for Azure VMs

- GCP: Network Bandwidth and About Machine Families

Worker performance

Performance is most affected by the number of concurrent sessions the worker is proxying and the data transfer rates within those sessions. The size of workers depends on how you use Boundary. For example, if you mainly proxy SSH connections and HTTP access, your instance selection and performance differ from those of a large data transfer use case.

Organizations should monitor worker performance using the appropriate telemetry platform. In addition to the typical OS metrics (for example, CPU, memory, disk, and network), capture Boundary-specific worker metrics to gain better insight into performance and to help Boundary administrators make decisions about scaling.
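If your telemetry platform does not already scrape the worker, a small script can pull out the Boundary-specific series for a quick look. The sketch below assumes the worker's metrics are available as Prometheus text exposition format and that worker metric names share the `boundary_worker_` prefix; confirm both against your own deployment. The function name `parse_worker_metrics` is hypothetical, and the metric name in `SAMPLE` is a made-up placeholder, not a real Boundary metric. The parser also assumes that sample lines carry no trailing timestamp.

```python
def parse_worker_metrics(text: str, prefix: str = "boundary_worker_") -> dict[str, float]:
    """Sum sample values per metric name for series starting with prefix.

    Assumes Prometheus text format without timestamps, so the last
    space-separated field on each sample line is the value.
    """
    totals: dict[str, float] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        series, _, value = line.rpartition(" ")
        name = series.split("{", 1)[0]  # drop the label set, if any
        if not name.startswith(prefix):
            continue
        try:
            totals[name] = totals.get(name, 0.0) + float(value)
        except ValueError:
            continue  # ignore lines whose value is not numeric
    return totals


# Hypothetical sample; the metric name below is a placeholder.
SAMPLE = """\
# TYPE boundary_worker_example_active_connections gauge
boundary_worker_example_active_connections{conn_type="a"} 4
boundary_worker_example_active_connections{conn_type="b"} 2
go_goroutines 57
"""
```

Calling `parse_worker_metrics(SAMPLE)` sums the two labeled series into one total and ignores the non-Boundary `go_goroutines` line. Tracking such totals over time, next to host CPU and network figures, gives a basis for deciding when to add workers or move to a larger instance type.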