Dynamic secrets

Dynamic secrets are a powerful feature in Vault that generates temporary, lease-based credentials for various services and systems. Vault creates these credentials on demand and gives each one a limited lifespan, which reduces the risk of credential exposure and misuse.

Dynamic secrets provide several benefits, including improved security and centralized credential management, auditing, and rotation. By adopting dynamic secrets, organizations can strengthen their security posture and reduce the risk of credential mismanagement.

Dynamic secrets overview

The process of generating and consuming dynamic secrets involves the following steps:

- Configure Vault: Enable and configure the appropriate secrets engine (for example, AWS, Azure, or database), along with the necessary authentication and authorization mechanisms.

- Request dynamic credentials: An application or service requests dynamic credentials from Vault, specifying the desired service and any additional parameters (e.g., database name, user permissions).

- Generate and lease credentials: Vault generates temporary credentials for the requested service and associates them with a lease. The lease determines the lifetime of the credentials.

- Use credentials: The application or service uses the dynamic credentials to authenticate to the requested service or system for as long as the lease is active.

- Renew or revoke: Before the lease expires, the application can renew the lease to extend the credentials' lifetime, up to the maximum TTL. Alternatively, the credentials can be revoked manually; otherwise, Vault revokes them automatically when the lease expires.
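The lease rules in the steps above can be made concrete with a small model. The following Java sketch is hypothetical and does not call the Vault API. The class name LeaseModel and its methods are illustrative. It assumes that a lease starts at the default TTL and that a renewal sets the expiry to "now plus the requested increment". It also assumes that no renewal can push the expiry past the issue time plus the max TTL.

```java
import java.time.Duration;
import java.time.Instant;

/**
 * Hypothetical in-memory model of a Vault-style lease (illustration only, not the Vault API).
 * Assumed rules: the lease starts at defaultTtl, a renewal sets expiry to now + increment,
 * and the expiry can never exceed issueTime + maxTtl.
 */
final class LeaseModel {
    private final Instant issueTime;
    private final Duration maxTtl;
    private Instant expireTime;
    private boolean revoked;

    LeaseModel(Instant issueTime, Duration defaultTtl, Duration maxTtl) {
        this.issueTime = issueTime;
        this.maxTtl = maxTtl;
        this.expireTime = issueTime.plus(defaultTtl);
    }

    /** Credentials are usable only while the lease is neither revoked nor expired. */
    boolean isActive(Instant now) {
        return !revoked && now.isBefore(expireTime);
    }

    /** Renews by the requested increment, capped at issueTime + maxTtl; returns the new expiry. */
    Instant renew(Instant now, Duration increment) {
        if (!isActive(now)) {
            throw new IllegalStateException("cannot renew an expired or revoked lease");
        }
        Instant requested = now.plus(increment);
        Instant hardLimit = issueTime.plus(maxTtl);
        expireTime = requested.isAfter(hardLimit) ? hardLimit : requested;
        return expireTime;
    }

    /** Manual revocation; for a database lease, Vault would also drop the database user. */
    void revoke() {
        revoked = true;
    }

    Instant expireTime() {
        return expireTime;
    }
}
```

Under these assumptions, a role with default_ttl=1h and max_ttl=24h (like the role created later in this document) yields credentials that renewals can keep alive for at most 24 hours. After that, the application must request new credentials.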

HashiCorp Vault includes a large ecosystem of plugins to support identity and access integrations across a wide variety of external services:

- Cloud credentials - AWS, Azure, GCP

- Database credentials - Postgres, MySQL, Oracle, MongoDB, RabbitMQ, etc.

- Certificates - PKI

- API credentials - Kubernetes, Nomad, Consul, Terraform Cloud, etc.

- Identity management - SSH, LDAP, Kerberos, Okta, GitHub, etc.

- Ecosystem credentials - Artifactory, Venafi, third-party plugins

A list of Vault integrations can be found here: https://developer.hashicorp.com/vault/integrations

Architecture of dynamic secrets

We recommend adopting dynamic secrets in phases, in the following order of priority:

- Cloud credentials in HCP Terraform: If you use HCP Terraform to provision infrastructure, leverage Vault’s dynamic provider credentials.

- Cloud credentials in jobs, CI/CD pipelines: If you use cloud credentials embedded in jobs or CI/CD pipelines, transition to using dynamic cloud credentials.

- DB credentials in jobs, CI/CD pipelines: If you use static DB credentials in jobs or CI/CD pipelines, we recommend converting them to dynamic DB credentials.

- DB credentials in applications: Convert applications that access sensitive databases to use dynamic credentials. For applications that are not Vault-aware, use Vault Agent.

- PKI: Use Vault to drive certificate signing and renewal.

- Third-party integrations: Adopt as needed.

Recommendation on TTL

Avoid dangling leases, because Vault consumes memory to track every outstanding lease and to clean it up. Tune your TTLs: start with a generous value (24-48 hours) and fine-tune it based on the application's requirements and lifecycle.

Ensure that the TTL for batch and pipeline jobs exceeds the maximum possible runtime of your longest-running jobs.

Short-lived applications should have short-lived TTLs. Long-lived leases for short-lived applications can have negative memory consequences for Vault.
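To make the batch-job guidance concrete, the following Java sketch sizes a job's TTL from its worst-case runtime. The class TtlSizing and the 25% safety margin are assumptions for illustration, not Vault defaults. The max_ttl check reflects that no lease can outlive the max TTL, whether or not it is renewed.

```java
import java.time.Duration;

/**
 * Hypothetical helper for sizing a job's credential TTL.
 * Assumption: a 25% margin over the worst-case runtime is an illustrative starting point.
 */
final class TtlSizing {
    static Duration ttlForJob(Duration worstCaseRuntime, Duration maxTtl) {
        // Add headroom for retries and unusually slow runs (assumed 25%).
        Duration withMargin = worstCaseRuntime.plus(worstCaseRuntime.dividedBy(4));
        if (withMargin.compareTo(maxTtl) > 0) {
            // A single lease can never outlive max_ttl, even with renewals, so either
            // raise max_ttl or design the job to request fresh credentials mid-run.
            throw new IllegalArgumentException(
                "worst-case runtime plus margin exceeds max_ttl");
        }
        return withMargin;
    }
}
```

A job that can run for 4 hours would get a 5-hour TTL under a 24-hour max_ttl. A 20-hour job would be rejected, because 25 hours exceeds the cap.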

Network considerations

For dynamic secrets to be implemented, Vault needs a network path to the resource being managed.

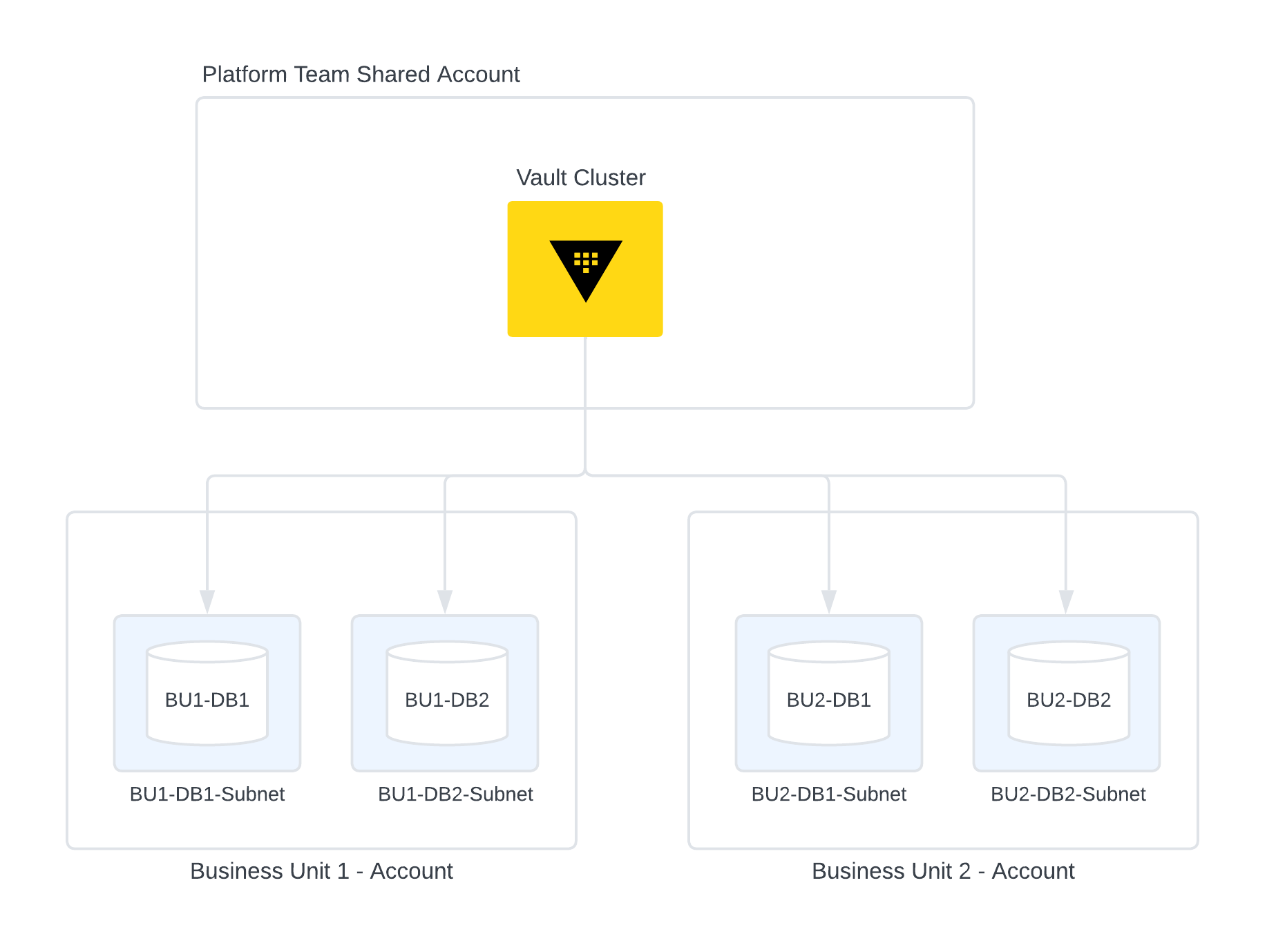

In the example below, the Vault cluster runs in a shared cloud account managed by the platform team. Each business unit that uses dynamic database credentials operates a separate account, with its databases in isolated DB subnets. Adopting Vault dynamic database credentials in this scenario requires a network topology in which Vault can reach each of those DB subnets.

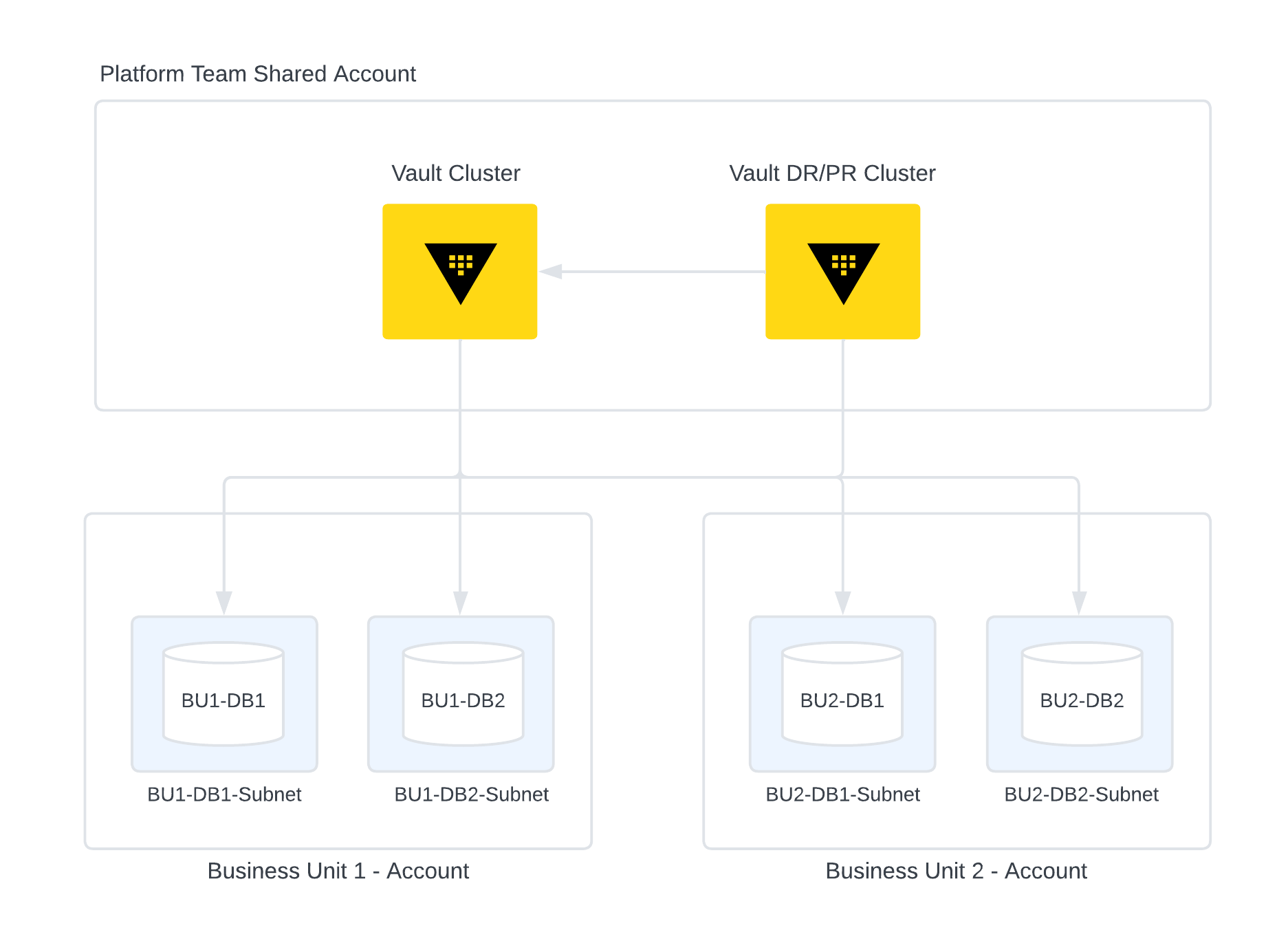

When Vault DR/PR secondary clusters are available, ensure that they also have network access to the tenant databases. This ensures that, after a failover, the promoted cluster can continue to manage the dynamic database accounts.

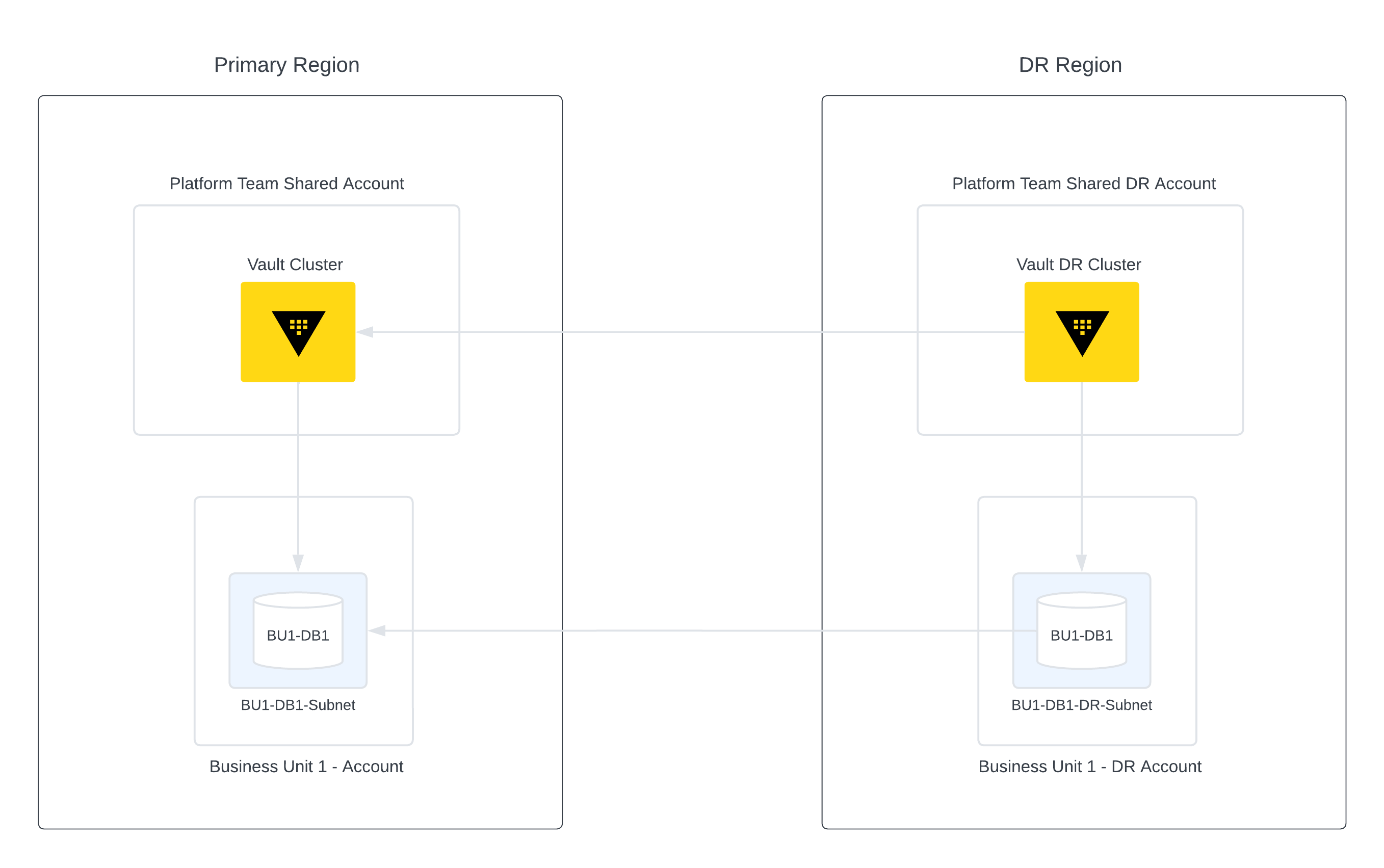

If a region fails and both the DR Vault cluster and the DR database are promoted, note that the promoted Vault cluster may be unable to reach the DR database, because the existing database mount's connection configuration points at the primary database. We recommend creating a separate database mount for DR purposes. This approach assumes that the application is configured to use the DR database mount and can request new leases from it.

The connection_url parameter in the database secrets engine configuration determines how Vault reaches the database. If every failover replica of your database is reachable through the same client-facing FQDN or IP address, you can avoid configuring multiple mounts for database failover. To provide that single address across the regions where your Vault clusters run, front your replicated database with virtual IP addresses (VIPs), a global load balancer, or DNS with failover.

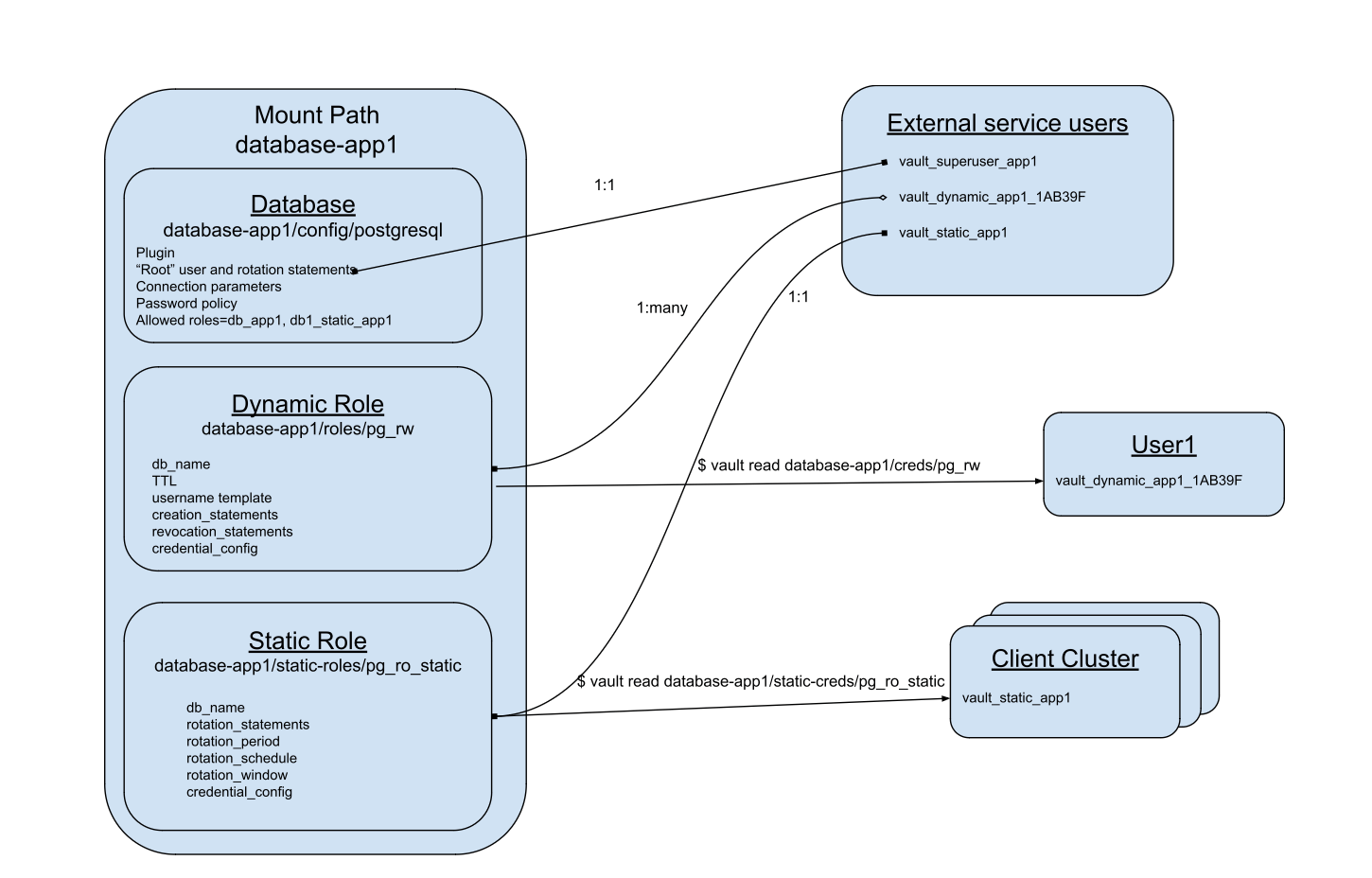

Dynamic secrets engine path

The location or mount point at which a dynamic secrets engine is activated determines specific capabilities and restrictions that impact the organization of your dynamic credentials. This includes root credential management, connection parameters, and credential roles for Vault to manage.

“Root” user credential and rotation

The “root” user in this context is a superuser service account created in an external service exclusively for Vault to manage roles. This account is then configured as the “root” user in one dynamic secrets engine in Vault.

For an AWS secrets engine, this would be an IAM user and its access key. For a PostgreSQL database secrets engine, this would be a database superuser and password. The endpoint rotate-root is used to manually rotate the credential of this root user. After Vault rotates the credential, there is no way to retrieve it.

There must be a 1:1 relationship between “root” users and dynamic secrets configurations in Vault. Once added to Vault, this “root” user must not be used or managed outside of Vault, and must not be used for more than one dynamic secrets engine within Vault. For the same reason, do not use the same service account for a “root” user in a secrets engine and the “root” user in an auth method.
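The 1:1 rule is easy to break as configurations accumulate, so it can help to audit for violations. The following Java sketch is hypothetical. It assumes you keep your own inventory that maps each Vault configuration path (secrets engine or auth method) to the external account it uses. It reports every account that appears more than once. The class name and inventory format are illustrative.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/**
 * Hypothetical audit helper. Input: your own inventory of Vault config path -> external
 * "root" account name. Output: each account used by more than one config, with its paths.
 */
final class RootAccountAudit {
    static Map<String, List<String>> findSharedRootAccounts(Map<String, String> accountByConfigPath) {
        Map<String, List<String>> pathsByAccount = new HashMap<>();
        for (Map.Entry<String, String> entry : accountByConfigPath.entrySet()) {
            pathsByAccount.computeIfAbsent(entry.getValue(), k -> new ArrayList<>()).add(entry.getKey());
        }
        // Keep only accounts that violate the 1:1 rule.
        pathsByAccount.values().removeIf(paths -> paths.size() < 2);
        return pathsByAccount;
    }
}
```

Because the inventory can include auth method paths, the same check also catches a service account shared between a secrets engine and an auth method.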

The “root” user should have only the permissions it needs to create service accounts with their further-reduced scope. These “root” user permissions are managed in the external service. For the AWS secrets engine, the access would be defined by an AWS policy and role applied to the IAM “root” user. For the PostgreSQL database secrets engine the access would be defined by the SQL statements used to create a PostgreSQL role in the PostgreSQL database server.

Define the appropriate RBAC and account creation statements for your root and dynamic users with the help of your identity and access team and a cloud platform SME or database SME in your organization. For some examples related to specific databases, see the section of this document titled Database account creation examples.

Dynamic secrets engine roles

Role configurations are nested under a dynamic secrets engine mount path. The parameters for a role depend on the type of dynamic credential. There are two types of roles within most dynamic secrets engines. Dynamic roles are used to manage the full lifecycle of credentials in external systems. Static roles are used to manage and rotate only the secret portion of a persistent credential.

Credentials under a dynamic role are created and destroyed by Vault. A dynamic role configuration defines the parameters for the role, how credentials under that role are created, and how long each credential remains valid.

A static role configuration specifies an external credential under management, including the timing and method of rotating its secret. Static credentials are created in the external system first and then added to Vault with an initial credential, which Vault then rotates under management.

All built-in database secrets engine plugins support both dynamic and static roles. The LDAP, AWS, and GCP secrets engines also provide static roles. The Azure secrets engine can instead use an existing service principal, which a role references through its application_object_id parameter.

For more information about database dynamic roles, see section on "Database dynamic roles" in this document.

Database secrets engine

Config

The config/:name endpoint of a database secrets engine includes settings to configure each database connection and type. These parameters are defined by the database plugin being used, so they may differ between various databases. The name used here must be re-used by the roles that leverage this connection.

For more information, see the server and API documentation: https://developer.hashicorp.com/vault/docs/secrets/databases and https://developer.hashicorp.com/vault/api-docs/secret/databases#configure-connection

Example configuration

```shell
# Demo values only: use TLS rather than sslmode=disable outside a lab.
$ export POSTGRES_URL=127.0.0.1:5432
$ vault write database-app1/config/postgresql \
    plugin_name=postgresql-database-plugin \
    allowed_roles=pg-ro,pg-rw,pg-static \
    connection_url="postgresql://{{username}}:{{password}}@$POSTGRES_URL/demoapp?sslmode=disable" \
    username=db_admin_vault \
    password=insecure_password
Success! Data written to: database-app1/config/postgresql
```

Root credential rotation

To secure Vault’s “root” credential after it is configured, Vault provides an endpoint to rotate the credential. This will replace the manually-entered credential with a credential that only this Vault configuration knows. For this reason, do not use the same “root” credential in more than one location, even within the same Vault cluster. Immediate root credential rotation reduces risk of someone else accessing and using that credential.

```shell
$ vault write -force database-app1/rotate-root/postgresql
```

Considerations for database secrets engine organization

You can configure multiple database connections per mount point by writing separate configs, each with its own database plugin and connection parameters. If you configure multiple database types under one mount path, use each connection's allowed_roles parameter to restrict which roles can use that connection.
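The following Java sketch models the relationship described above. A role's db_name selects a connection, and that connection's allowed_roles list must include the role. This is a hypothetical illustration, not Vault code; the class name is invented. It treats "*" as allowing every role and deliberately does not model any other pattern matching.

```java
import java.util.List;
import java.util.Map;

/**
 * Hypothetical model: a role may use a connection only if the connection named by the
 * role's db_name exists and lists the role (or "*") in its allowed_roles.
 */
final class AllowedRolesCheck {
    static boolean roleMayUse(String roleName, String dbName,
                              Map<String, List<String>> allowedRolesByConnection) {
        List<String> allowed = allowedRolesByConnection.get(dbName);
        if (allowed == null) {
            return false; // no connection with that name under this mount
        }
        return allowed.contains("*") || allowed.contains(roleName);
    }
}
```

With the example configuration in this document, pg-ro may use the postgresql connection. A role intended for a second database type under the same mount would be refused unless that database's connection lists it.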

Configuring multiple database types and connections under one mount point has some pros and cons. Doing so can consolidate configuration of Vault policies for an app that happens to use multiple database connections. However, providing multiple database connections under one mount point could lead to inadvertent mismanagement, such as revoking credentials across multiple databases accidentally, or requesting the wrong credential altogether. It also could add forensic complexity during an audit.

Configuring a secrets engine path per application enables management of one application's secrets separately from other applications’ secrets, isolating policy management, root credentials, and credential lifecycle management.

For these reasons, it is generally a best practice to create one secrets engine mount point per database, per application.

Database Roles

Roles define the credential generation of the database secrets engine. You can have multiple roles per database configuration. For example, you may want both read-only and read-write roles for a PostgreSQL server. The same Vault “root” user will require appropriate capabilities in the database to manage all roles that use the same database configuration.

Database roles have creation_statements, which define the SQL statements that Vault executes when it connects to the database to create a dynamic database user.

Username customization improves visibility within the database. For example, with the username_template parameter on the database connection, you can prefix generated usernames with vault_managed so that database engineers can see which accounts are managed exclusively by Vault. This helps prevent accidental deletion of Vault-managed accounts.

Configurable password policies let you meet your organization's complexity requirements for compliance. Password policies can be defined at the database configuration level and at the role level, so each database role can either inherit or override the password policy.
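To show what a customized username looks like, the following Java sketch generates names with the same shape as the username in the "Generate a dynamic database credential" example. That shape is a prefix, the role name, a 20-character random suffix, and a Unix timestamp. Here the prefix is vault_managed. This is hypothetical application code, not Vault's template engine. In Vault you would express the format with username_template. The 63-character cap reflects PostgreSQL's identifier length limit.

```java
import java.security.SecureRandom;
import java.time.Instant;

/**
 * Hypothetical generator mimicking a customized username format:
 * vault_managed-<role>-<20 random alphanumerics>-<unix time>, truncated to 63 characters.
 */
final class UsernameSketch {
    private static final String ALPHANUM =
        "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789";
    // PostgreSQL truncates identifiers longer than 63 bytes, so cap the name here.
    private static final int MAX_LEN = 63;
    private static final SecureRandom RNG = new SecureRandom();

    static String generate(String roleName, Instant now) {
        StringBuilder suffix = new StringBuilder();
        for (int i = 0; i < 20; i++) {
            suffix.append(ALPHANUM.charAt(RNG.nextInt(ALPHANUM.length())));
        }
        String name = "vault_managed-" + roleName + "-" + suffix + "-" + now.getEpochSecond();
        // Truncate the whole name, as a template-level truncate would.
        return name.length() > MAX_LEN ? name.substring(0, MAX_LEN) : name;
    }
}
```

With long role names, truncation cuts off the timestamp first. That is one reason to keep role names short when a prefix is added.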
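A password policy essentially combines a total length with minimum counts of characters from required character sets. The following Java sketch illustrates that idea. It is hypothetical: Vault evaluates real password policies on the server side, and the class name, character sets, and counts here are placeholders, not organizational requirements.

```java
import java.security.SecureRandom;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/**
 * Hypothetical generator illustrating a password policy: a total length plus a minimum
 * number of characters from each required character set.
 */
final class PasswordPolicySketch {
    private static final SecureRandom RNG = new SecureRandom();

    static String generate(int length, String[] charsets, int[] minChars) {
        if (charsets.length != minChars.length) {
            throw new IllegalArgumentException("one minimum per character set");
        }
        int required = 0;
        StringBuilder union = new StringBuilder();
        for (int i = 0; i < charsets.length; i++) {
            required += minChars[i];
            union.append(charsets[i]);
        }
        if (required > length) {
            throw new IllegalArgumentException("minimums exceed total length");
        }
        List<Character> chars = new ArrayList<>(length);
        // Satisfy each rule's minimum count first.
        for (int i = 0; i < charsets.length; i++) {
            for (int j = 0; j < minChars[i]; j++) {
                chars.add(randomChar(charsets[i]));
            }
        }
        // Fill the remaining positions from the union of all character sets.
        String all = union.toString();
        while (chars.size() < length) {
            chars.add(randomChar(all));
        }
        // Shuffle so the required characters do not always lead the password.
        Collections.shuffle(chars, RNG);
        StringBuilder out = new StringBuilder(length);
        for (char c : chars) {
            out.append(c);
        }
        return out.toString();
    }

    private static char randomChar(String charset) {
        return charset.charAt(RNG.nextInt(charset.length()));
    }
}
```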

Create a dynamic database role

First, define the creation statements for your role. Determine the statements together with your database administration, security, and IAM teams, as appropriate for your organization; the database team may provide them to you in a file. This example writes statements that grant broad read-only access to a local file:

```shell
$ tee readonly.sql <<EOF
CREATE ROLE "{{name}}" WITH LOGIN PASSWORD '{{password}}' VALID UNTIL '{{expiration}}';
COMMENT on ROLE "{{name}}" IS 'Role managed by Vault';
GRANT CONNECT ON DATABASE demoapp TO "{{name}}";
GRANT SELECT ON ALL TABLES IN SCHEMA public TO "{{name}}";
EOF
```

The next command creates the role in Vault, using the statements from a file. The db_name value must match the name of the database secrets engine object created earlier (not the database hostname). In this example, it is postgresql.

```shell
$ vault write database-app1/roles/pg-ro \
    db_name=postgresql \
    creation_statements=@readonly.sql \
    default_ttl=1h max_ttl=24h
Success! Data written to: database-app1/roles/pg-ro
```

View the dynamic database role

You can read the same role endpoint to view the role configuration and SQL statements.

```shell
$ vault read database-app1/roles/pg-ro
Key                      Value
---                      -----
creation_statements      [CREATE ROLE "{{name}}" WITH LOGIN PASSWORD '{{password}}' VALID UNTIL '{{expiration}}';
COMMENT on ROLE "{{name}}" IS 'Role managed by Vault';
GRANT CONNECT ON DATABASE demoapp TO "{{name}}";
GRANT SELECT ON ALL TABLES IN SCHEMA public TO "{{name}}";]
credential_type          password
db_name                  postgresql
default_ttl              1h
max_ttl                  24h
renew_statements         []
revocation_statements    []
rollback_statements      []
```

Generate a dynamic database credential

To create a new dynamic credential, you need to perform a read against the credential endpoint for the role.

```shell
$ vault read database-app1/creds/pg-ro
Key                Value
---                -----
lease_id           database-app1/creds/pg-ro/6XNimuvRkRgQqMoKHUdXigII
lease_duration     1h
lease_renewable    true
password           -upnMCdrAyBdl0MwRjcS
username           v-root-pg-ro-55zd1HKtF4qzsqQiEklO-1712362373
```

List leases and investigate a lease generated by a database role

Note that the key listed by the following command matches the last segment of the lease_id returned above. In this example, there is only one active lease:

```shell
$ vault list sys/leases/lookup/database-app1/creds/pg-ro
Keys
----
6XNimuvRkRgQqMoKHUdXigII
```

```shell
$ vault lease lookup database-app1/creds/pg-ro/6XNimuvRkRgQqMoKHUdXigII
Key             Value
---             -----
expire_time     2024-04-05T20:12:53.168521-05:00
id              database-app1/creds/pg-ro/6XNimuvRkRgQqMoKHUdXigII
issue_time      2024-04-05T19:12:53.168518-05:00
last_renewal    <nil>
renewable       true
ttl             50m47s
```

Revoke leases

The following command will revoke one lease, deleting its related database credential:

```shell
$ vault lease revoke database-app1/creds/pg-ro/6XNimuvRkRgQqMoKHUdXigII
```

By comparison, this command will revoke all leases under the pg-ro role (and delete all related database credentials):

```shell
$ vault lease revoke -prefix database-app1/creds/pg-ro
```

Even more destructively, the following command deletes all credentials for all dynamic roles under the database-app1 mount path:

```shell
$ vault lease revoke -prefix database-app1/creds/
```
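Broader prefixes affect more leases. The following Java sketch shows which lease IDs each of the three commands above would touch. It is an illustrative model, not Vault code, and the class name is hypothetical. It assumes that a prefix matches on whole path segments, as if a trailing "/" were implied. Under that assumption, "creds/pg-ro" would cover "creds/pg-ro/<id>" but not a hypothetical "creds/pg-ro-reporting/<id>". Check this assumption against your Vault version before relying on it.

```java
import java.util.List;
import java.util.stream.Collectors;

/**
 * Hypothetical model of prefix revocation scope. Assumption: the prefix is matched on
 * whole path segments, and a prefix equal to a full lease ID matches that lease only.
 */
final class PrefixRevocation {
    static List<String> affected(List<String> leaseIds, String prefix) {
        String segmentPrefix = prefix.endsWith("/") ? prefix : prefix + "/";
        return leaseIds.stream()
            .filter(id -> id.equals(prefix) || id.startsWith(segmentPrefix))
            .collect(Collectors.toList());
    }
}
```

Listing the affected leases before revoking is a cheap safeguard against running the mount-wide command by accident.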

Static Roles

Static roles are used to rotate the secret password component of a database login credential, while maintaining the same username portion. Using static roles enables Vault operators to rotate passwords without needing to create and delete users from the database. This can be important for performance at scale, since creating and deleting users can cause table locks impacting database performance. Static roles can be rotated based on a cron-like schedule, and limited to a window of time.

Configure a database static role

The database user for a static role must already exist in the remote database. Vault rotates its password immediately when the static role is configured.

The Vault “root” user must have RBAC that allows it to ALTER the static role to change the password. Then, set up a file with rotation statements:

```shell
$ tee rotation.sql <<EOF
ALTER USER "{{name}}" WITH PASSWORD '{{password}}';
EOF
```

Then register the static role with Vault:

```shell
$ vault write database-app1/static-roles/pg-static \
    db_name=postgresql \
    rotation_statements=@rotation.sql \
    username="vault_static_app1" \
    rotation_period=86400
```

Read the static role’s password, which will stay the same until it is rotated:

```shell
$ vault read database-app1/static-creds/pg-static
Key                    Value
---                    -----
last_vault_rotation    2024-04-05T20:07:45.462742-05:00
password               B8uk8rmn6cFiIvOZ-trN
rotation_period        24h
ttl                    23h59m47s
username               vault_static_app1
```
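The ttl field in this output counts down to the next rotation. The following Java sketch shows that arithmetic for a role configured with rotation_period. It uses a hypothetical class, StaticRotation, and is not Vault code. It assumes the next rotation is due one period after last_vault_rotation. It does not model the cron-like schedule option mentioned above.

```java
import java.time.Duration;
import java.time.OffsetDateTime;

/**
 * Hypothetical sketch for period-based static roles: the next rotation is due one
 * rotation period after the last rotation, and ttl is the time left until then.
 */
final class StaticRotation {
    static OffsetDateTime nextRotation(OffsetDateTime lastRotation, Duration rotationPeriod) {
        return lastRotation.plus(rotationPeriod);
    }

    static Duration remainingTtl(OffsetDateTime lastRotation, Duration rotationPeriod, OffsetDateTime now) {
        Duration left = Duration.between(now, nextRotation(lastRotation, rotationPeriod));
        // Once the rotation is due, report zero rather than a negative duration.
        return left.isNegative() ? Duration.ZERO : left;
    }
}
```

Under this model, the ttl of 23h59m47s in the output corresponds to a read made 13 seconds after last_vault_rotation: 24h minus 13s.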

Other database types

AWS DynamoDB - AWS Secrets Engine

While DynamoDB is not supported by the database secrets engine, you can use the AWS secrets engine to provision dynamic credentials capable of accessing DynamoDB.

More information can be found here: https://developer.hashicorp.com/vault/docs/secrets/databases#aws-dynamodb

IBM DB2

Access to Db2 is managed by facilities that reside outside the Db2 database system. By default, user authentication is handled by a security facility that relies on operating-system-based authentication of users and passwords. This means that the lifecycle of Db2 user identities cannot be managed with SQL statements, which makes credential lifecycle management operationally challenging.

To provide flexibility in accommodating authentication needs, Db2 ships with authentication plugin modules for LDAP.

More information about using Vault to manage DB2 credentials can be found here: https://developer.hashicorp.com/vault/tutorials/db-credentials/ibm-db2-openldap

Application considerations for dynamic credentials

Applications will need to be aware of password rotation in certain cases, especially for long-running jobs and applications.

For example, in the case of Spring Boot applications, you would do the following:

- Configure Spring Cloud Vault: Set up Spring Cloud Vault in your application.properties or application.yml file, specifying the necessary configurations to connect to Vault and access the dynamic secrets.

- Enable refresh scope: For any beans that should be refreshed when the configuration changes, use the @RefreshScope annotation. This might include your DataSource bean or any configuration properties class that holds your database credentials.

- Enable Actuator refresh endpoint: Make sure the Actuator's refresh endpoint is enabled and exposed. In Spring Boot 2.x, this might involve setting properties in your application.properties or application.yml, like management.endpoints.web.exposure.include: refresh

- Create a refresh mechanism: Implement a mechanism to call the /actuator/refresh endpoint whenever the credentials in Vault are rotated. This could be a scheduled task within your application, an external script, or a webhook triggered by Vault (if Vault is configured to send notifications on credential rotation).

- Secure the Actuator endpoints: Ensure that your Actuator endpoints, including the /refresh endpoint, are secured to prevent unauthorized access. Use Spring Security to restrict access to these endpoints.

- Refresh DataSource credentials: Ensure your DataSource or the component that manages the database connection is designed to handle configuration changes dynamically. This might involve using a DataSource proxy or custom logic to reinitialize the DataSource with new credentials upon refresh. Here is a simplified example demonstrating how you might configure a DataSource bean to use dynamic credentials that can be refreshed via Actuator:

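```java
import javax.sql.DataSource;

import org.springframework.beans.factory.annotation.Value;
import org.springframework.boot.jdbc.DataSourceBuilder;
import org.springframework.cloud.context.config.annotation.RefreshScope;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
public class DataSourceConfig {

    // @RefreshScope makes Spring rebuild this bean with the current property
    // values after a refresh event (for example, a POST to /actuator/refresh).
    @Bean
    @RefreshScope
    public DataSource dataSource(
            @Value("${spring.datasource.url}") String url,
            // In this setup, Spring Cloud Vault supplies the username and password.
            @Value("${spring.datasource.username}") String username,
            @Value("${spring.datasource.password}") String password) {
        return DataSourceBuilder.create()
                .url(url)
                .username(username)
                .password(password)
                .build();
    }
}
```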
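For the "scheduled task" option in the refresh mechanism described above, the following plain-Java sketch triggers a refresh before a lease runs out. It is a hypothetical sketch, not part of Spring or Vault. The class name and the two-thirds threshold are assumptions. The refresh action is supplied by the caller, for example code that sends a POST to /actuator/refresh.

```java
import java.time.Duration;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;

/**
 * Hypothetical scheduler that runs a caller-supplied refresh action once two thirds
 * of the lease duration has elapsed (the 2/3 threshold is an assumed safety margin).
 */
final class RefreshScheduler {
    private final ScheduledExecutorService executor = Executors.newSingleThreadScheduledExecutor();

    /** Delay before refreshing: two thirds of the lease duration. */
    static Duration refreshDelay(Duration leaseDuration) {
        return leaseDuration.multipliedBy(2).dividedBy(3);
    }

    ScheduledFuture<?> scheduleRefresh(Duration leaseDuration, Runnable refreshAction) {
        long delayMillis = refreshDelay(leaseDuration).toMillis();
        return executor.schedule(refreshAction, delayMillis, TimeUnit.MILLISECONDS);
    }

    void shutdown() {
        executor.shutdown();
    }
}
```

For the 1-hour lease in the earlier examples, the refresh would fire after 40 minutes. That leaves a third of the lease for the new DataSource to come up before the old credentials expire.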