Services along with Consul client agents on VMs

Organizations may run hundreds of services across thousands of instances. Managing such a dynamic environment requires automation. HashiCorp recommends an immutable infrastructure approach integrated with CI/CD tools.

Immutable infrastructure approach

This immutable infrastructure approach is based on the following structure:

- Use an application image: All application dependencies are baked into this image. These can include the latest approved version of the OS (with the latest security patches), the application binaries and configuration files, the Consul agent binary and its configuration files, the application service registration, health check configurations, security agents, scanners, and logging.

- Use a configuration template: The template combines the image above with other settings so that compute nodes (VMs) can be launched from it. On AWS, this is a launch template.

- Use a scaling group: The scaling group uses the configuration template above to spin up compute nodes according to its settings. On AWS, this is an Auto Scaling group.

As new versions of the application become available, CI pipelines can update the configuration templates to use the latest images. A CD pipeline can then deploy the new version by refreshing the scaling groups.

Image management

HashiCorp recommends HCP Packer to automate the creation and management of images. Packer builds the application image from the OS golden image. The application image includes:

- IT/Security-approved OS (from the golden image),

- Application binaries and configuration files,

- Consul agent binaries and configuration files,

- Application service registration, and

- Health-check configurations.

CI/CD implementation

To demonstrate a CI/CD implementation, you will deploy an example service (app-1). The following tools will be used:

| Service | Actor |
|---|---|
| Cloud provider | AWS |
| Git repository | GitHub |
| Image builder | Packer |
| Image artifact registry | HCP Packer |
| Image registry | AWS AMI |
| Infrastructure code | HCP Terraform |
| CI/CD | GitHub Actions, Vault GitHub Actions |
| GitHub runner | Self-hosted on AWS EC2 |
| Secrets manager | HashiCorp Vault |

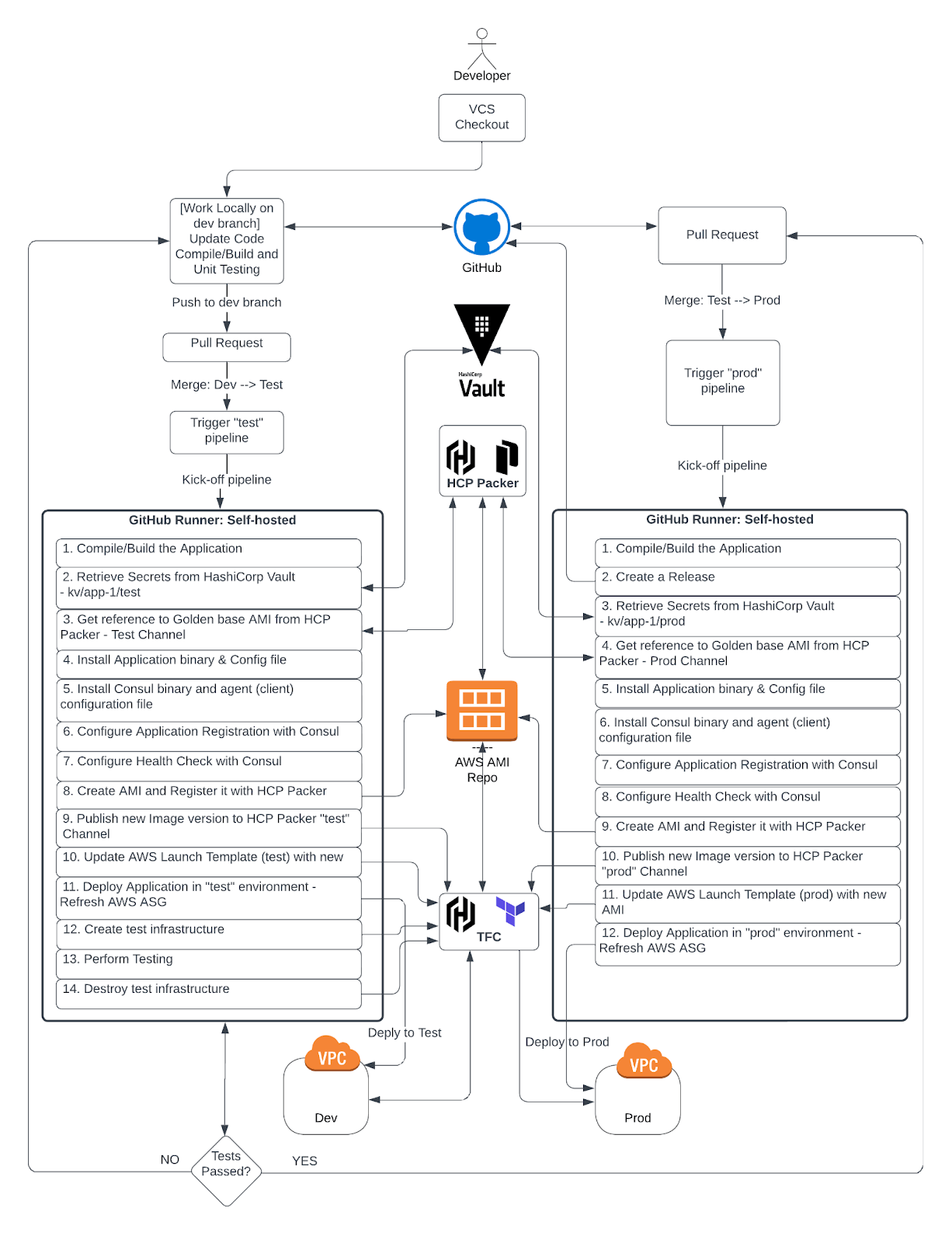

The diagram above presents a high-level overview of the CI/CD workflow.

CI/CD workflow

The following steps and code snippets show how to implement the workflow in the diagram above.

Check out a "dev" branch

Store application code in a version-controlled repository, such as one hosted on GitHub. Work that introduces new features, enhancements, or bug fixes should always start on a "dev" branch. Use a local IDE to compile and test the code, then push to the remote "dev" branch once the code is complete.

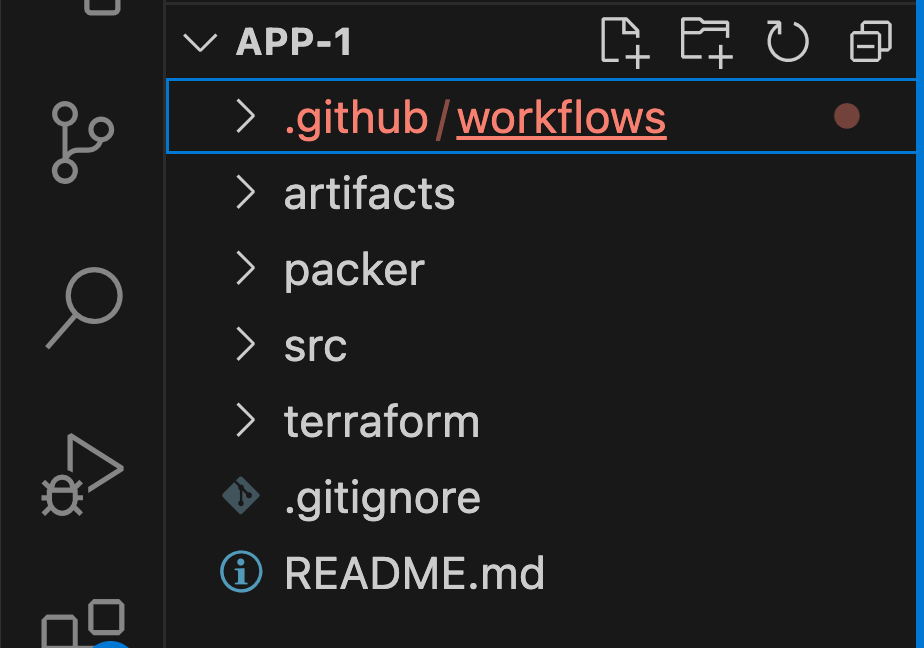

The structure of your code directory should be a series of folders containing appropriately separated files:

- `.github/workflows/` contains code pertinent to the CI/CD workflows.

- `artifacts/` contains the compiled application binaries.

- `packer/` contains the Packer configuration that creates the images and publishes them to the HCP Packer artifact registry.

- `src/` holds the application source code.

- `terraform/` holds the Terraform configuration files that create or update the template, along with any infrastructure for the application test environment.
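As a minimal sketch, the layout above could be scaffolded with a small shell function. The function name `scaffold_repo` and the `app-1` root directory are hypothetical. The subdirectories under `packer/` and `terraform/` are the ones this walkthrough references in later steps, and your repository may organize them differently.

```shell
#!/bin/sh
# Sketch: create the repository layout described above under a root directory.
# scaffold_repo is a hypothetical helper, not part of any HashiCorp tooling.
scaffold_repo() {
  root="${1:?usage: scaffold_repo <root-dir>}"
  for dir in \
    .github/workflows \
    artifacts \
    packer/config/scripts \
    packer/config/templates \
    src \
    terraform/assign-image-to-channel \
    terraform/launch-template \
    terraform/asg \
    terraform/qa/infra \
    terraform/qa/testing
  do
    # mkdir -p creates missing parents and succeeds if the directory exists
    mkdir -p "$root/$dir" || return 1
  done
}

# Usage: scaffold_repo ./app-1
```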

Pull request process

The developer responsible for the new feature creates a pull request for code review. When the code is approved, merge the "dev" branch into a "test" branch. When the code is merged, the CI/CD workflow should be kicked off automatically.
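The gating this implies (run only for merged pull requests, and choose the environment from the target branch) can be sketched in shell. The function name `resolve_target_env` is hypothetical. The mapping of "test" to the test environment and "main" to "prod" follows the branch and channel names used in this guide. In GitHub Actions, the two inputs would typically come from `github.event.pull_request.merged` and `github.base_ref`.

```shell
#!/bin/sh
# Sketch: decide whether the pipeline should run and for which environment.
# resolve_target_env is a hypothetical helper.
#   $1: "true" if the pull request was merged, anything else if merely closed
#   $2: target (base) branch of the pull request
# Prints the environment name and returns 0, or returns 1 to skip the run.
resolve_target_env() {
  merged="$1"
  base_branch="$2"

  # A pull request closed without merging must not trigger a deployment.
  [ "$merged" = "true" ] || return 1

  case "$base_branch" in
    test) echo "test" ;;
    main) echo "prod" ;;
    *)    return 1 ;;   # any other branch is outside the pipeline
  esac
}

# Usage (PR_MERGED and BASE_BRANCH are placeholder variables):
#   if target="$(resolve_target_env "$PR_MERGED" "$BASE_BRANCH")"; then
#     echo "deploying to $target"
#   fi
```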

CI pipeline kickoff

Compile/build the application

Build the application from the "test" branch. Save the application binaries and configuration file as a zip file (`app-1.zip`) in the `artifacts/` directory.

`.github/workflows/app-1.yaml`

```yaml
name: app-1 workflow

permissions:
  contents: write
  id-token: write

on:
  pull_request:
    types: [ closed ]
    branches: [ test, main ]
...
...
  compile_and_build_app:
    if: github.event.pull_request.merged == true
    runs-on: self-hosted
    steps:
      - name: Checkout
        uses: actions/checkout@v4
        with:
          ref: 'refs/heads/${{ env.branch }}'
      - name: build
        run: |
          cd src
          make ${{ vars.APP_NAME }} ${{ env.branch }}
...
...
```

Retrieve secrets from HashiCorp Vault

In this example, all secrets in Vault's KV secrets engine path `kv/data/app-1/test` are automatically saved as environment variables for the GitHub Actions workflow. Secrets include `HCP_CLIENT_ID`, `HCP_CLIENT_SECRET`, and `TF_API_TOKEN`.

Refer to the "Vault as secrets management for Consul" tutorial for steps on creating and storing a Vault token.

`.github/workflows/app-1.yaml`

```yaml
...
...
      - name: Retrieve Secrets from HashiCorp Vault
        id: vault
        uses: hashicorp/vault-action@v2
        with:
          url: ${{ env.vault_url }}
          # Skipping TLS verification is suitable for a demo only;
          # verify the Vault certificate in production.
          tlsSkipVerify: true
          token: ${{ secrets.VAULT_TOKEN }}
          secrets: |
            ${{ env.vault_secrets_path }}/data/app-1/${{ env.branch }} * ;
...
...
```

Get the golden OS image from HCP Packer

Extract the AMI ID from the golden OS image and use it as the source image to create a new application image.

`packer/app-1.pkr.hcl`

```hcl
...
...
// Get image metadata from HCP Packer to use as the source image to create the new AMI
data "hcp-packer-artifact" "source-image" {
  bucket_name         = var.packer_source_image_bucket
  platform            = var.packer_source_image_platform
  region              = var.region
  version_fingerprint = "${data.hcp-packer-version.consul-client-source.fingerprint}"
}

data "hcp-packer-version" "consul-client-source" {
  bucket_name  = var.packer_source_image_bucket
  channel_name = var.env
}

source "amazon-ebs" "amazon-linux" {
  ami_name      = "${var.env}-${var.service_name}-${var.service_version}"
  instance_type = var.instance_type
  region        = var.region
  source_ami    = data.hcp-packer-artifact.source-image.external_identifier
  ssh_username  = var.ssh_username
}
...
...
```

Install the application binary and config file

Run Packer, passing the branch name "test" (the value of `env.branch`) as the Packer variable `env`:

`.github/workflows/app-1.yaml`

```yaml
...
...
      - name: packer
        run: |
          cd packer
          packer init .
          packer build -var "env=${{ env.branch }}" .
...
...
```

The application zip file is copied from the `artifacts/` directory to the application image and extracted into the appropriate directory.

`packer/app-1.pkr.hcl`

```hcl
...
...
build {
  ...
  ...
  sources = ["source.amazon-ebs.amazon-linux"]

  // Upload Application binary
  provisioner "file" {
    source      = "../artifacts/${var.env}/latest/${var.service_name}.zip"
    destination = "~/${var.service_name}.zip"
  }

  // Install application
  provisioner "shell" {
    inline = [
      "sudo unzip ${var.service_name}.zip",
      "sudo chmod a+x ${var.service_name}",
      "sudo cp ${var.service_name} /usr/bin/.",
      "sudo cp ${var.service_name}.service /etc/systemd/system/.",
      "sudo systemctl enable ${var.service_name}"
    ]
  }
  ...
  ...
```

Install the Consul binary and agent configuration file

Install the Consul agent with the `install-consul.sh` script, and place the Consul configuration template at `/etc/consul.d/consul.hcl.tpl`.

`packer/app-1.pkr.hcl`

```hcl
...
...
  // Install Consul Client
  provisioner "shell" {
    environment_vars = [
      "CONSUL_VERSION=${var.consul_version}"
    ]
    script = "./config/scripts/install-consul.sh"
  }

  // Upload Consul config file
  provisioner "file" {
    source      = "./config/templates/consul-client.hcl.tpl"
    destination = "/tmp/consul-client.hcl.tpl"
  }

  // Copy Consul Client config file
  provisioner "shell" {
    inline = [
      "sudo -u consul bash -c 'cp /tmp/consul-client.hcl.tpl /etc/consul.d/consul.hcl.tpl'"
    ]
  }
...
...
```

Use the provided bash script or another configuration management tool of your choosing to install the Consul binaries.

`packer/config/scripts/install-consul.sh`

```bash
#!/bin/bash
sudo yum -y update
sudo yum install -y yum-utils shadow-utils
sudo yum-config-manager --add-repo https://rpm.releases.hashicorp.com/AmazonLinux/hashicorp.repo
sudo yum -y install consul-${CONSUL_VERSION}
```

`packer/config/templates/consul-client.hcl.tpl`

```hcl
# datacenter
datacenter = "${CONSUL_DC}"

# data_dir
data_dir = "/opt/consul"

# server
server = false

# Bind addr
bind_addr = "{{ GetPrivateInterfaces | include \"network\" \"10.0.0.0/8\" | attr \"address\" }}"

encrypt = "${GOSSIP_KEY}"

# ACL
acl = {
  enabled                  = true
  default_policy           = "deny"
  enable_token_persistence = true
  tokens = {
    agent = "${AGENT_TOKEN}"
  }
}

# Configure TLS
verify_incoming        = true
verify_outgoing        = true
verify_server_hostname = true
ca_file                = "consul-agent-ca.pem"

auto_encrypt = {
  tls = true
}

# retry_join (Cloud Auto-Join)
retry_join = ["provider=aws tag_key=${CONSUL_AUTO_JOIN_TAG_KEY} tag_value=${CONSUL_AUTO_JOIN_TAG_VALUE}"]
```

Configure application registration with Consul

Use the provided templates to register the application with Consul. Make appropriate changes to the files so that they match your own environment configuration.

`packer/app-1.pkr.hcl`

```hcl
...
...
  // Upload App Service registration file to Consul Node
  provisioner "file" {
    source      = "./config/templates/service-registration.hcl.tmpl"
    destination = "/tmp/service-registration.hcl.tmpl"
  }

  // Copy Service Registration Config to Consul config dir
  provisioner "shell" {
    inline = [
      "sudo -u consul bash -c 'cp /tmp/service-registration.hcl.tmpl /etc/consul.d/service-registration.hcl.tmpl'"
    ]
  }
...
...
```

`packer/config/templates/service-registration.hcl.tmpl`

```hcl
service {
  name  = "${SERVICE_NAME}"
  port  = ${SERVICE_PORT}
  // The token is a string value, so it is quoted (as in the health check template)
  token = "${SERVICE_TOKEN}"
}
```

Configure health checks with Consul

Copy the application health check configuration file to the Consul configuration directory.

`packer/app-1.pkr.hcl`

```hcl
...
...
  // Copy App-1 Service Health Check template file to Consul node
  provisioner "file" {
    source      = "./config/templates/health-check.hcl.tmpl"
    destination = "/tmp/health-check.hcl.tmpl"
  }

  // Register Service Health Check
  // The copy source must match the upload destination above (.tmpl)
  provisioner "shell" {
    inline = [
      "sudo -u consul bash -c 'cp /tmp/health-check.hcl.tmpl /etc/consul.d/health-check.hcl.tpl'"
    ]
  }
...
...
```

The health check configuration template follows. Adjust it to match your environment.

`packer/config/templates/health-check.hcl.tmpl`

```hcl
check = {
  id                = "${SERVICE_NAME}-api"
  name              = "${SERVICE_NAME}/health"
  http              = "http://localhost:${SERVICE_PORT}/health"
  tls_skip_verify   = true
  disable_redirects = true
  interval          = "10s"
  timeout           = "1s"
  token             = "${SERVICE_TOKEN}"
}
```

Create an AMI and register it with HCP Packer

Set the HCP Packer registry configuration with options such as `bucket_name`, `bucket_labels`, and `build_labels`.

`packer/app-1.pkr.hcl`

```hcl
build {
  // Set HCP Packer
  hcp_packer_registry {
    bucket_name = "${var.service_name}"
    description = "${var.service_name} Image with Consul Client."

    bucket_labels = {
      "owner"               = "${var.service_name}-DevTeam"
      "os"                  = "AmazonLinux"
      "AmazonLinux-version" = "AL2"
      "Hashi-Product"       = "Consul Client"
      "Application"         = "${var.service_name}"
    }

    build_labels = {
      "build-time"   = timestamp()
      "build-source" = basename(path.cwd)
    }
  }
...
...
```

Assign a new image to the HCP Packer "test" channel

Install Terraform on a self-hosted runner. Execute Terraform, which will assign the image to the "test" channel in HCP Packer.

`.github/workflows/app-1.yaml`

```yaml
...
...
      # Install the latest version of Terraform CLI and
      # Configure the Terraform CLI configuration file with a HCP Terraform user API token
      - name: Setup-Terraform
        uses: hashicorp/setup-terraform@v3
        with:
          cli_config_credentials_token: ${{ env.TF_API_TOKEN }}

      - name: assign-image-to-channel
        run: |
          cd terraform/assign-image-to-channel
          terraform init
          terraform apply -var="service-name=${{ vars.APP_NAME }}" -var="env=${{ env.branch }}" -auto-approve
...
...
```

Update the "test" AWS launch template with the new AMI

Run the Terraform configuration that updates the launch template with the new AMI retrieved from the HCP Packer "test" channel of the "app-1" bucket.

`.github/workflows/app-1.yaml`

```yaml
...
...
    needs: [ assign-image-to-packer-channel ]
    name: deploy
    runs-on: self-hosted
    steps:
      - name: terraform update aws launch template
        run: |
          cd terraform/launch-template/${{ env.branch }}
          # Debug aid: prints the environment to the job log; remove it if that could expose sensitive values
          env
          echo "Running terraform.... creating launch template..."
          terraform init
          terraform apply -auto-approve
...
...
```

Your Terraform configuration for the launch template should resemble the following:

`terraform/launch-template/test/lt-app-1.tf`

```hcl
...
...
data "hcp_packer_artifact" "app-1-image" {
  bucket_name  = "app-1"
  platform     = "aws"
  region       = "us-east-1"
  channel_name = "test"
}

resource "aws_launch_template" "lt" {
  name          = "lt_app-1-test"
  image_id      = data.hcp_packer_artifact.app-1-image.external_identifier
  instance_type = "${var.instance_type}"
  key_name      = "${var.key_pair_name}"

  iam_instance_profile {
    name = "consul-auto-join"
  }

  network_interfaces {
    security_groups = var.security_groups
  }

  user_data = filebase64("./config/app-1.sh")
}
...
...
```

Deploy the application to the "test" environment - refresh AWS ASG

This executes an AWS CLI command to refresh the autoscaling group. For more details, refer to "Introducing Instance Refresh for EC2 Auto Scaling" and "Start an instance refresh using the AWS Management Console or AWS CLI".

`.github/workflows/app-1.yaml`

```yaml
...
...
      - name: Deploy new version - refresh ASG
        run: |
          cd terraform/asg/${{ env.branch }}
          aws autoscaling start-instance-refresh --cli-input-json file://config.json
...
...
```

You can customize various preferences that affect the instance refresh. For more information, refer to "Understand the default values for an instance refresh".

`terraform/asg/test/config.json`

```json
{
  "AutoScalingGroupName": "asg-app-1-test",
  "Preferences": {
    "InstanceWarmup": 60,
    "MinHealthyPercentage": 50,
    "AutoRollback": false,
    "ScaleInProtectedInstances": "Ignore",
    "StandbyInstances": "Terminate"
  }
}
```

Create test infrastructure

Create the Terraform configuration files needed to spin up a test environment for your application. This example assumes that they are in the `terraform/qa/infra` directory.

`.github/workflows/app-1.yaml`

```yaml
...
...
    if: ${{ github.ref_name == 'test' }} # Run test in test only
    name: app-testing
    runs-on: self-hosted
    steps:
...
...
      - name: Create Test environment
        run: |
          cd terraform/qa/infra
          terraform init
          terraform apply -auto-approve
...
...
```

Perform testing

Perform the necessary testing of your application. This example assumes that the testing scripts are in the `terraform/qa/testing` directory.

`.github/workflows/app-1.yaml`

```yaml
...
...
      - name: Testing
        if: ${{ github.ref_name == 'test' }}
        run: |
          cd terraform/qa/testing
          ./run-tests.sh
...
...
```

Destroy the test infrastructure

After all tests are complete and passing, destroy the test infrastructure.

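`.github/workflows/app-1.yaml`

```yaml
...
...
      - name: Destroy Test env
        if: ${{ github.ref_name == 'test' }}
        run: |
          cd terraform/qa/infra
          # -auto-approve skips the interactive confirmation, which a CI runner cannot answer
          terraform destroy -auto-approve
...
...
```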
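The steps above run the tests and the teardown as separate workflow steps, and in GitHub Actions a failed step causes later steps to be skipped unless they carry a condition such as `if: always()`. As one hedged alternative, a single shell wrapper can run the tests and then tear down regardless of the result, while still reporting the test status. The helper name `run_tests_then_teardown` is hypothetical, and destroying the environment after a failed run is an assumption that differs from the pass-only teardown described above.

```shell
#!/bin/sh
# Sketch: run a test command, always run the teardown command afterwards,
# and return the test command's exit status. Hypothetical helper.
#   $1: command that runs the tests
#   $2: command that destroys the test infrastructure
run_tests_then_teardown() {
  test_cmd="$1"
  teardown_cmd="$2"

  # Capture the status with "||" so the wrapper also works under "set -e".
  test_status=0
  sh -c "$test_cmd" || test_status=$?

  # Tear down regardless of the test result so failed runs do not leak resources.
  sh -c "$teardown_cmd" || echo "warning: teardown failed" >&2

  return "$test_status"
}

# Usage (directories as in this guide):
#   run_tests_then_teardown \
#     "cd terraform/qa/testing && ./run-tests.sh" \
#     "cd terraform/qa/infra && terraform destroy -auto-approve"
```

Keeping the test status as the return value means the job still fails when the tests fail, so the rest of the pipeline does not proceed on a broken build.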

Check test results

If any tests fail, a developer should fix the underlying issues and rerun the workflow until all tests pass. Once all of the tests pass, the CI/CD workflow continues.

Pull request procedure

Create a new pull request, this time to review and merge code from the "test" branch into a "main" branch.

After peer review is complete, approve the new changes. Then, merge the branch into "main", which will automatically kick off the workflow you walked through earlier, this time utilizing the "main" branch.

Kick off the CI pipeline

The CI/CD workflow steps are similar to what is discussed above, but this time:

- The "main" branch is being used,

- An additional "Create a release" step is being executed, and

- Test-related steps are not required, and thus not executed.

A sequence of steps will take place:

Compile/Build the application.

Create a release.

The 'Create a release' step triggers a release. This creates a tag and uploads the application artifacts to GitHub as a release.

`.github/workflows/app-1.yaml`

```yaml
...
...
  release_app:
    needs: [ compile_and_build_app ]
    if: ${{ github.ref_name == 'main' }} # Execute only when merging to main branch
    runs-on: self-hosted
    steps:
      - name: Create a Release & Upload artifacts
        id: create_release
        uses: ncipollo/release-action@v1
        with:
          tag: v-${{ github.run_number }}
          name: Release v-${{ github.run_number }}
          artifacts: "./artifacts/${{ env.branch }}/latest/${{ vars.APP_NAME }}.zip"
          makeLatest: "true"
...
...
```

Retrieve secrets from HashiCorp Vault (`kv/app-1/prod`).

Get a reference to the golden OS image from HCP Packer - 'prod' channel.

Install the application binary and configuration file.

Install the Consul binary and agent configuration file.

Configure application registration with Consul.

Configure health checks with Consul.

Create an AMI and register it with HCP Packer.

Assign the new image to HCP Packer - 'prod' channel.

Update the "prod" AWS launch template with the new AMI.

Deploy your application in the "prod" environment - refresh AWS ASG.
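`aws autoscaling start-instance-refresh` returns as soon as the refresh is requested, so a finished pipeline does not by itself mean the new instances are in service. A minimal sketch of waiting for the outcome follows. The helper name `wait_for_refresh`, the polling defaults, and the choice of which statuses count as failures are assumptions. The status command is passed in as a string so that the function itself does not depend on the AWS CLI.

```shell
#!/bin/sh
# Sketch: poll an instance refresh until it reaches a terminal state.
# wait_for_refresh is a hypothetical helper.
#   $1: command that prints the current refresh status on stdout
#   $2: seconds between polls (default 30)
#   $3: maximum number of polls (default 60)
# Returns 0 on success, 1 on failure or timeout, 2 if the status command fails.
wait_for_refresh() {
  status_cmd="$1"
  interval="${2:-30}"
  max_polls="${3:-60}"
  polls=0

  while [ "$polls" -lt "$max_polls" ]; do
    refresh_status="$(sh -c "$status_cmd")" || return 2
    case "$refresh_status" in
      Successful)
        return 0 ;;
      Failed|Cancelled|RollbackSuccessful|RollbackFailed)
        echo "instance refresh ended with status: $refresh_status" >&2
        return 1 ;;
    esac
    # Any other status (for example Pending or InProgress) means keep waiting.
    polls=$((polls + 1))
    sleep "$interval"
  done

  echo "timed out waiting for instance refresh" >&2
  return 1
}

# Usage (ASG_NAME is a placeholder; substitute your own group name):
#   ASG_NAME="asg-app-1-test"
#   wait_for_refresh "aws autoscaling describe-instance-refreshes --auto-scaling-group-name $ASG_NAME --query 'InstanceRefreshes[0].Status' --output text"
```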

Upon successful completion of the pipeline, the application in production will be running the latest version of the application, deployed on the most recently approved version of the operating system. Any future updates to the application will automatically trigger this CI/CD pipeline, ensuring seamless updates to the production environment.