HCP Terraform Operator for Kubernetes

The HCP Terraform Operator leverages the CNCF operator framework to enable the management of HCP Terraform resources through Kubernetes Custom Resources.

The operator provides the following Custom Resources for managing HCP Terraform resources:

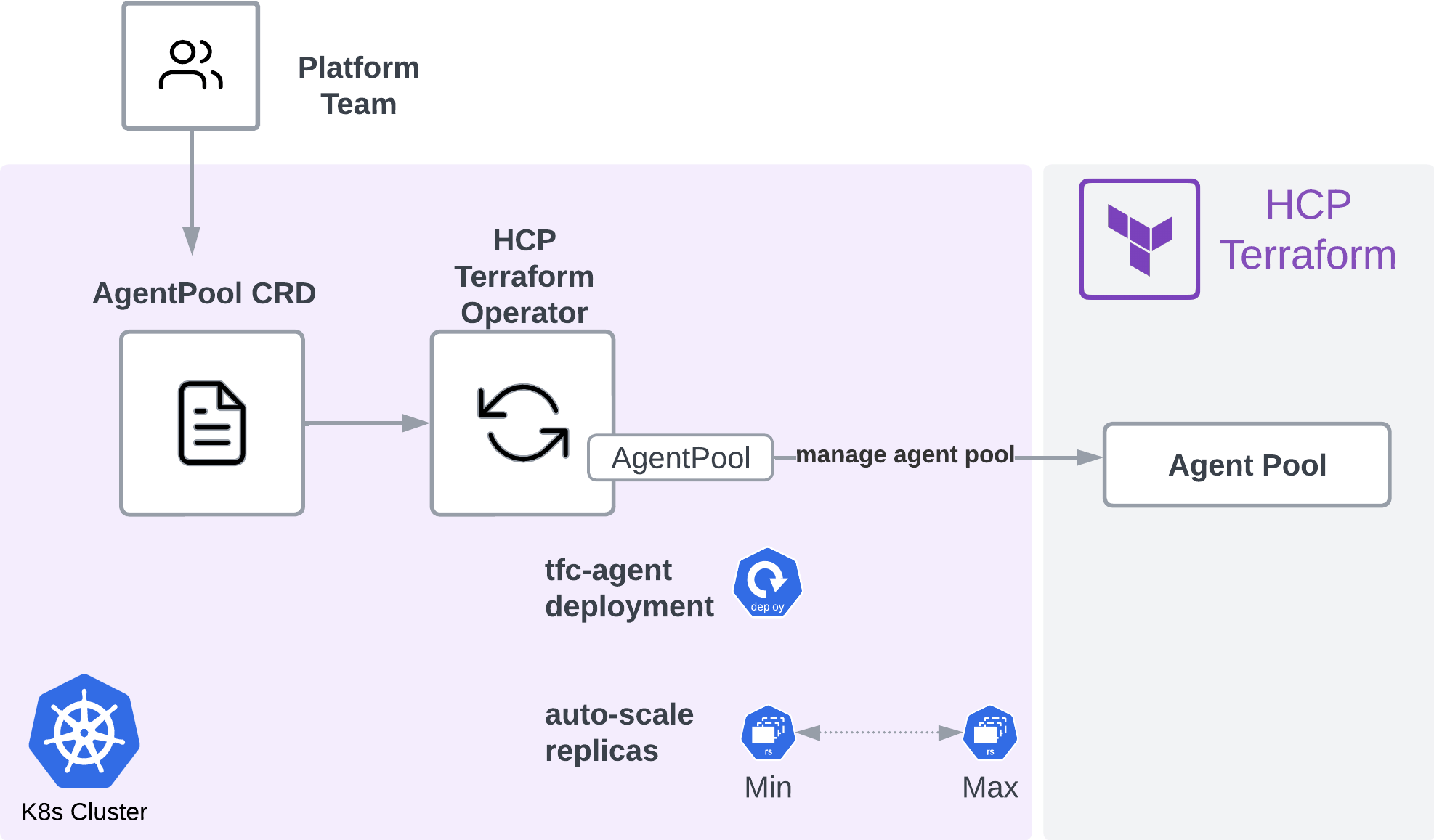

- AgentPool: This resource manages HCP Terraform Agent Pools and Agent Tokens. It also supports on-demand scaling operations for HCP Terraform agents.

- Module: Implements API-driven run workflows to execute Terraform configurations.

- Project: Manages HCP Terraform projects, which group related workspaces.

- Workspace: Manages HCP Terraform workspaces, which provide a structured environment for resource provisioning and state management.

HCP Terraform Operator Use Cases

The HCP Terraform Operator has two main use cases that we’ll describe in the rest of the section:

- Auto-scaling agent pools for HCP Terraform

- Self-service infrastructure via Kubernetes native consumption

Auto-scaling agent pools for HCP Terraform

One of the primary use cases of the HCP Terraform Operator is to let platform teams deploy and autoscale HCP Terraform agent pools on Kubernetes. The operator manages the lifecycle of agent deployment and registration through the AgentPool custom resource, and it can optionally monitor workspace run queues to scale agents between the configured minimum and maximum replica counts.

The operator can increase the number of agents up to the value defined in autoscaling.maxReplicas or the licensed limit, whichever is reached first. If there are no pending runs, the operator reduces the number of agents to the value specified in autoscaling.minReplicas within the timeframe set by autoscaling.cooldownPeriodSeconds. This mechanism deploys additional agents only when they are needed, which helps control costs.
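A minimal sketch of an AgentPool with autoscaling enabled, assuming the v1alpha2 API of the operator. The organization, pool, agent token, and workspace names are hypothetical placeholders, and field names such as targetWorkspaces should be checked against the AgentPool CRD reference for the operator version you install.

```yaml
# Sketch only: names are hypothetical; verify fields against the AgentPool CRD docs.
apiVersion: app.terraform.io/v1alpha2
kind: AgentPool
metadata:
  name: demo-agent-pool
  namespace: default
spec:
  organization: my-org              # hypothetical HCP Terraform organization
  token:
    secretKeyRef:
      name: tfc-operator            # Kubernetes secret holding the HCP Terraform token
      key: token
  name: demo-agent-pool             # agent pool name in HCP Terraform
  agentTokens:
    - name: demo-agent-token        # agent token the operator creates for the agents
  agentDeployment:
    replicas: 1
  autoscaling:
    targetWorkspaces:               # workspaces whose run queues are monitored
      - name: demo-workspace        # hypothetical workspace name
    minReplicas: 1                  # floor when no runs are pending
    maxReplicas: 5                  # ceiling, also capped by the licensed limit
    cooldownPeriodSeconds: 60       # wait time between scaling events
```

With this configuration the operator would run between one and five agents, subject to the licensed agent limit.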

Egress considerations

The network egress requirements for the HCP Terraform agent apply to agents deployed using the HCP Terraform Operator for Kubernetes. These requirements cover the following areas:

- Provider endpoint connectivity

- Access to the Terraform registry

- Access to Terraform releases

See the following HCP Terraform agent documentation sections for details on the agent’s connectivity requirements:

- HCP Terraform agent connectivity requirements with HCP Terraform

- HCP Terraform agent connectivity requirements with Terraform Enterprise

Recommendations

HashiCorp recommends:

- Using a dedicated Kubernetes cluster or logical node separation to host HCP Terraform agents.

- Using Kubernetes cluster autoscaling where available to manage cluster capacity dynamically. This recommendation applies particularly if you use autoscaling agent pools and there is high variance between peak and off-peak HCP Terraform run concurrency.

- Considering baseline run concurrency for health checks (drift detection and continuous validation) when setting the autoscaling minReplicas parameter.

- Scale testing your Kubernetes cluster up to your maximum intended concurrency to confirm that capacity is sufficient and that dynamic scaling works under load.
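One way to apply the dedicated-node and cluster-autoscaling recommendations is through the pod spec of the agent deployment. This sketch assumes that agentDeployment.spec accepts a standard Kubernetes PodSpec, that the agent container is named tfc-agent, and that your dedicated nodes carry a hypothetical workload=hcp-terraform-agents label and a matching NoSchedule taint, applied to the nodes separately.

```yaml
# Sketch only: the node label, taint, and container name are assumptions.
apiVersion: app.terraform.io/v1alpha2
kind: AgentPool
metadata:
  name: demo-agent-pool
spec:
  organization: my-org                    # hypothetical organization
  token:
    secretKeyRef:
      name: tfc-operator
      key: token
  name: demo-agent-pool
  agentTokens:
    - name: demo-agent-token
  agentDeployment:
    replicas: 1
    spec:                                 # standard Kubernetes PodSpec
      nodeSelector:
        workload: hcp-terraform-agents    # schedule only onto the dedicated nodes
      tolerations:
        - key: workload
          operator: Equal
          value: hcp-terraform-agents
          effect: NoSchedule              # tolerate the taint that keeps other pods off
      containers:
        - name: tfc-agent                 # assumed agent container name
          image: hashicorp/tfc-agent:latest
          resources:
            requests:                     # requests drive scheduling and autoscaler decisions
              cpu: "1"
              memory: 2Gi
```

Setting resource requests gives the cluster autoscaler the information it needs to add nodes when pending agent pods cannot be scheduled on the existing ones.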

Sizing autoscaling AgentPools

When sizing autoscaling agent pools for the HCP Terraform Operator, choose the minimum and maximum replica counts by balancing your run concurrency needs against cost efficiency. Base the minimum on baseline run concurrency, such as health checks. Base the maximum on predicted peak run concurrency and any constraints imposed by your HCP Terraform tier. Scale-test your Kubernetes cluster to confirm that it can handle the peak load of all replicas executing runs at the same time.
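A worked sizing example, using purely illustrative numbers that are assumptions rather than recommendations: health checks keep about 2 runs active at any time, predicted peak concurrency is 12 runs, the licensed agent limit is 10, and each agent pod requests 1 vCPU and 2 GiB of memory.

$$
\begin{aligned}
\text{minReplicas} &= C_{\text{baseline}} = 2 \\
\text{maxReplicas} &= \min(C_{\text{peak}},\ L_{\text{licensed}}) = \min(12,\ 10) = 10 \\
\text{CPU}_{\text{peak}} &= \text{maxReplicas} \times r_{\text{cpu}} = 10 \times 1\ \text{vCPU} = 10\ \text{vCPU} \\
\text{Memory}_{\text{peak}} &= \text{maxReplicas} \times r_{\text{mem}} = 10 \times 2\ \text{GiB} = 20\ \text{GiB}
\end{aligned}
$$

The resulting 10 vCPU and 20 GiB represent the agent load to exercise during scale testing; the cluster also needs headroom for the operator itself and other system workloads.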

AgentPool CRD

For detailed AgentPool CRD documentation, see: https://github.com/hashicorp/terraform-cloud-operator/blob/main/docs/agentpool.md

Self-service infrastructure via Kubernetes native consumption

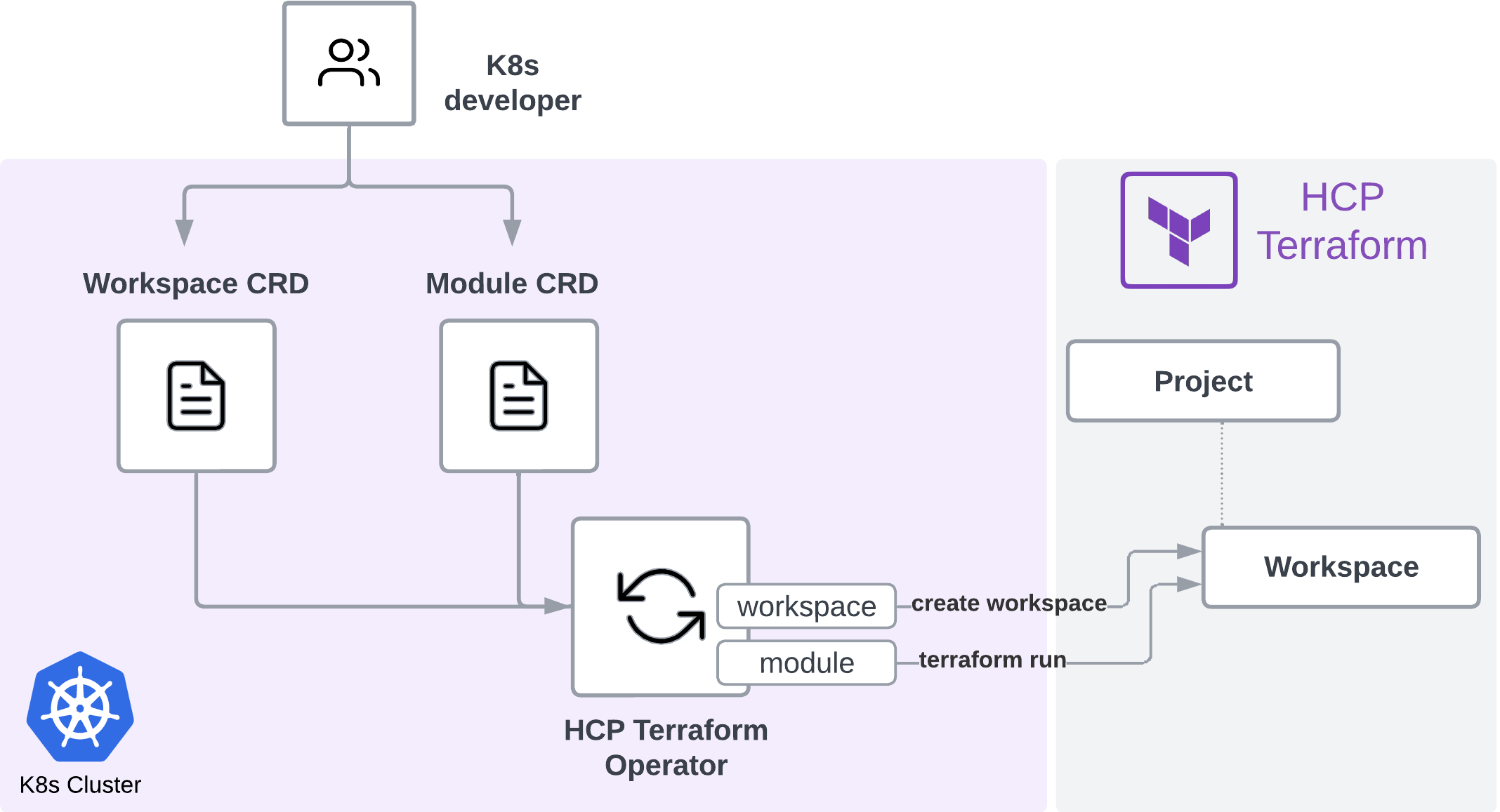

The HCP Terraform Operator for Kubernetes lets application developers define infrastructure configuration using Kubernetes configuration files and delegates reconciliation to HCP Terraform. Developers therefore do not need to learn the HashiCorp Configuration Language (HCL) to manage infrastructure.

The diagram above illustrates the consumption workflow for a Kubernetes developer: the Workspace CRD manages workspaces, and the Module CRD controls Terraform run execution. These Kubernetes resources are commonly consumed through templates in continuous delivery tools that support GitOps practices, such as Argo CD.

The Workspace controller lets Kubernetes developers create and consume workspaces within an HCP Terraform project, inside the guardrails that the project sets. Because workspaces inherit project-level configuration, key security controls such as cloud credentials and policy as code stay consistent, even though developers consume them through Kubernetes.

The Module controller executes runs against a workspace using the API-driven workflow and a Terraform module source. This separates producers from consumers: consumers in Kubernetes do not need to know HashiCorp Configuration Language (HCL) and can consume approved infrastructure patterns through Kubernetes CRDs and YAML. Modules can be sourced from the HCP Terraform private registry.
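A minimal sketch of a Module resource that runs a private registry module in an existing workspace through the API-driven workflow, assuming the v1alpha2 API. The organization, module source, version, workspace, variable, and output names are hypothetical. In this sketch, variables lists module inputs whose values are assumed to be defined as variables on the target workspace, and outputs lists module outputs that the operator is assumed to expose back in Kubernetes.

```yaml
# Sketch only: all names, versions, and sources are hypothetical.
apiVersion: app.terraform.io/v1alpha2
kind: Module
metadata:
  name: demo-module
spec:
  organization: my-org
  token:
    secretKeyRef:
      name: tfc-operator
      key: token
  module:
    source: app.terraform.io/my-org/demo/aws   # private registry module (hypothetical)
    version: "1.0.0"
  workspace:
    name: demo-workspace                        # workspace the run executes in
  variables:
    - name: bucket_name                         # module input; value assumed set on the workspace
  outputs:
    - name: bucket_arn                          # module output surfaced back in Kubernetes
```

Developers only edit this manifest; the module code itself stays with the producing team in the private registry.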

Recommendations

HashiCorp recommends:

- Using a single-tenant Kubernetes cluster when providing self-service infrastructure with the HCP Terraform Operator.

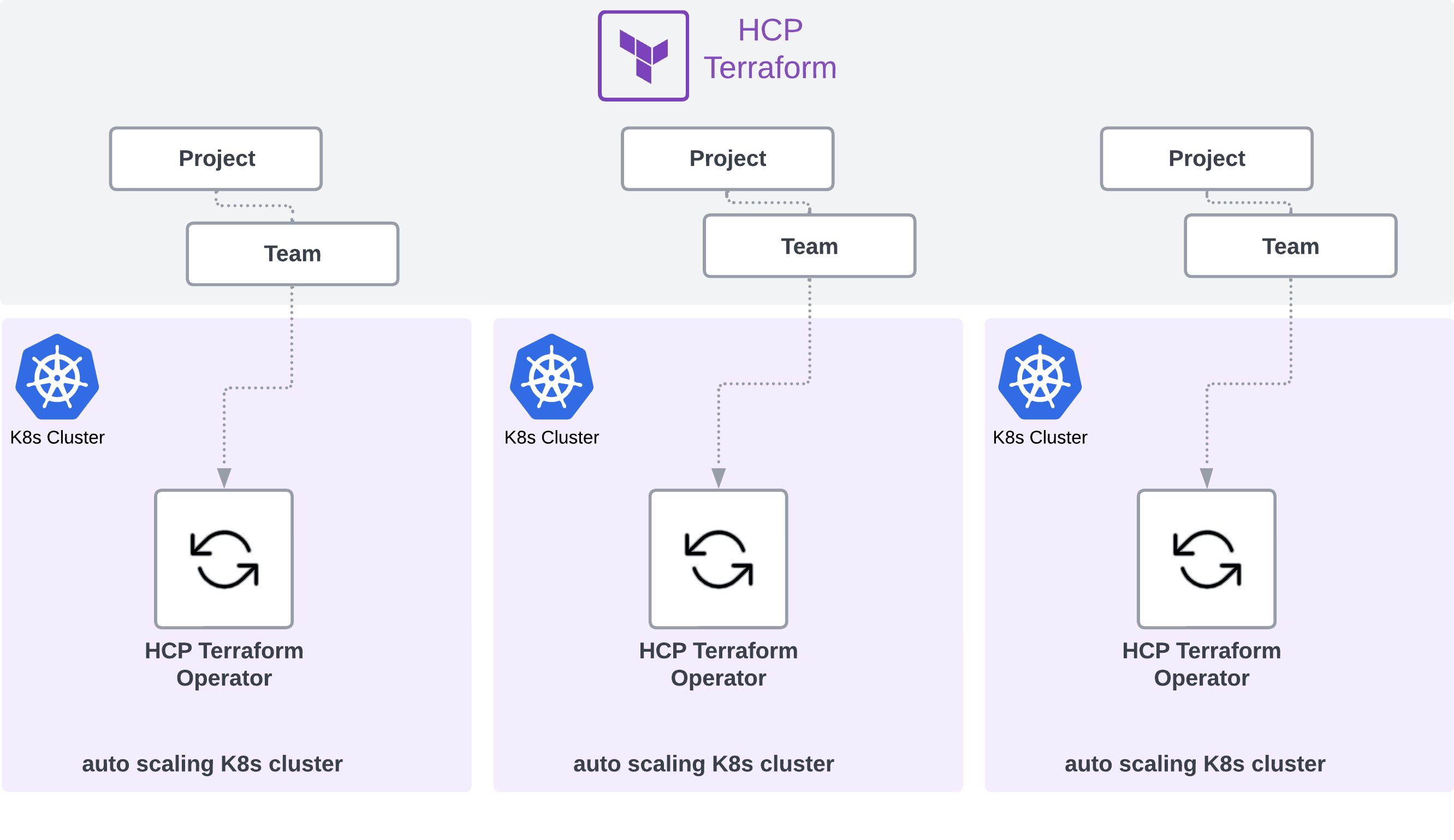

- Deploying a single HCP Terraform Operator per Kubernetes cluster and HCP Terraform platform team.

- Using unique (dedicated) team tokens per HCP Terraform Operator and Kubernetes cluster combination.

- Using Kubernetes cluster autoscaling where it is available. Managed services such as EKS Fargate, the AKS cluster autoscaler, and GKE Autopilot provide it.

When using the HCP Terraform Operator to enable self-service infrastructure for Kubernetes developers, the recommended approach is a dedicated cluster per HCP Terraform project. This approach simplifies scaling and security isolation and allows team-based cost allocation. Autoscaling clusters are preferable because they simplify capacity management.

Installing the Operator

The operator is installed in your Kubernetes cluster using a Helm chart.

You can install the HCP Terraform Operator using the Helm CLI directly or the Terraform Helm provider.

Instructions on how to install the operator can be found on our documentation site: Set up the HCP Terraform Operator for Kubernetes.

Security considerations

Token requirements

HCP Terraform Operator resources reference an HCP Terraform token stored in a Kubernetes secret. To improve your security posture, see Vault Secrets Operator with HCP Terraform Operator.

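Example: secret reference in the AgentPool CRD

A secret is referenced using secretKeyRef in the AgentPool CRD:

```yaml
token:
  secretKeyRef:
    name: tfc-operator   # name of the Kubernetes secret
    key: token           # key within the secret that holds the HCP Terraform token
```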
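The secret referenced above could be created with a manifest like the following. The namespace is an assumption (the secret is expected to live in the same namespace as the custom resource that references it), and the token value is a placeholder.

```yaml
# Sketch only: namespace and token value are placeholders.
apiVersion: v1
kind: Secret
metadata:
  name: tfc-operator                      # matches secretKeyRef.name
  namespace: default                      # assumed: same namespace as the custom resource
type: Opaque
stringData:
  token: "<HCP Terraform team token>"     # matches secretKeyRef.key; never commit real tokens
```

The kubectl create secret generic command with the --from-literal flag creates the same secret without writing the token to a manifest file.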

AgentPool Controller

The AgentPool controller requires you to generate an HCP Terraform token and reference it through a Kubernetes secret.

Permissions required

| Functionality required | Team Permissions |
|---|---|
| Create and manage agent pools | Manage agent pools (organization-level permission) |

Module and Workspace Controller

The Module and Workspace controllers target the application consumer or developer persona for self-service infrastructure. To enable self-service with controls inside a project, the recommended approach is for platform teams to create a project and team access for each application team as part of onboarding. Platform teams can manage this project using either the TFE provider or the Project controller of the HCP Terraform Operator.

Consumers can create workspaces within their project by referencing the project ID in the Workspace CRD. Specifying a project ID enables self-service while separating teams through HCP Terraform projects, and it also lets workspaces inherit project-level configuration for credentials and policy.
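A minimal sketch of a Workspace created inside a project by its ID, assuming the v1alpha2 API. The organization, workspace name, project ID, and variable are hypothetical; in practice the platform team would supply the project ID during onboarding.

```yaml
# Sketch only: the project ID and other names are hypothetical.
apiVersion: app.terraform.io/v1alpha2
kind: Workspace
metadata:
  name: demo-workspace
spec:
  organization: my-org
  token:
    secretKeyRef:
      name: tfc-operator          # secret holding a team token with Maintain project access
      key: token
  name: demo-workspace
  project:
    id: prj-XXXXXXXXXXXXXXXX      # hypothetical project ID from the platform team
  terraformVariables:
    - name: bucket_name           # hypothetical Terraform variable set on the workspace
      value: demo-bucket
```

Because the workspace is created in the project, it picks up whatever credentials and policy the platform team has scoped to that project.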

| Functionality required | Team Permissions |
|---|---|
| Create and manage workspaces within a project | Team token with Maintain project team access (full control of everything in the project, but not the project itself) |

Project Controller

The Project controller deploys and manages HCP Terraform projects. Because this is typically a platform team function that requires elevated access, run the Project controller, if you use it, in a separate HCP Terraform Operator dedicated to the platform team.

| Functionality required | Team Permissions |
|---|---|
| Create and manage projects and team access | Owners team token |
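A minimal sketch of a Project resource that a platform team might apply during onboarding, assuming the v1alpha2 API. The secret, project, and team names are hypothetical; the referenced secret is assumed to hold an owners team token, in line with the table above.

```yaml
# Sketch only: secret, project, and team names are hypothetical.
apiVersion: app.terraform.io/v1alpha2
kind: Project
metadata:
  name: team-a
spec:
  organization: my-org
  token:
    secretKeyRef:
      name: tfc-operator-owners   # hypothetical secret holding an owners team token
      key: token
  name: team-a                    # project name in HCP Terraform
  teamAccess:
    - team:
        name: team-a-developers   # hypothetical application team
      access: maintain            # project-level Maintain access for self-service
```

Granting the application team Maintain access matches the permission the Workspace and Module controllers need within the project.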

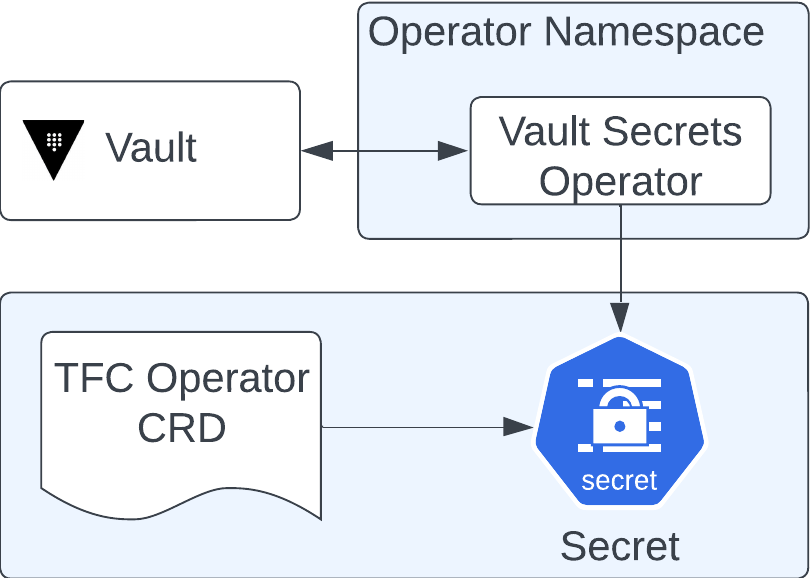

Vault Secrets Operator with HCP Terraform Operator

The Vault Secrets Operator syncs secrets from Vault into Kubernetes secrets in a specified namespace, where applications can access them. See https://github.com/hashicorp/vault-secrets-operator

The HCP Terraform Operator reads its HCP Terraform token from the Kubernetes secret referenced in each custom resource, so the Vault Secrets Operator can keep that secret populated from Vault.
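A minimal sketch of a VaultStaticSecret that keeps the tfc-operator secret populated from a Vault KV version 2 secret. The VaultAuth resource name, mount, and path are hypothetical, and the Vault secret is assumed to contain a key named token so that the synced Kubernetes secret matches the secretKeyRef used by the operator's custom resources.

```yaml
# Sketch only: VaultAuth name, mount, and path are hypothetical.
apiVersion: secrets.hashicorp.com/v1beta1
kind: VaultStaticSecret
metadata:
  name: tfc-operator-token
  namespace: default              # namespace of the HCP Terraform Operator custom resources
spec:
  vaultAuthRef: default           # hypothetical VaultAuth resource
  mount: secret                   # hypothetical KV v2 mount
  type: kv-v2
  path: hcp-terraform/operator    # hypothetical path; its data must include a "token" key
  refreshAfter: 60s               # how often to re-read the secret from Vault
  destination:
    name: tfc-operator            # Kubernetes secret the custom resources reference
    create: true                  # let the Vault Secrets Operator create the secret
```

With this in place, rotating the token in Vault updates the Kubernetes secret without editing the custom resources.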

Observability

The operator exposes metrics in Prometheus format for each controller, at the standard /metrics path over HTTPS on port 8443. By default, it exposes all metrics provided by controller-runtime; the full list is available in the Kubebuilder documentation.
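If you run the Prometheus Operator, a ServiceMonitor along these lines could scrape the operator's metrics endpoint. The namespace, Service label, and port name (https, assumed to map to port 8443) are assumptions. TLS verification is skipped here on the assumption that the endpoint uses a self-signed certificate; if the endpoint also requires authentication, add matching credentials to the endpoint definition.

```yaml
# Sketch only: namespace, labels, and port name are assumptions.
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: hcp-terraform-operator
  namespace: tfc-operator-system                      # hypothetical operator namespace
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: hcp-terraform-operator  # hypothetical label on the metrics Service
  endpoints:
    - port: https                  # assumed Service port name for 8443
      path: /metrics
      scheme: https
      tlsConfig:
        insecureSkipVerify: true   # assumes a self-signed certificate
```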

HCP Terraform agent metrics can be collected using OpenTelemetry. With the HCP Terraform Operator, use the env field of the agent container in the AgentPool agent deployment to set the TFC_AGENT_OTLP_ADDRESS variable (see https://developer.hashicorp.com/terraform/cloud-docs/agents/telemetry).
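A sketch of setting TFC_AGENT_OTLP_ADDRESS on the agent container, assuming agentDeployment.spec takes a standard PodSpec and the agent container is named tfc-agent. The collector address is hypothetical; 4317 is the conventional OTLP gRPC port.

```yaml
# Sketch only: collector address and container name are assumptions.
apiVersion: app.terraform.io/v1alpha2
kind: AgentPool
metadata:
  name: demo-agent-pool
spec:
  organization: my-org
  token:
    secretKeyRef:
      name: tfc-operator
      key: token
  name: demo-agent-pool
  agentTokens:
    - name: demo-agent-token
  agentDeployment:
    replicas: 1
    spec:
      containers:
        - name: tfc-agent                       # assumed agent container name
          image: hashicorp/tfc-agent:latest
          env:
            - name: TFC_AGENT_OTLP_ADDRESS      # where the agent sends telemetry
              value: otel-collector.observability.svc.cluster.local:4317   # hypothetical collector
```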