Dynamic credentials

One of the key features of Boundary is its ability to broker or inject dynamic credentials through integration with an external credential management system: HashiCorp Vault. Dynamic credential stores make this possible by providing on-demand, ephemeral credentials to users. They enable the secure generation and management of temporary, time-bound credentials for accessing targets such as databases, SSH servers, and other services. Unlike static credentials, which are long-lived and managed manually, dynamic credentials are created just in time and expire automatically after a specified period. This reduces the risk of credential leakage and narrows the window of opportunity for unauthorized access.

The integration between Boundary and Vault improves two main areas of concern for organizations:

- Security posture for remote access

- Workflow efficiency

Integrating Boundary and Vault achieves these goals by enabling end users to access targets without anyone having to distribute credentials manually.

Organizations should configure credentials to be dynamic and ephemeral by attaching a specific time to live (TTL) to each credential. This provides the highest level of security because it limits how long a credential can be used.

Timely access to resources improves workflow efficiency by replacing manual access approvals with tightly scoped, preconfigured access requests. Removing credential management from end users improves their workflow further.

When integrated with Vault, Boundary has to be assigned a periodic, renewable, orphan token from Vault. Each credential store needs a separate Vault token.

The following have no impact on Vault's client count:

- The number of Boundary targets that source credentials from the stores

- The number of users connecting to the targets

- The number of sessions that get created

- The number of credential libraries the credential store contains

Leveraging dynamic credentials

End users can connect to a target through three workflows:

Traditional Authentication: when an end user connects to a target, Boundary initiates the session, but the end user must know the credentials to authenticate into the session. This workflow is available for testing purposes, but it is not recommended because it places the burden of securely storing and managing credentials on users.

Credential Brokering: credentials are retrieved from a credential store and returned to the end user, who then enters them into the session when prompted by the target. This workflow is more secure than traditional authentication because credentials are centrally managed through Boundary. For more information, see the credential brokering concepts page.

Credential Injection (Recommended): credentials are retrieved from a credential store and injected directly into the session on behalf of the end user. This workflow is the most secure because credentials are never exposed to the end user, reducing the chance of a leaked credential. It is also more streamlined, because the user gets a passwordless experience. For more information, see the credential injection concepts page.

Vault and Boundary for dynamic credentials

HashiCorp recommends using Terraform to provision Boundary clusters (HCP or self-managed), create organizations and projects, and manage all Boundary resources. Provision the Vault cluster and its resources the same way, using the Vault Terraform provider.
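As a starting point, the following sketch shows a provider configuration that manages both products from one Terraform configuration. The variable names are hypothetical placeholders, and the Boundary provider is assumed to log in through a password auth method; adapt both to your environment.

```hcl
terraform {
  required_providers {
    boundary = {
      source = "hashicorp/boundary"
    }
    vault = {
      source = "hashicorp/vault"
    }
  }
}

# Hypothetical input variables; supply real values for your clusters.
variable "boundary_addr" { type = string }
variable "boundary_auth_method_id" { type = string }
variable "boundary_login_name" { type = string }
variable "boundary_password" {
  type      = string
  sensitive = true
}
variable "vault_addr" { type = string }

# Assumes a password auth method in Boundary.
provider "boundary" {
  addr                   = var.boundary_addr
  auth_method_id         = var.boundary_auth_method_id
  auth_method_login_name = var.boundary_login_name
  auth_method_password   = var.boundary_password
}

# With no token argument, the Vault provider reads VAULT_TOKEN from the environment.
provider "vault" {
  address = var.vault_addr
}
```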

Connecting from Boundary to private Vault

Connecting HCP Boundary to a private Vault cluster requires connectivity through an HCP worker. When you set up the HCP worker, create a worker filter for the credential store. The worker filter identifies which workers should act as proxies for the new credential store, ensuring that credentials are brokered from the private Vault cluster through those workers.

Example worker configuration

```hcl
worker {
  auth_storage_path = "./hcp-worker1"

  tags {
    type = ["worker", "vault"]
  }
}
```

Create a new Vault credential store with a worker filter.

Example command for creating a new Vault credential store with a worker filter:

```shell
boundary credential-stores create vault -scope-id $PROJECT_ID -vault-address $VAULT_ADDR -vault-token $CRED_STORE_TOKEN -worker-filter='"vault" in "/tags/type"'
```
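Following the Terraform recommendation, the same credential store could be declared with the Boundary provider as sketched below. The resource name and the var.project_id, var.vault_addr, and var.cred_store_token inputs are hypothetical and assumed to be defined elsewhere.

```hcl
resource "boundary_credential_store_vault" "private_vault" {
  name        = "private-vault"
  description = "Vault credential store reached through HCP workers tagged type=vault"
  scope_id    = var.project_id       # hypothetical: ID of the Boundary project
  address     = var.vault_addr       # hypothetical: private Vault address
  token       = var.cred_store_token # hypothetical: periodic, orphan, renewable token

  # Only workers whose "type" tag contains "vault" proxy requests to Vault.
  worker_filter = "\"vault\" in \"/tags/type\""
}
```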

For self-managed Boundary Enterprise, worker filters may not be required, because the workers are typically provisioned in private networks that already have connectivity to Vault.

Boundary organizations, projects, and Vault namespaces

Vault namespaces are a Vault Enterprise feature that provides isolated Vault environments. Each namespace has separate login paths and supports creating and managing data that is isolated to that namespace. This functionality enables you to provide Vault as a service to tenants.

Everything in Vault is path-based. Each path corresponds to an operation or secret in Vault, and the Vault API endpoints map to these paths, so writing a policy means granting permitted operations on specific paths. For example, to grant access to manage tokens in the root namespace, the policy path is `auth/token/*`. Managing tokens for a namespace named "education" uses the path `education/auth/token/*`.
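A sketch of how this looks in a Vault policy written in the root namespace: the first rule covers tokens in the root namespace, and the second reaches into the child namespace "education" by prefixing the namespace name. The capability list is illustrative only.

```hcl
# Manage tokens in the root namespace.
path "auth/token/*" {
  capabilities = ["create", "read", "update", "delete", "list"]
}

# Manage tokens in the "education" child namespace from the root namespace.
path "education/auth/token/*" {
  capabilities = ["create", "read", "update", "delete", "list"]
}
```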

Namespaces support secure multi-tenancy (SMT) within a single Vault Enterprise instance, with tenant isolation and administration delegation, so Vault administrators can empower delegates to manage their own tenant environments. When you create a namespace, you establish an isolated environment with separate login paths that functions as a "mini-Vault" instance within your Vault installation. Users can then create and manage their sensitive data within the confines of that namespace. Refer to the Vault namespaces documentation for additional information.

Boundary uses the concept of scopes in its domain model. There are three levels of scopes in Boundary, and each scope can contain multiple instances of its child scope:

- Global (Highest level scope)

- Organization (Intermediate level scope)

- Project (Lowest level scope)

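A minimal Terraform sketch of this hierarchy, assuming the Boundary provider is already configured. The global scope always exists, so only an organization and a project are created; their names are hypothetical.

```hcl
# Organization (intermediate scope) under the built-in global scope.
resource "boundary_scope" "org" {
  scope_id                 = "global"
  name                     = "lob-payments"
  description              = "Organization scope for one line of business"
  auto_create_admin_role   = true
  auto_create_default_role = true
}

# Project (lowest scope) that holds targets and credential stores.
resource "boundary_scope" "project" {
  scope_id               = boundary_scope.org.id
  name                   = "payments-databases"
  description            = "Project scope for database targets"
  auto_create_admin_role = true
}
```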

While designing the integration between Boundary and Vault, there are some key factors to consider:

| Requirement | Considerations |
|---|---|
| Organizational structure | What is your organizational structure? What level of granularity across lines of business (LOBs), divisions, teams, services, and apps needs to be reflected in the end-state design of Boundary and Vault from an organizational perspective? |
| Self-service requirements | Given your organizational structure, what level of self-service is required? How will Boundary credential stores be segregated and mapped to host catalogs? How will Vault policies be managed? Will teams need to directly manage policies for their own scope of responsibility, such as credential stores? |
| Audit requirements | What are the requirements for auditing the usage of Vault credentials within your organization? |
| Secrets engine requirements | What types of secrets engines will you use in your credential stores (KV, database, SSH, PKI, and so on)? For large organizations, each of these might require a different structuring pattern. For example, with the KV secrets engine, each team might have its own dedicated KV mount. |

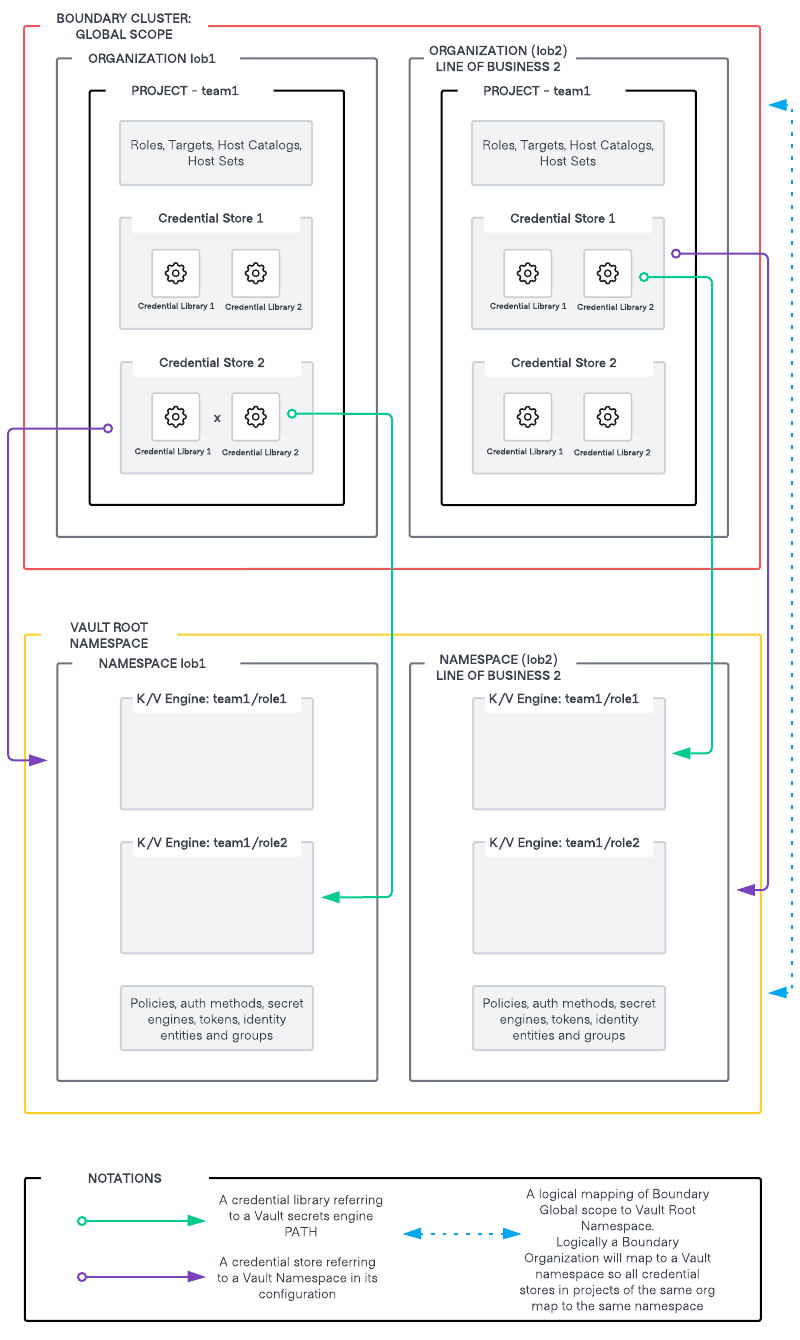

Considering these factors, a recommended pattern for mapping Boundary scopes to Vault namespaces is as follows:

- Logically, the Boundary global scope maps to the Vault root namespace.

- Each line of business (LOB) has a dedicated organization in Boundary and a dedicated namespace in Vault, so a Boundary organization logically maps to a Vault namespace. The mapping is only logical, because you cannot associate a Vault namespace with a Boundary organization scope directly; the namespace is set on each credential store.

- All credential stores created in projects that belong to the same organization refer to the same Vault namespace for secrets.

You can break this down further into hierarchical namespaces within Vault, with Boundary credential stores mapping to specific child namespaces. Keep the principle of least privilege in mind while designing these constructs.

Credential libraries refer to a particular path in the associated Vault namespace. You can use hierarchical paths in secrets engines such as KV v2 (a folder structure) to gain more granular control over mapping specific credential libraries in a credential store to specific paths within the same Vault mount.
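The following sketch combines both ideas: a Vault namespace for one LOB, a credential store pointing at it, and credential libraries pinned to separate folders in one KV v2 mount. It assumes a KV v2 mount named "secret" inside the namespace, a credential store token created in that namespace, and hypothetical var.project_id, var.vault_addr, and var.cred_store_token inputs.

```hcl
# One Vault namespace per line of business.
resource "vault_namespace" "lob_payments" {
  path = "lob-payments"
}

# Every credential store in this LOB's Boundary projects points at the namespace.
resource "boundary_credential_store_vault" "payments" {
  name      = "payments-store"
  scope_id  = var.project_id
  address   = var.vault_addr
  namespace = vault_namespace.lob_payments.path
  token     = var.cred_store_token # assumed to be issued in the lob-payments namespace
}

# Libraries narrow access to folders within the same KV v2 mount.
# KV v2 reads go through the data/ segment after the mount name.
resource "boundary_credential_library_vault" "team_a_db" {
  name                = "team-a-db"
  credential_store_id = boundary_credential_store_vault.payments.id
  path                = "secret/data/team-a/postgres"
  http_method         = "GET"
  credential_type     = "username_password"
}

resource "boundary_credential_library_vault" "team_b_db" {
  name                = "team-b-db"
  credential_store_id = boundary_credential_store_vault.payments.id
  path                = "secret/data/team-b/postgres"
  http_method         = "GET"
  credential_type     = "username_password"
}
```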

Additional recommendations on Vault namespace design are available in the Vault namespace documentation.

Vault credential TTL vs session TTL

Dynamic credentials generated from Vault, such as database credentials, have a time to live (TTL). If the TTL of the dynamic credentials expires before the session timeout, the session that uses them is forcefully terminated. If the session ends first, the credentials are revoked when the session terminates.

For sessions that use static credentials, the session TTL alone controls session termination. For dynamic credentials, whether brokered or injected, the credential TTL also dictates when the session terminates, so consider both values during design.
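One way to keep the two values from drifting apart is to derive both from a single local value, as in this sketch. The mount path "database", the connection name "postgres", the SQL statements, and the var.project_id and var.db_credential_library_id inputs are hypothetical. Whether to match the values exactly or leave headroom is a design decision for your environment.

```hcl
locals {
  # Single source of truth for credential and session lifetime (seconds).
  session_seconds = 3600
}

# Vault issues PostgreSQL credentials that live as long as a session may.
resource "vault_database_secret_backend_role" "readonly" {
  backend     = "database"
  name        = "readonly"
  db_name     = "postgres"
  default_ttl = local.session_seconds
  max_ttl     = local.session_seconds
  creation_statements = [
    "CREATE ROLE \"{{name}}\" WITH LOGIN PASSWORD '{{password}}' VALID UNTIL '{{expiration}}';",
    "GRANT SELECT ON ALL TABLES IN SCHEMA public TO \"{{name}}\";",
  ]
}

# Boundary caps the session at the same duration.
resource "boundary_target" "postgres" {
  type                           = "tcp"
  name                           = "postgres-readonly"
  scope_id                       = var.project_id
  default_port                   = 5432
  session_max_seconds            = local.session_seconds
  brokered_credential_source_ids = [var.db_credential_library_id]
}
```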

Boundary Credential Store Authentication to Vault

Boundary needs to look up, renew, and revoke tokens and leases to broker credentials properly. This requires the following minimum set of capabilities on the token that Boundary uses to authenticate to Vault:

- `auth/token/lookup-self`: read

- `auth/token/renew-self`: update

- `auth/token/revoke-self`: update

- `sys/leases/renew`: update

- `sys/leases/revoke`: update

- `sys/capabilities-self`: update
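Written as a Vault policy, the minimum set above looks like the following sketch. The policy name you register it under (for example, "boundary-controller") is up to you.

```hcl
# Minimum capabilities for the token a Boundary Vault credential store uses.
path "auth/token/lookup-self" {
  capabilities = ["read"]
}

path "auth/token/renew-self" {
  capabilities = ["update"]
}

path "auth/token/revoke-self" {
  capabilities = ["update"]
}

path "sys/leases/renew" {
  capabilities = ["update"]
}

path "sys/leases/revoke" {
  capabilities = ["update"]
}

path "sys/capabilities-self" {
  capabilities = ["update"]
}
```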

It is important to create policies that grant access to Vault secrets engines based on the principle of least privilege. For example, consider a database secrets engine that manages PostgreSQL credentials. Because Boundary only reads credentials from the database role, grant read access to that particular role only. In this scenario, a database secrets engine mounted at the path `database` requires the following permission:

- `database/creds/<role_name>`: read

Similarly, for connecting to Kubernetes pods using Vault-backed credentials, a Kubernetes secrets engine mounted at the path `kubernetes` requires the following permission:

- `kubernetes/creds/<role_name>`: update
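Expressed as Vault policies, the two examples might look like this sketch, where "readonly" and "pod-access" are hypothetical role names standing in for `<role_name>`. Keeping each rule pinned to one role, rather than using a wildcard, preserves least privilege.

```hcl
# Database secrets engine at "database": read credentials for one role only.
path "database/creds/readonly" {
  capabilities = ["read"]
}

# Kubernetes secrets engine at "kubernetes": credential generation is an update.
path "kubernetes/creds/pod-access" {
  capabilities = ["update"]
}
```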

Because the Vault token generated for Boundary must be periodic, orphan, and renewable, the following example creates a token that meets these requirements:

```shell
vault token create \
  -no-default-policy=true \
  -policy=<policy1> \
  -policy=<policy2> \
  -orphan=true \
  -period=<period> \
  -renewable=true
```
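If the token is managed with Terraform instead, the Vault provider's vault_token resource can express the same requirements, as in this sketch. The policy names and the 24h period are hypothetical; no_parent = true is what makes the token an orphan, which requires root or sudo capability on the creating token.

```hcl
resource "vault_token" "boundary_cred_store" {
  policies          = ["boundary-controller", "db-readonly"] # hypothetical policy names
  no_default_policy = true
  no_parent         = true  # orphan token
  renewable         = true
  period            = "24h" # periodic token; Boundary renews it before the period ends
}
```

The resulting client_token attribute can then be passed to the token argument of a boundary_credential_store_vault resource.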

How does this setup scale? Consider a scenario with multiple secrets engines and multiple roles:

- Should you generate an orphan token for each credential store?

- Should you generate an orphan token for each type of database, secrets engine, or mount, and give that token permissions for all roles in the mount?

- How should Boundary tokens be scoped?

- Should you create a single Boundary token for a project or organization?

Considering the multi-tenancy design for Boundary and Vault discussed in the previous section, one of the key considerations to keep in mind is how to manage the scope of the Vault orphan token associated with a Boundary credential store.

The recommended approach is to create the Vault orphan token in a specific Vault namespace that is used exclusively by a Boundary project, with policies attached that allow it to read or update the relevant Vault secrets engines.

For example, a project with two credential stores, one for PostgreSQL and one for AWS, requires two different orphan tokens, each with its appropriate scope.

Breaking this approach down further, so that orphan tokens are scoped to individual credential stores across every project, is very difficult to scale: you take on the overhead of creating and managing an orphan token and its Vault policies for each credential store, which becomes unmanageable when hundreds of credential stores reference secrets engines across multiple namespaces.
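A sketch of the PostgreSQL and AWS example, with both orphan tokens issued in the project's own Vault namespace. Generating tokens and stores from one map is one possible way to keep the per-project overhead small; it is a design suggestion, not a requirement. The namespace path, policy names, and var.project_id and var.vault_addr inputs are hypothetical.

```hcl
locals {
  project_namespace = "lob-payments/project-a" # hypothetical project namespace

  # Credential store name => policies for its token.
  stores = {
    postgres = ["boundary-controller", "postgres-readonly"]
    aws      = ["boundary-controller", "aws-readonly"]
  }
}

# One periodic, renewable, orphan token per credential store.
resource "vault_token" "store" {
  for_each          = local.stores
  namespace         = local.project_namespace
  policies          = each.value
  no_default_policy = true
  no_parent         = true
  renewable         = true
  period            = "24h"
}

resource "boundary_credential_store_vault" "store" {
  for_each  = local.stores
  name      = "${each.key}-store"
  scope_id  = var.project_id
  address   = var.vault_addr
  namespace = local.project_namespace
  token     = vault_token.store[each.key].client_token
}
```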

Credential injection

Credential injection is the process by which a credential is fetched from a credential store and then passed on to a worker for authentication to a remote machine. With credential injection, the user never sees the credential required to authenticate to the target. This provides a passwordless experience for the user, as the worker does both session establishment and authentication to the target on behalf of the user. This process differs from credential brokering, where credentials are returned to the user rather than injected into the session on worker nodes.

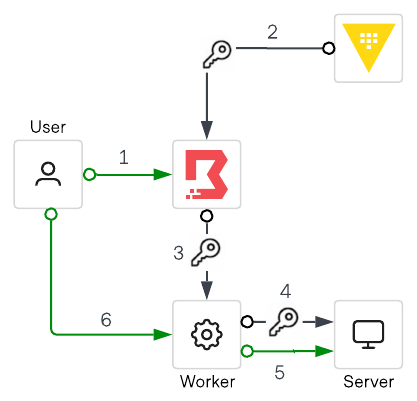

Consider a scenario where a user wants to access a target using SSH, as shown in the diagram above. The user must authenticate to Boundary and must be authorized to access the remote host. Once the user is authorized, a credential is generated for that particular session and injected directly into it, allowing the user to establish a remote session with the target.

Credential injection works as follows:

- A user initiates a session to connect to a remote target by authenticating to Boundary and choosing the target.

- The Boundary controller requests that Vault generate a dynamic SSH credential for this particular session. For a private Vault cluster, a self-managed HCP worker provides connectivity between the Boundary control plane and Vault.

- The Boundary controller provides the credential to the Boundary worker.

- The Boundary worker passes the credential to the target and authenticates on behalf of the user. In this workflow, the user or client never has access to the credential.

- Once authentication succeeds, the user session is established.
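For the SSH scenario, a Terraform sketch of the pieces involved might look like this: a credential library that has Vault sign a short-lived SSH certificate per session, and an SSH target that injects it. The Vault SSH mount path and role ("ssh-client-signer/sign/boundary-client"), the "ubuntu" username, and the var.credential_store_id, var.project_id, and var.host_set_id inputs are hypothetical.

```hcl
# Vault signs a fresh SSH certificate for each session.
resource "boundary_credential_library_vault_ssh_certificate" "ssh" {
  name                = "ssh-certs"
  credential_store_id = var.credential_store_id
  path                = "ssh-client-signer/sign/boundary-client"
  username            = "ubuntu"
  key_type            = "ecdsa"
  key_bits            = 521
  extensions = {
    permit-pty = ""
  }
}

# The worker injects the certificate; the user never sees it.
resource "boundary_target" "ssh" {
  type            = "ssh"
  name            = "linux-hosts"
  scope_id        = var.project_id
  default_port    = 22
  host_source_ids = [var.host_set_id]
  injected_application_credential_source_ids = [
    boundary_credential_library_vault_ssh_certificate.ssh.id,
  ]
}
```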

Credential injection supports both static and dynamic credential stores. For further details on credential injection and associated security considerations, refer to the credential injection documentation.