Consul multi-cluster and multi-tenant deployments

Prerequisites

Consul Enterprise v1.15+ (LTS) is installed

Consul ACL is enabled

An ACL token with `operator = "write"` and `acl = "write"` privileges
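As a minimal sketch of this prerequisite, the Python snippet below builds (without sending) the Consul HTTP API requests that create an ACL policy with these two rules and a token linked to it. The agent address, the policy name `platform-admin`, and the placeholder management token are assumptions for illustration. `PUT /v1/acl/policy`, `PUT /v1/acl/token`, and the `X-Consul-Token` header are part of Consul's HTTP API.

```python
import json
import urllib.request

# Assumptions for illustration: local agent address and a placeholder token.
CONSUL_ADDR = "http://127.0.0.1:8500"
BOOTSTRAP_TOKEN = "<management-token>"  # hypothetical placeholder, not a real secret

# ACL rules (HCL) granting the prerequisite privileges.
ADMIN_RULES = 'operator = "write"\nacl = "write"\n'


def consul_request(method, path, body, token=BOOTSTRAP_TOKEN):
    """Build (without sending) a Consul HTTP API request with a JSON body."""
    return urllib.request.Request(
        url=f"{CONSUL_ADDR}{path}",
        data=json.dumps(body).encode("utf-8"),
        method=method,
        headers={"X-Consul-Token": token, "Content-Type": "application/json"},
    )


def admin_policy_request(name="platform-admin"):
    # PUT /v1/acl/policy creates a policy from HCL rules.
    return consul_request("PUT", "/v1/acl/policy", {
        "Name": name,
        "Description": "Operator and ACL write for Consul platform engineers",
        "Rules": ADMIN_RULES,
    })


def admin_token_request(policy_name="platform-admin"):
    # PUT /v1/acl/token creates a token linked to the policy by name.
    return consul_request("PUT", "/v1/acl/token", {
        "Description": "Consul platform engineer token",
        "Policies": [{"Name": policy_name}],
    })


if __name__ == "__main__":
    for req in (admin_policy_request(), admin_token_request()):
        # Inspect the requests; pass them to urllib.request.urlopen() to send.
        print(req.get_method(), req.full_url)
        print(req.data.decode("utf-8"))
```

Passing each request to `urllib.request.urlopen()` would send it. In practice, the `consul acl policy create` and `consul acl token create` CLI commands do the same job.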

Scope

- The scope of this section is limited to service discovery.

Consul multi-tenant

Managing a centrally operated Consul platform can present numerous operational challenges as you onboard more customers (applications) to your Consul platform. One key design pattern for scaling shared platforms is multi-tenancy: the ability to host and manage multiple customers on a common (shared) infrastructure. Delegating administrative privileges per tenant allows for self-service, thereby reducing dependency on the Consul platform team.

To effectively manage Consul, HashiCorp recommends a multi-tenancy architecture that uses Consul’s multi-tenancy features: namespaces and admin partitions. This section covers both features and provides recommendations.

Personas

It is a best practice to identify the key individuals responsible for designing and implementing Consul multi-tenancy, as well as the end-users of the system within your organization.

- Consul platform engineer: manages the health and stability of the Consul platform, including creating namespaces and the corresponding operator tokens for each tenant.

- Security engineer: defines the roles and policies that namespace operators are allowed to create.

- Application team:

  - Namespace operator: manages a tenant (namespace) and creates policies, roles, and tokens for application developers.

  - Application developer: registers services to Consul.

Consul namespaces

Consul namespaces, an Enterprise feature introduced in version 1.7.0, allow for the isolation of data and configurations between tenants. A tenant can be a team, an application, or a user.

Multi-tenancy with namespaces

With namespace-level isolation, resource names such as service names only need to be unique within a namespace rather than across the whole cluster, which enables self-service. Consul operators can delegate namespace-level operator privileges, allowing tenants to manage their own namespace without disrupting others.
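To illustrate how namespaces remove the need for globally unique names, the hypothetical helpers below build the Consul DNS name and the catalog API URL for a service. Two tenants can each register a service called `web` and still resolve to distinct names. The sketch assumes the default `consul` DNS domain and a local agent at `127.0.0.1:8500`. The `.ns`, `.ap`, and `.dc` labels follow Consul Enterprise's DNS query format, and `ns` and `partition` are catalog API query parameters.

```python
from urllib.parse import urlencode

CONSUL_ADDR = "http://127.0.0.1:8500"  # assumption: local agent
DNS_DOMAIN = "consul"                 # assumption: default DNS domain


def service_dns_name(service, namespace=None, partition=None, datacenter=None):
    """Build a Consul Enterprise DNS name:
    <service>.service[.<namespace>.ns][.<partition>.ap][.<datacenter>.dc].<domain>
    """
    labels = [service, "service"]
    if namespace:
        labels += [namespace, "ns"]
    if partition:
        labels += [partition, "ap"]
    if datacenter:
        labels += [datacenter, "dc"]
    labels.append(DNS_DOMAIN)
    return ".".join(labels)


def catalog_url(service, namespace=None, partition=None):
    """Build a catalog API URL; `ns` and `partition` are query parameters."""
    params = {k: v for k, v in (("ns", namespace), ("partition", partition)) if v}
    query = f"?{urlencode(params)}" if params else ""
    return f"{CONSUL_ADDR}/v1/catalog/service/{service}{query}"


if __name__ == "__main__":
    # Same service name, different tenants: the names do not collide.
    print(service_dns_name("web", namespace="team-a", datacenter="dc1"))
    print(service_dns_name("web", namespace="team-b", datacenter="dc1"))
    print(catalog_url("web", namespace="team-a"))
```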

Implementing namespaces

Design

Use the `default` namespace solely for administering Consul globally. Do not register services in it.

Determine namespaces based on access privileges, typically mapping to application teams.

Consult with your security team to define namespace-level roles and policies, such as operator, read-write users, and read-only users.
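A minimal onboarding sketch, assuming a platform engineer token and a local agent: it builds the requests that create a tenant namespace, a namespace-scoped operator policy, and an operator token for that namespace. The rules in `NAMESPACE_OPERATOR_RULES` are illustrative only and should be replaced with the policies your security team defines. Names such as `team-a-operator` are hypothetical.

```python
import json
import urllib.request

CONSUL_ADDR = "http://127.0.0.1:8500"         # assumption: local agent
OPERATOR_TOKEN = "<platform-engineer-token>"  # hypothetical placeholder

# Illustrative namespace-operator rules; review with your security team.
NAMESPACE_OPERATOR_RULES = """
acl = "write"
service_prefix "" {
  policy = "write"
}
key_prefix "" {
  policy = "write"
}
"""


def consul_request(method, path, body):
    """Build (without sending) a Consul HTTP API request with a JSON body."""
    return urllib.request.Request(
        url=f"{CONSUL_ADDR}{path}",
        data=json.dumps(body).encode("utf-8"),
        method=method,
        headers={"X-Consul-Token": OPERATOR_TOKEN},
    )


def tenant_requests(namespace):
    """Requests a platform engineer might issue to onboard one tenant."""
    policy_name = f"{namespace}-operator"
    return [
        # 1. Create the tenant namespace (PUT /v1/namespace, Enterprise only).
        consul_request("PUT", "/v1/namespace", {
            "Name": namespace,
            "Description": f"Namespace for tenant {namespace}",
        }),
        # 2. Create the operator policy inside that namespace.
        consul_request("PUT", "/v1/acl/policy", {
            "Name": policy_name,
            "Namespace": namespace,
            "Rules": NAMESPACE_OPERATOR_RULES,
        }),
        # 3. Issue a token for the namespace operator, linked to that policy.
        consul_request("PUT", "/v1/acl/token", {
            "Description": f"Namespace operator for {namespace}",
            "Namespace": namespace,
            "Policies": [{"Name": policy_name}],
        }),
    ]


if __name__ == "__main__":
    for req in tenant_requests("team-a"):
        print(req.get_method(), req.full_url, req.data.decode("utf-8"))
```

The namespace operator can then use the returned token to create roles and tokens for application developers inside `team-a` without touching other tenants.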

Deployment

- Refer to the step-by-step guides "Setup secure namespaces" and "Register and discover services within namespaces".

Limitations

Consul namespaces offer logical isolation but have limitations that may not meet all requirements:

Configurations such as proxy defaults are global and cannot be isolated per tenant. Teams must coordinate on global configuration changes.

IP addresses cannot overlap. Although namespaces provide logical isolation, tenants still require non-overlapping IP ranges (e.g., Kubernetes IPs).

Complete tenant autonomy is not possible, because global configuration is shared across all tenants.

These limitations are manageable in small environments but can be cumbersome in large-scale settings. To address them, Consul introduced admin partitions, which are explored in the following section.


Consul admin partitions

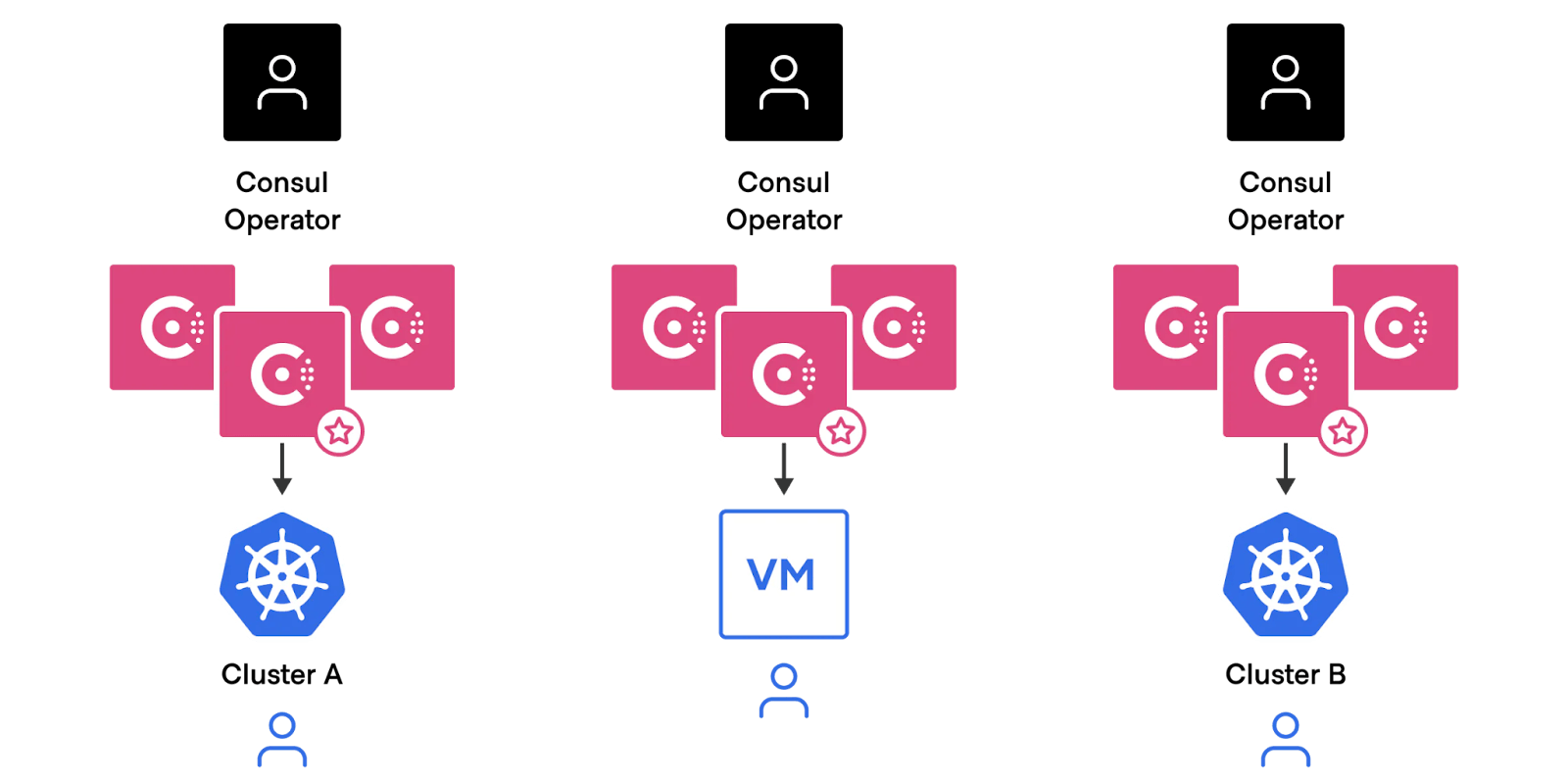

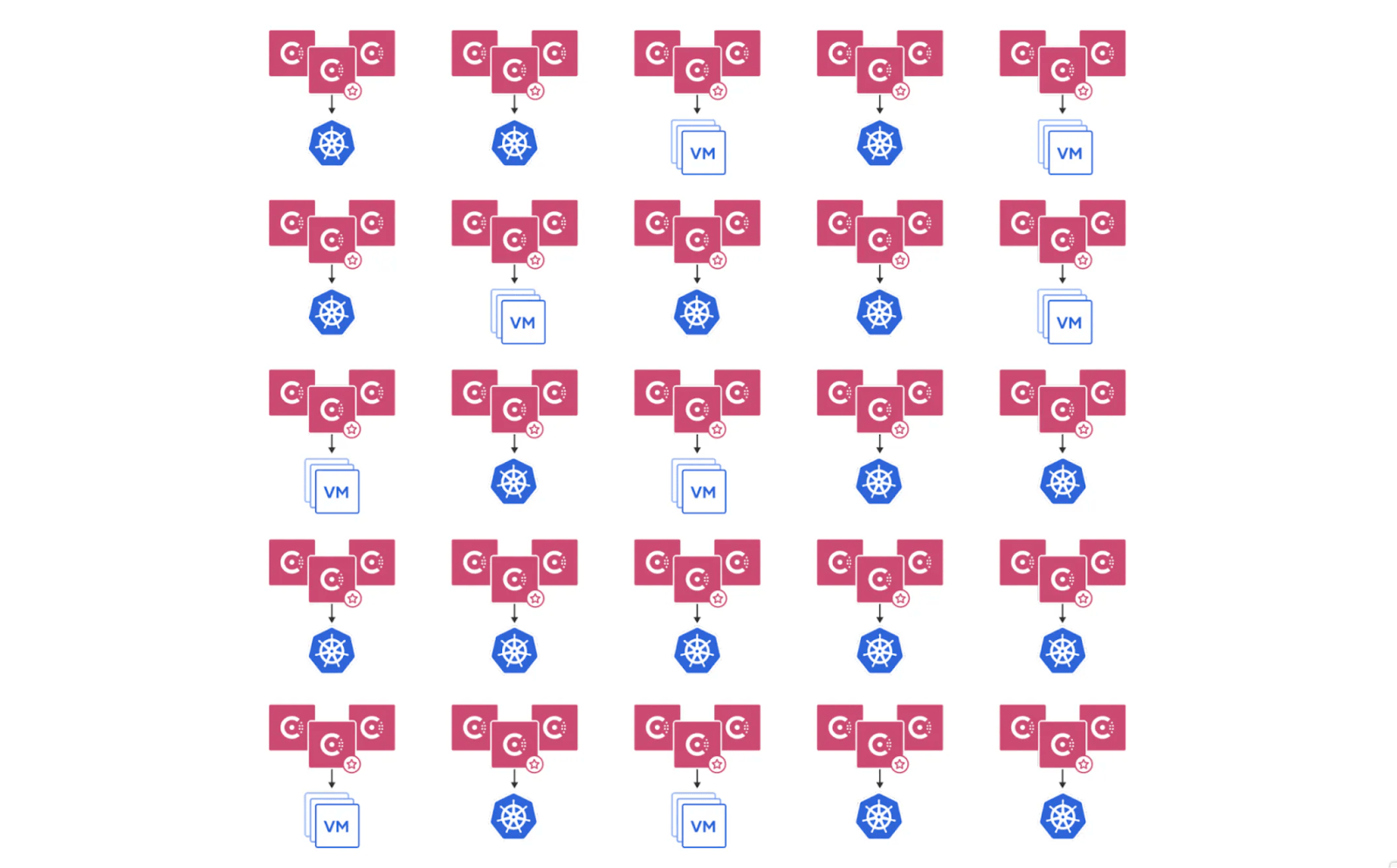

Organizations often deploy multiple Consul clusters to achieve full control and autonomy between tenants, with each cluster dedicated to a different team or service. This approach requires each team to manage both the data plane and the control plane independently.

While this approach is manageable with a few Consul clusters, it presents significant challenges in large-scale deployments, including management overhead, resource constraints, governance and compliance issues, and high costs.

Multi-tenancy with admin partitions

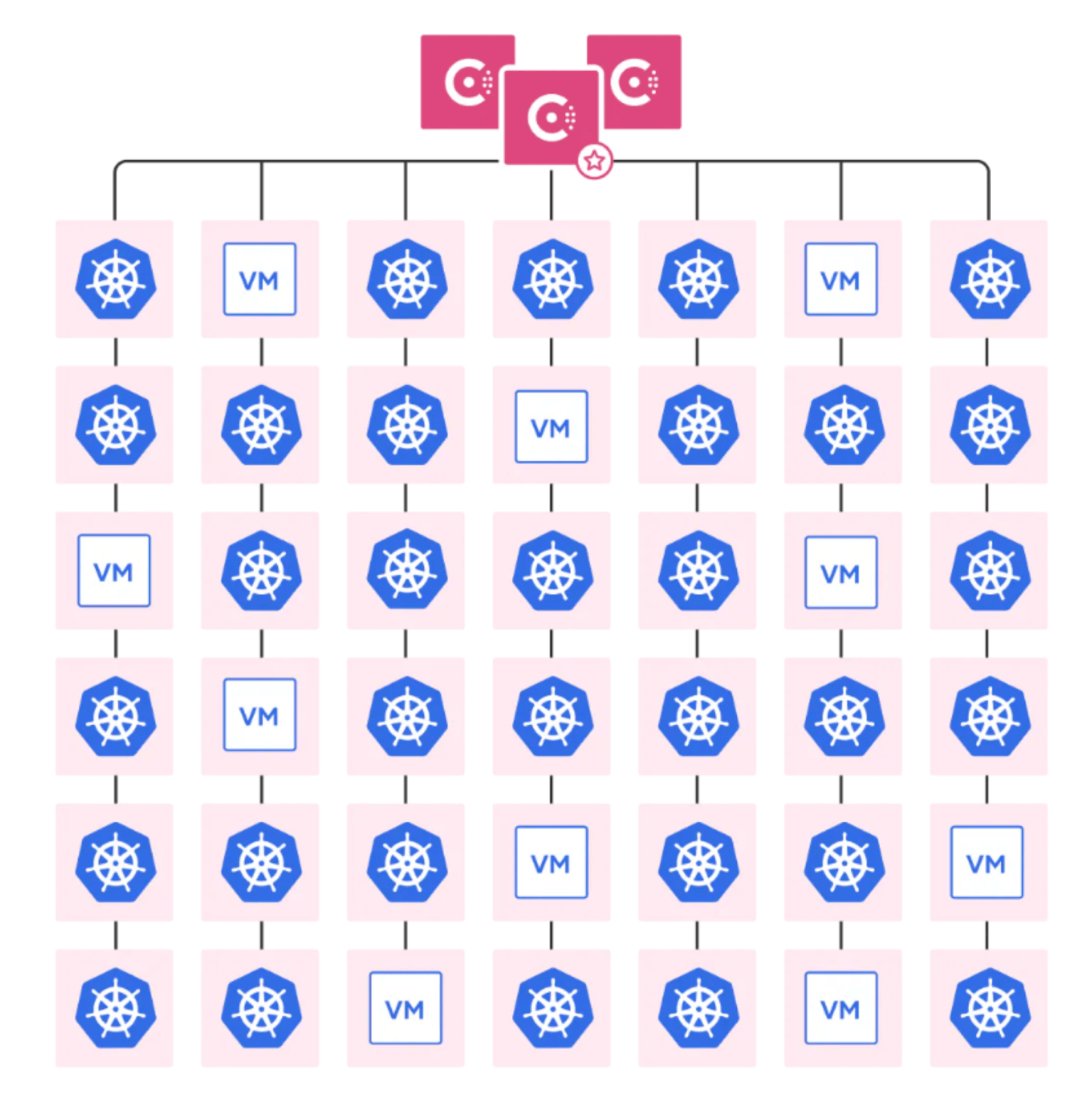

Introduced in Consul Enterprise v1.11, admin partitions provide stronger isolation between tenants than namespaces alone, addressing the gaps described above and allowing for complete tenant autonomy.

Consul platform operators can now create multiple partitions within a single data center. These admin partitions are fully isolated from one another while leveraging the same control plane. Each tenant can operate autonomously within an admin partition without the burden of managing the control plane.
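As a sketch of how a platform operator might carve out a partition for a tenant, the hypothetical `onboard_partition` helper below builds a `PUT /v1/partition` request, followed by `PUT /v1/namespace` requests whose `Partition` field places each namespace inside the new partition. The server address, token placeholder, and partition and namespace names are assumptions.

```python
import json
import urllib.request

CONSUL_ADDR = "http://127.0.0.1:8500"  # assumption: a Consul server address
TOKEN = "<operator-write-token>"        # hypothetical placeholder


def consul_request(method, path, body):
    """Build (without sending) a Consul HTTP API request with a JSON body."""
    return urllib.request.Request(
        url=f"{CONSUL_ADDR}{path}",
        data=json.dumps(body).encode("utf-8"),
        method=method,
        headers={"X-Consul-Token": TOKEN},
    )


def onboard_partition(partition, namespaces):
    """Create an admin partition, then tenant namespaces inside it."""
    reqs = [consul_request("PUT", "/v1/partition", {
        "Name": partition,
        "Description": f"Admin partition for {partition}",
    })]
    for ns in namespaces:
        # The Partition field places the namespace inside the new partition.
        reqs.append(consul_request("PUT", "/v1/namespace", {
            "Name": ns,
            "Partition": partition,
        }))
    return reqs


if __name__ == "__main__":
    for req in onboard_partition("ap1", ["frontend", "backend"]):
        print(req.get_method(), req.full_url, req.data.decode("utf-8"))
```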

Implementing admin partitions

Design

Determine admin partitions based on access privileges, typically mapping to application teams whose workloads run and are managed in their own environments or clusters (e.g., VPCs, Kubernetes clusters).

Within each admin partition, operators can create multiple namespaces for additional separation to effectively manage their tenant/partition.

If services need to be discovered in other admin partitions, use the `exported-services` configuration entry.

Leverage Consul sameness groups:

Sameness groups (available in Consul Enterprise v1.16 and later) designate a set of admin partitions as functionally identical within your network.

Adding an admin partition to a sameness group enables Consul to recognize services registered to remote partitions with cluster peering connections as instances of the same service when they share a name and namespace.
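Both design options above are expressed as Consul configuration entries. The sketch below generates an `exported-services` entry that shares an `api` service from partition `ap1` with partition `ap2`, and a `sameness-group` entry that groups `ap1` with a peered partition. All names (`api`, `ap1`, `ap2`, `products`, `dc2-ap1`) are hypothetical. The printed JSON can be saved to a file and applied with `consul config write <file>`.

```python
import json


def exported_services(partition, service, namespace, consumer_partitions):
    """exported-services entry; its Name must match the exporting partition."""
    return {
        "Kind": "exported-services",
        "Name": partition,
        "Partition": partition,
        "Services": [{
            "Name": service,
            "Namespace": namespace,
            "Consumers": [{"Partition": p} for p in consumer_partitions],
        }],
    }


def sameness_group(name, local_partition, peers):
    """sameness-group entry treating the local partition and peers as identical."""
    return {
        "Kind": "sameness-group",
        "Name": name,
        "Partition": local_partition,
        "DefaultForFailover": True,
        "IncludeLocal": True,
        # With IncludeLocal set, the local partition is listed first.
        "Members": [{"Partition": local_partition}] + [{"Peer": p} for p in peers],
    }


if __name__ == "__main__":
    for entry in (
        exported_services("ap1", "api", "default", ["ap2"]),
        sameness_group("products", "ap1", ["dc2-ap1"]),
    ):
        print(json.dumps(entry, indent=2))
```

`DefaultForFailover` is set to `true` here so that the group is used for failover when no explicit failover targets are configured; treat that as a design choice to review rather than a requirement.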

Deployment

- For detailed instructions on deploying admin partitions in an EKS environment, please refer to the hands-on tutorial titled “Multi-cluster applications with Consul Enterprise admin partitions”.

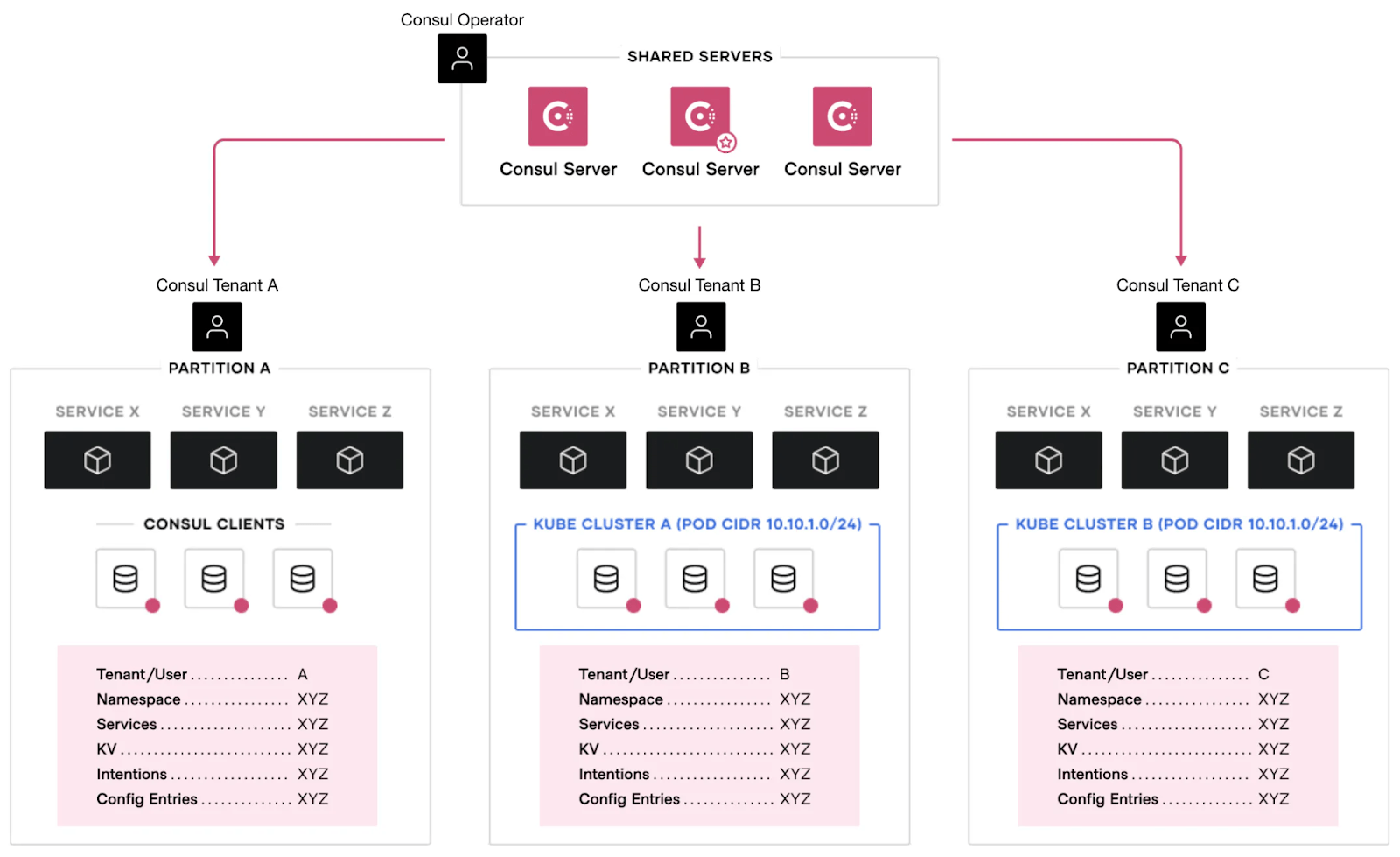

Architecture

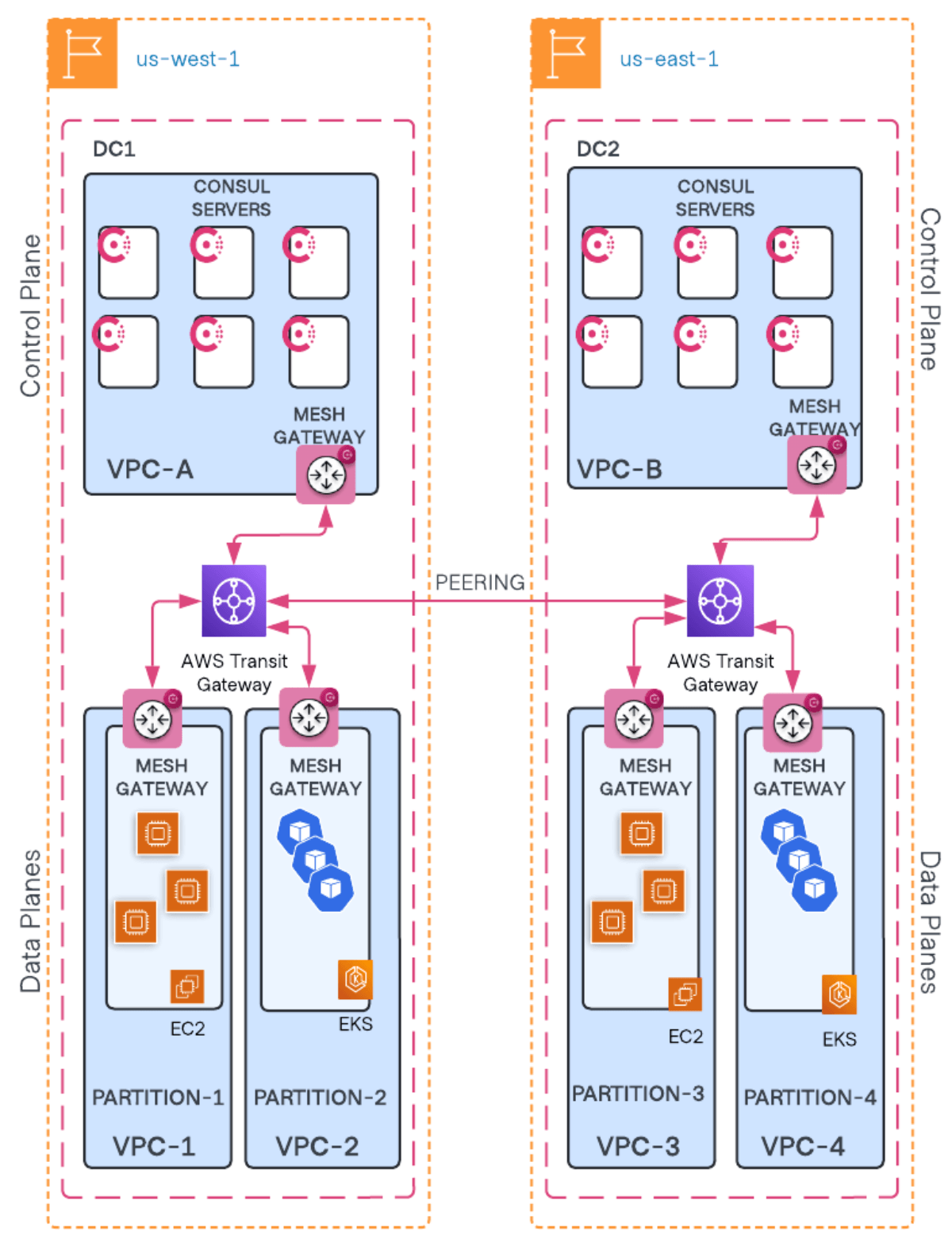

The diagram above illustrates an example of a multi-cluster application deployment using admin partitions.

In this setup, the Consul control plane connects to three clusters (data planes):

- One VM cluster (Partition A)

- Two Kubernetes clusters (Partitions B and C)

Each partition operates independently with its own users, namespaces, KV store, configurations, intentions, and services.

A complete HashiCorp-recommended production deployment architecture can be found in the "Consul: Solution Design Guide".


Consul multi-cluster

The use of Consul namespaces along with admin partitions provides the tenant-level isolation needed for multi-tenancy. However, large enterprises often have additional requirements such as high availability, low latency, governance and compliance, and continued operations under DDIL (Denied, Disrupted, Intermittent, and Limited bandwidth) conditions.

High availability: Ensuring services are highly available and capable of automatic failover across clusters (including across regions and cloud providers) is critical to minimizing application downtime.

Low latency: For time-sensitive applications, low latency is essential. Co-locating Consul clusters near users may be necessary to achieve this.

Governance and compliance: Regulatory requirements, such as GDPR, may require that data within Consul clusters remain within specific geographic or national boundaries.

Continued operations under DDIL: Cluster peering also supports resilient operations in DDIL environments, a critical requirement in the public sector and especially for Federal and DoD organizations. When network connectivity is available, peering connections are maintained and services can be shared; when clusters are disconnected, operations continue. The same model allows organizations to quickly peer with mission partners in time of need and safely disconnect when the connection is no longer necessary.

These requirements can be met by connecting or federating multiple Consul data centers, forming Consul multi-cluster architectures.

Cluster peering

As organizations scale, they may need to deploy services across multiple cloud providers and regions. Cluster peering enables the connection of multiple Consul clusters, allowing services in one cluster to securely discover and communicate with services in another.

Consul supports several approaches to connect clusters, broadly categorized as:

- WAN federation

- Cluster peering

For flexibility, reduced complexity, and ease of management, HashiCorp recommends the Consul cluster peering approach. Cluster peering reduces administrative burdens compared to WAN federation. With cluster peering, there is no primary cluster, so administrative boundaries are clearly separated per cluster and changes in one Consul cluster do not affect peered clusters.
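The peering handshake can be sketched with two HTTP API calls: one cluster generates a peering token (`POST /v1/peering/token`) and the other uses it to establish the connection (`POST /v1/peering/establish`). The cluster addresses, ACL tokens, and peer names below are hypothetical, and TLS CA configuration is omitted for brevity.

```python
import json
import urllib.request

# Assumptions: two clusters reachable at these addresses, with placeholder tokens.
CLUSTER_01 = {"addr": "https://consul-01.example.internal:8501", "token": "<token-01>"}
CLUSTER_02 = {"addr": "https://consul-02.example.internal:8501", "token": "<token-02>"}


def post(cluster, path, body):
    """Build (without sending) a POST request against one cluster's HTTP API."""
    return urllib.request.Request(
        url=f"{cluster['addr']}{path}",
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={"X-Consul-Token": cluster["token"]},
    )


def generate_token_request(peer_name):
    # Sent to the accepting cluster; the response JSON has a "PeeringToken" field.
    return post(CLUSTER_01, "/v1/peering/token", {"PeerName": peer_name})


def establish_request(peer_name, peering_token):
    # Sent to the dialing cluster with the token produced by the other side.
    return post(CLUSTER_02, "/v1/peering/establish",
                {"PeerName": peer_name, "PeeringToken": peering_token})


def peer_clusters(send=urllib.request.urlopen):
    """Generate a token on cluster-01, then establish the peering from cluster-02."""
    with send(generate_token_request("cluster-02")) as resp:
        token = json.load(resp)["PeeringToken"]
    with send(establish_request("cluster-01", token)) as resp:
        return resp.status
```

The equivalent CLI commands are `consul peering generate-token` and `consul peering establish`.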

Implementing multi-cluster with Consul cluster peering

Design

Ensure appropriate networking configuration so all Consul clusters are reachable. In AWS, connecting VPCs via AWS Transit Gateway is the recommended approach.

Configure Consul mesh gateways in each Consul data center. These gateways will facilitate communication between Consul data centers via the Transit Gateway.

For resiliency, configure multiple Consul mesh gateways, preferably through an Auto Scaling group.
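A sketch of configuration entries that route peering traffic through the mesh gateways: a `mesh` entry with `PeerThroughMeshGateways` enabled, and a `proxy-defaults` entry that sends sidecar traffic to the local mesh gateway. Whether `local` mode fits your topology is an assumption to validate. The printed JSON can be applied with `consul config write`.

```python
import json

# Route peering control-plane traffic through mesh gateways.
MESH_ENTRY = {
    "Kind": "mesh",
    "Peering": {
        "PeerThroughMeshGateways": True,
    },
}

PROXY_DEFAULTS_ENTRY = {
    "Kind": "proxy-defaults",
    "Name": "global",  # proxy-defaults entries are named "global"
    "MeshGateway": {
        # Sidecars send cross-cluster traffic to the local mesh gateway.
        "Mode": "local",
    },
}


def render(entries):
    """Serialize entries as JSON documents suitable for `consul config write`."""
    return [json.dumps(e, indent=2, sort_keys=True) for e in entries]


if __name__ == "__main__":
    for doc in render([MESH_ENTRY, PROXY_DEFAULTS_ENTRY]):
        print(doc)
```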

Deployment

- Refer to the hands-on tutorial “Connect services between Consul datacenters with cluster peering” for detailed instructions on deploying Consul cluster peering in Kubernetes.

Recommended architecture


Conclusion

Consul offers robust multi-tenancy features, namespaces and admin partitions, along with cluster peering for multi-cluster deployments. Together, they support the design and deployment of a self-service Consul platform that is resilient and scalable.